Monitors as Code

Overview

Monte Carlo developed a YAML-based monitors configuration to help teams deploy monitors as part of their CI/CD process. The following guide explains how to get started with monitors as code.

Prerequisites

- Install the CLI: https://docs.getmontecarlo.com/docs/using-the-cli

- When running montecarlo configure, provide your API key.

Using code to define monitors

First, you will need to create a Monte Carlo project. A Monte Carlo project is simply a directory containing a montecarlo.yml file, which holds project-level configuration options. If you are using DBT, we recommend placing montecarlo.yml in the same directory as dbt_project.yml.

The montecarlo.yml format:

version: 1
default_resource: <string>
include_file_patterns:
  - <string>
exclude_file_patterns:
  - <string>
namespace: <string - optional>

Description of options inside the montecarlo.yml file:

- version: The version of the MC configuration. Set to 1.
- default_resource: The warehouse friendly name or UUID where YAML-defined monitors will be created. The warehouse UUID can be obtained via the getUser API as described here.
  - If your account only has a single warehouse configured, MC will use this warehouse by default, and this option does not need to be defined.
  - If you have multiple warehouses configured, you will need to (1) define default_resource, and (2) specify the warehouse friendly name or UUID explicitly in each monitor's resource property to override the default resource (see the YAML format for configuring monitors below).
- include_file_patterns: List of file patterns to include when searching for monitor configuration files. By default, this is set to **/*.yaml and **/*.yml. With these defaults, MC searches every directory nested within the project directory, recursively, for files with a yaml or yml extension.
- exclude_file_patterns: List of file patterns to exclude when searching for monitor configuration files. For example: directory_name/*, filename__*, *.format

Example montecarlo.yml configuration file, which should be sufficient for customers with a single warehouse:

version: 1

Example montecarlo.yml configuration file, for customers with multiple warehouses configured.

version: 1
default_resource: bigquery
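The remaining montecarlo.yml options can be combined in one file. The sketch below assumes a hypothetical layout where monitor files live under monitors/ and drafts under monitors/drafts/; the pattern syntax follows the defaults described above, and the namespace analytics_monitors is a placeholder.

```yaml
version: 1
default_resource: bigquery          # placeholder warehouse friendly name
include_file_patterns:
  - monitors/**/*.yml               # hypothetical directory holding monitor files
exclude_file_patterns:
  - monitors/drafts/*               # hypothetical work-in-progress monitors to skip
namespace: analytics_monitors       # hypothetical namespace for this project's monitors
```

If an explicit include list replaces the **/*.yaml and **/*.yml defaults, monitors embedded in dbt schema.yml files outside monitors/ would no longer be found, so keep the defaults if you rely on embedded definitions.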

Defining individual monitors

Define monitors in YML files separate from montecarlo.yml

Your montecarlo.yml file should only be used to define project-level configuration options. Use separate YML files to define individual monitors.

Monitors are defined in YAML files within directories nested within the Monte Carlo project. Monitors can be configured in standalone YAML files, or embedded within DBT schema.yml files within the meta property of a DBT model definition.

Monitor definitions inside YML files must conform to the expected format to be processed successfully by the CLI. Some example monitor configurations that define the most basic options are shown below.

For an exhaustive list of configuration options and their definitions, refer to the Monitor configuration reference section below.

Example of a standalone monitor definition:

namespace: <string - optional>
montecarlo:
  field_health:
    - table: project:dataset.table_name
      timestamp_field: created
      resource: default warehouse override
      name: field_health_test_monitor
  dimension_tracking:
    - table: project:dataset.table_name
      timestamp_field: created
      field: order_status
      resource: default warehouse override
      name: dt_test_monitor

Example of monitor embedded within a DBT schema.yml file:

version: 2
models:
  - name: table_name
    description: My table
    meta:
      montecarlo:
        field_health:
          - table: project:dataset.table_name
            timestamp_field: created
            name: field_health_test_monitor
        dimension_tracking:
          - table: '[[ ref("table_name") ]]'
            name: dimension_tracking_for_table_name
            description: 'Tracks dimensions for [[ ref("table_name") ]]'
            timestamp_field: created
            field: order_status

dbt ref resolution

The snippet above shows how dbt ref("<model_name>") notation can be resolved in any string field within Monte Carlo monitor configs. Wrap the ref in [[ ]] and quote the string, as in the example above. To resolve refs, pass the --dbt-manifest <path_to_dbt_manifest> argument to the CLI when applying the monitor config, pointing it at your dbt manifest.json file (by default created in the target/ directory after running dbt commands like compile or run). Each dbt ref is looked up in the manifest and replaced with the full table name. This feature requires version 0.42.0 or newer of the Monte Carlo CLI.
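The same ref syntax applies to other monitor types. As a minimal sketch, assuming a hypothetical dbt model named orders, this schema.yml embeds a freshness monitor whose table and description are both resolved from the manifest at apply time (for example with montecarlo monitors apply --namespace <namespace> --dbt-manifest target/manifest.json).

```yaml
version: 2
models:
  - name: orders                              # hypothetical dbt model
    meta:
      montecarlo:
        freshness:
          - name: orders_freshness            # hypothetical monitor name
            table: '[[ ref("orders") ]]'      # quoted, resolved via --dbt-manifest
            description: 'Freshness of [[ ref("orders") ]]'
            freshness_threshold: 360          # minutes, i.e. 6 hours
            schedule:
              type: fixed
              interval_minutes: 60
```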

Tip: Using monitors as code with DBT

If your organization already uses DBT, you may find that embedding monitor configurations within DBT schema.yml files makes maintenance easier, as all configuration and metadata for a given table are maintained in the same location. For an example DBT repo with some basic monitor configuration, click here.

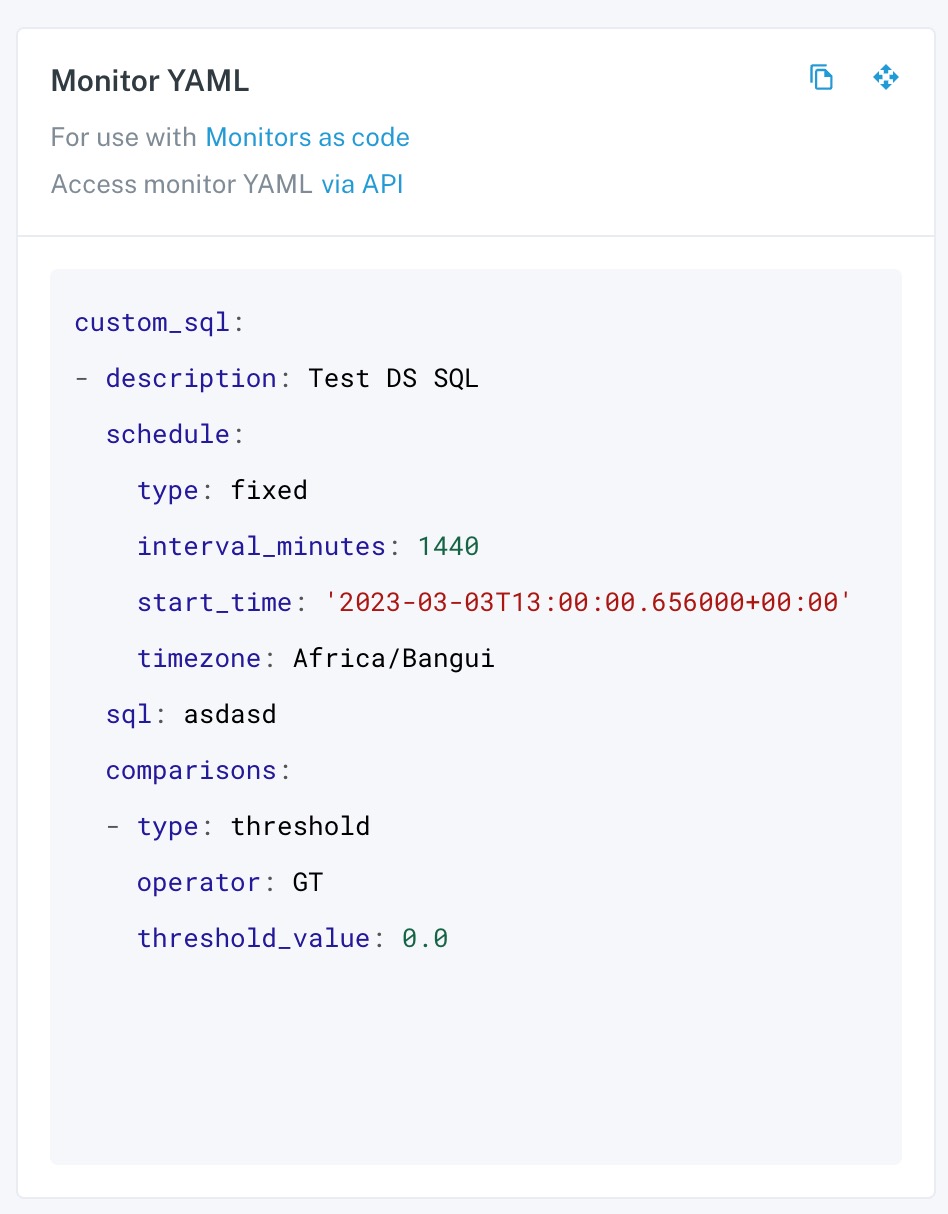

Shortcut to building monitor configuration

If you want to get a head start on setting up monitors as code, visit the monitor details page of an existing monitor where you can find the YAML definition for that monitor's configuration.

Below is a screenshot of the YAML definition for an existing monitor within Monte Carlo.

Developing and testing locally

Once the Monte Carlo project is set up with a montecarlo.yml and at least one monitor definition in a separate .yml file, you can use the Monte Carlo CLI to apply them.

To apply monitor configuration to MC run:

montecarlo monitors apply --namespace <namespace>

Note in the above command, a namespace parameter is supplied. Namespaces are required for all monitors configured via Monitors as Code as they make it easier to organize and manage monitors at scale. Namespaces can either be defined by passing a --namespace argument in the CLI command or defined within the .yml files (see above for more details).

The apply command behaves as follows:

- MC will search for all monitor configuration elements in the project, both in standalone files and embedded in DBT schema files. All monitor configuration elements will be concatenated into a single configuration template.

- MC will apply the configuration template to your MC account:

  - Any new monitors defined since the last apply will be created
  - Any previously defined monitors present in the current configuration template will be updated if any attributes have changed
  - Any previously defined monitors absent from the current configuration template will be deleted

Namespaces

You can think of namespaces like CloudFormation stack names: a logical separation of a collection of resources that you define. Monitors from different namespaces are isolated from each other.

Some examples of why this is useful:

- You have multiple people (or teams) managing monitors and don't want them to conflict with or override each other's configurations.

- You want to manage different groups of monitors in different pipelines (e.g. dbt models in CI/CD pipeline x and non-dbt models in CI/CD pipeline y).

Namespaces can also be defined within the montecarlo.yml file or within individual monitor definitions.

Namespaces are currently limited to 2000 resources. Support for this limit starts in CLI version 0.52.0, so please upgrade your CLI if you are running into timeouts while applying changes.

To delete (destroy) a namespace:

montecarlo monitors delete --namespace <namespace>

This will delete all monitors for a given namespace.

Dry Runs

The apply command also supports a --dry-run argument, which reports each operation the configuration update would perform. With this argument, planned changes are only shown, not applied.

Integrating into your CI/CD pipeline

Deploying monitors within a continuous integration pipeline is straightforward. Once changes are merged into your main production branch, configure your CI pipeline to install the montecarlodata CLI:

pip install montecarlodata

And run this command:

MCD_DEFAULT_API_ID=${MCD_DEFAULT_API_ID} \
MCD_DEFAULT_API_TOKEN=${MCD_DEFAULT_API_TOKEN} \
montecarlo monitors apply \
  --namespace ${MC_MONITORS_NAMESPACE} \
  --project-dir ${PROJECT_DIR} \
  --auto-yes

Skipping confirmations

The --auto-yes option disables any confirmation prompts shown by the command and should only be used in CI, where there is no interaction. Otherwise, we recommend omitting --auto-yes so you can review the changes before confirming them.

These environment variables need to be populated:

- MCD_DEFAULT_API_ID: Your Monte Carlo API ID
- MCD_DEFAULT_API_TOKEN: Your Monte Carlo API token
- MC_MONITORS_NAMESPACE: Namespace to apply the monitor configuration to
- PROJECT_DIR: Optional. If the montecarlo command is not run within your Monte Carlo project directory, you can specify the project directory here.

Example projects with CI/CD

For some examples of monitors as code projects with CI/CD set up, check out these repositories:

- Standalone Monte Carlo project

- Basic DBT project with monitors (Gitlab)

- Basic DBT project with monitors (Github)

Monitor configuration reference

The term labels is used to define the audiences for each monitor. labels and audiences are equivalent, but the term labels must be used in monitor config, while notification config uses the term audiences. See Notifications as Code for more info.

montecarlo:
  field_health:
    - table: <string> # required
      name: <string> # required
      resource: <string> # optional -- override default warehouse
      description: <string> # required
      notes: <string> # optional
      fields:
        - <string>
      selected_metrics: # optional -- empty to collect all metrics
        - <metric> # see below for allowed values
      use_important_fields: <bool> # optional -- by default, do not use important fields
      segmented_expressions:
        - <string> # can be a field or a SQL expression
      timestamp_field: <string> # optional
      timestamp_field_expression: <string> # optional
      where_condition: <string>
      lookback_days: <int> # optional
      aggregation_time_interval: <one of 'day' or 'hour'> # optional
      min_segment_size: <int> # optional -- by default, fetch all segment sizes
      schedule: # optional -- by default, fixed schedule with interval_minutes=720 (12h)
        type: <fixed, or dynamic> # required
        interval_minutes: <integer> # required if fixed
        start_time: <date as isoformatted string>
        timezone: <timezone> # optional - select regional timezone for daylight savings ex. America/Los_Angeles
      labels: # labels are equivalent to audiences
        - <string>
  dimension_tracking:
    - table: <string> # required
      name: <string> # required
      resource: <string> # optional -- override default warehouse
      description: <string> # required
      notes: <string> # optional
      field: <string> # required
      timestamp_field: <string> # optional
      timestamp_field_expression: <string> # optional
      where_condition: <string>
      lookback_days: <int> # optional
      aggregation_time_interval: <one of 'day' or 'hour'> # optional
      schedule: # optional -- by default, fixed schedule with interval_minutes=720 (12h)
        type: <fixed, or dynamic> # required
        interval_minutes: <integer> # required if fixed
        start_time: <date as isoformatted string>
        timezone: <timezone> # optional - select regional timezone for daylight savings ex. America/Los_Angeles
      labels: # labels are equivalent to audiences
        - <string>
  json_schema:
    - table: <string> # required
      name: <string> # required
      resource: <string> # optional -- override default warehouse
      description: <string> # required
      notes: <string> # optional
      field: <string> # required
      timestamp_field: <string>
      timestamp_field_expression: <string>
      where_condition: <string>
      schedule: # optional -- by default, fixed schedule with interval_minutes=720 (12h)
        type: <fixed, or dynamic> # required
        interval_minutes: <integer> # required if fixed
        start_time: <date as isoformatted string>
        timezone: <timezone> # optional - select regional timezone for daylight savings ex. America/Los_Angeles
      labels: # labels are equivalent to audiences
        - <string>
  custom_sql:
    - sql: <string> # required
      name: <string> # required
      resource: <string> # optional -- override default warehouse
      comparisons: <comparison> # required
      variables: <variable values>
      description: <string> # required
      notes: <string> # optional
      schedule:
        type: <string> # must be 'fixed' or 'manual'
        start_time: <date as isoformatted string>
        interval_minutes: <integer>
        interval_crontab:
          - <string>
        timezone: <timezone> # optional - select regional timezone for daylight savings ex. America/Los_Angeles
      event_rollup_count: <integer> # optional - enable to only send notifications every X incidents
      event_rollup_until_changed: <boolean> # optional - enable to send subsequent notifications if breach changes, default: false
      labels: # labels are equivalent to audiences
        - <string>
      severity: <string> # optional - only use SEV-0 through SEV-4 - custom values will be rejected
  field_quality:
    - table: <string> / tables: <list> # required
      name: <string> # required
      field: <string> / fields: <list> # required
      resource: <string> # optional -- override default warehouse
      metric_type: <metric_type> # required, see below
      comparisons: <comparison> # required, see below
      filters: # optional, rows to consider when collecting the metric
        - field: <string> # required
          operator: <string> # required, one of EQ, NEQ, LT, LTE, GT, GTE
          value: <string> # required
      description: <string> # required
      notes: <string> # optional
      schedule:
        type: <string> # must be 'fixed' or 'manual'
        start_time: <date as isoformatted string>
        interval_minutes: <integer>
        interval_crontab:
          - <string>
        timezone: <timezone> # optional - select regional timezone for daylight savings ex. America/Los_Angeles
      event_rollup_count: <integer> # optional - enable to only send notifications every X incidents
      event_rollup_until_changed: <boolean> # optional - enable to send subsequent notifications if breach changes, default: false
      labels: # labels are equivalent to audiences
        - <string>
      severity: <string> # optional - only use SEV-0 through SEV-4 - custom values will be rejected
  freshness:
    - table: <string> / tables: <list> # required
      name: <string> # required
      resource: <string> # optional -- override default warehouse
      freshness_threshold: <integer> # required
      description: <string>
      notes: <string> # optional
      schedule:
        type: fixed # must be fixed
        start_time: <date as isoformatted string>
        interval_minutes: <integer>
        interval_crontab:
          - <string>
        timezone: <timezone> # optional - select regional timezone for daylight savings ex. America/Los_Angeles
      event_rollup_count: <integer> # optional - enable to only send notifications every X incidents
      event_rollup_until_changed: <boolean> # optional - enable to send subsequent notifications if breach changes, default: false
      labels: # labels are equivalent to audiences
        - <string>
      severity: <string> # optional - only use SEV-0 through SEV-4 - custom values will be rejected
  volume:
    - table: <string> / tables: <list> # required
      name: <string> # required
      resource: <string> # optional -- override default warehouse
      comparisons: <comparison> # required
      volume_metric: <row_count or byte_count> # row_count by default
      description: <string>
      notes: <string> # optional
      schedule:
        type: fixed # must be fixed
        start_time: <date as isoformatted string>
        interval_minutes: <integer>
        interval_crontab:
          - <string>
        timezone: <timezone> # optional - select regional timezone for daylight savings ex. America/Los_Angeles
      event_rollup_count: <integer> # optional - enable to only send notifications every X incidents
      event_rollup_until_changed: <boolean> # optional - enable to send subsequent notifications if breach changes, default: false
      labels: # labels are equivalent to audiences
        - <string>
      severity: <string> # optional - only use SEV-0 through SEV-4 - custom values will be rejected
  comparison:
    - description: <string>
      name: <string> # required
      notes: <string>
      notify_rule_run_failure: <boolean>
      event_rollup_until_changed: <boolean>
      schedule:
        type: fixed # must be fixed
        interval_minutes: <integer>
        start_time: <date as isoformatted string>
        timezone: <timezone> # optional - select regional timezone for daylight savings ex. America/Los_Angeles
      query_result_type: <string> # required, one of LABELED_NUMERICS, ROW_COUNT, SINGLE_NUMERIC
      source_sql: <string>
      target_sql: <string>
      source_resource: <string>
      target_resource: <string>
      comparisons:
        - type: <string> # only accepts source_target_delta
          operator: <string> # required; must be GT, since the check is whether the absolute delta exceeds the threshold
          threshold_value: <float>
          is_threshold_relative: <boolean>
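The comparison entry in the reference above has no section of its own. As a sketch, assuming two hypothetical warehouses named bigquery_staging and bigquery_prod and hypothetical orders tables in each, a rule comparing their row counts might look like the following. It presumes is_threshold_relative: true treats threshold_value as a percentage, as it does for SQL rule comparisons.

```yaml
montecarlo:
  comparison:
    - name: orders_count_parity                 # hypothetical monitor name
      description: Staging and production order counts should match
      query_result_type: SINGLE_NUMERIC         # each query returns one number
      source_sql: select count(*) from project.staging.orders
      target_sql: select count(*) from project.prod.orders
      source_resource: bigquery_staging         # hypothetical warehouse name
      target_resource: bigquery_prod            # hypothetical warehouse name
      comparisons:
        - type: source_target_delta
          operator: GT                          # breach if the absolute delta exceeds the threshold
          threshold_value: 5
          is_threshold_relative: true           # 5 read as a percentage
      schedule:
        type: fixed
        interval_minutes: 1440                  # once a day
```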

Lookback Limits

Some monitors let you specify a longer lookback period (useful if the data in your table has historical timestamps), but you cannot pick a number larger than 7. For each day of lookback, an additional query is run against your table, so this limit is a safeguard against specifying a very large period, like 90 days, and then having 90 queries run against your warehouse each time the monitor runs. If you need help with these windows, please feel free to reach out to [email protected] or the chat bot in the lower right-hand corner.
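As an illustration of the limit, the sketch below sets lookback_days to the maximum of 7 on a dimension tracking monitor. The table and monitor names are hypothetical; timestamp_field is set because lookback_days is ignored without it.

```yaml
montecarlo:
  dimension_tracking:
    - name: order_status_distribution           # hypothetical monitor name
      description: Distribution of order_status values
      table: project:dataset.orders             # hypothetical table ID
      field: order_status
      timestamp_field: created                  # required for lookback_days to take effect
      lookback_days: 7                          # maximum allowed; each day adds a query per run
      aggregation_time_interval: day
      schedule:
        type: fixed
        interval_minutes: 720
```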

Tables

The table and tables fields passed in the config should contain valid full table IDs. If a table doesn't exist in the assets page, validation will fail. Sometimes the catalog service takes time to detect newer tables: you can wait for the tables to be detected and then create monitors for them, or (not recommended) apply the monitor config with the --create-non-ingested-tables option (update the montecarlo CLI to the latest version) to force-create the tables along with the monitor. These tables will be marked deleted if they are not detected later on.

Common properties

- name: Required for any new monitor. Older monitors created via Monitors as Code are the only exception, but adding a name to them is recommended as well.
- resource: Optional warehouse name, set here to override the default warehouse set in montecarlo.yml. For comparison rules, use source_resource and target_resource instead.
- description: Friendly description of the rule.
- notes: Additional context for the monitor.
- labels: Optional list of audiences associated with the monitor (labels are equivalent to audiences).
- severity: Optional; pre-sets the severity of incidents generated by this monitor. Only use SEV-0 through SEV-4, as custom values will be rejected.

Field Health Monitor

- table: MC global table ID (format <database>:<schema>.<table name>)
- fields: List of fields in the table to monitor. Optional; by default all fields are monitored.
- selected_metrics: List of metrics to collect over the selected fields. See the list of available metrics for full details. Only metrics that support ML thresholds are available, and metrics are only collected for compatible field types. Metrics are any of:
  - UNIQUE_RATE: % unique values
  - NULL_RATE: % null values
  - EMPTY_STRING_RATE: % empty strings
  - TEXT_ALL_SPACE_RATE: % all-whitespace text
  - NAN_RATE: % NaN values
  - TEXT_NULL_KEYWORD_RATE: % null keywords
  - NUMERIC_MEAN: Mean value
  - NUMERIC_MEDIAN: Median value
  - NUMERIC_MIN: Minimum value
  - NUMERIC_MAX: Maximum value
  - PERCENTILE_20: 20th percentile
  - PERCENTILE_40: 40th percentile
  - PERCENTILE_60: 60th percentile
  - PERCENTILE_80: 80th percentile
  - NUMERIC_QUANTILES: Grouped percentiles (min, p20, p40, p60, p80, max)
  - ZERO_RATE: % zero values
  - NEGATIVE_RATE: % negative values
  - NUMERIC_STDDEV: Standard deviation
  - TRUE_RATE: % true values
  - FALSE_RATE: % false values
  - TEXT_INT_RATE: % integer-formatted text
  - TEXT_NUMBER_RATE: % float-formatted text
  - TEXT_UUID_RATE: % UUID
  - TEXT_SSN_RATE: % SSN
  - TEXT_US_PHONE_RATE: % USA phone number
  - TEXT_US_STATE_CODE_RATE: % USA state code
  - TEXT_US_ZIP_CODE_RATE: % USA zip code
  - TEXT_EMAIL_ADDRESS_RATE: % email address
  - TEXT_TIMESTAMP_RATE: % timestamp-formatted text
  - FUTURE_TIMESTAMP_RATE: % timestamp in future
  - PAST_TIMESTAMP_RATE: % timestamp in past
  - UNIX_ZERO_RATE: % Unix time 0
- use_important_fields: Defaults to false. If true, the table's current important fields are used to build the monitor. You can use important fields and provide a specific list of fields at the same time.
- segmented_expressions: List of fields or SQL expressions used to segment the field (fields must contain exactly one field). Enables Monitoring by Dimension.
- timestamp_field: Timestamp field
- timestamp_field_expression: Arbitrary SQL expression to be used as the timestamp field, e.g. DATE(created). Set either timestamp_field or timestamp_field_expression, or neither, but not both.
- where_condition: SQL snippet of a where condition to add to the field health query
- lookback_days: Lookback period in days. Default: 3. Optional, and ignored unless timestamp_field is set.
- aggregation_time_interval: Aggregation bucket time interval, either hour (default) or day. Optional, and ignored unless timestamp_field is set.
- min_segment_size: Minimum number of rows for a segment to be fetched. Defaults to 1. Can be used to avoid cardinality limits for datasets with a long tail of less relevant segments.
- sensitivity_level: Sensitivity level; one of high, medium, or low.
- schedule:
  - type: One of fixed or dynamic
  - interval_minutes: For fixed schedules, how frequently to run the monitor
  - start_time: When to start the schedule. If unspecified, fixed schedules start sometime within the next hour.
  - timezone: Optional; select a regional timezone for daylight saving time, e.g. America/Los_Angeles
- connection_name: Specify the connection (also known as query-engine) to use. Obtain the warehouse UUID via the getUser API as described here, then obtain the names of the connections in the warehouse via the getWarehouseConnections API as described here. Use ["sql_query"] as the jobType parameter in the getWarehouseConnections API call.

The monitored fields cannot exceed 300 fields, including important fields and manually specified fields. If they do, we'll attempt to keep all the manually specified fields and as many important fields as possible (ordered by importance score) until we reach 300 fields.
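Putting several of these options together, here is a sketch of a field health monitor. It assumes a hypothetical orders table with a numeric amount column, a country column for segmentation, and a created timestamp; because segmented_expressions is set, fields lists exactly one field.

```yaml
montecarlo:
  field_health:
    - name: orders_amount_health                # hypothetical monitor name
      description: Health of order amounts, segmented by country
      table: project:dataset.orders             # hypothetical table ID
      fields:
        - amount                                # exactly one field, required for segmentation
      selected_metrics:
        - NULL_RATE
        - NUMERIC_MEAN
        - NEGATIVE_RATE
      segmented_expressions:
        - country                               # a field; a SQL expression also works
      min_segment_size: 100                     # skip segments with fewer rows
      timestamp_field: created
      lookback_days: 3
      aggregation_time_interval: day
      schedule:
        type: fixed
        interval_minutes: 720
```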

Dimension Tracking Monitor

- table: MC global table ID (format <database>:<schema>.<table name>)
- field: Field in the table to monitor, or a valid SQL expression that returns the row's dimension value as a string
- timestamp_field: Timestamp field
- timestamp_field_expression: Arbitrary SQL expression to be used as the timestamp field, e.g. DATE(created). Set either timestamp_field or timestamp_field_expression, or neither, but not both.
- where_condition: SQL snippet of a where condition to add to the monitor's query
- lookback_days: Lookback period in days. Default: 3. Optional, and ignored unless timestamp_field is set.
- aggregation_time_interval: Aggregation bucket time interval, either hour (default) or day. Optional, and ignored unless timestamp_field is set.
- schedule:
  - type: One of fixed or dynamic
  - interval_minutes: For fixed schedules, how frequently to run the monitor
  - start_time: When to start the schedule. If unspecified, fixed schedules start sometime within the next hour.
  - timezone: Optional; select a regional timezone for daylight saving time, e.g. America/Los_Angeles
- connection_name: Specify the connection (also known as query-engine) to use. Obtain the warehouse UUID via the getUser API as described here, then obtain the names of the connections in the warehouse via the getWarehouseConnections API as described here. Use ["sql_query"] as the jobType parameter in the getWarehouseConnections API call.

JSON Schema Monitor

- table: MC global table ID (format <database>:<schema>.<table name>)
- field: Field in the table to monitor
- timestamp_field: Timestamp field
- timestamp_field_expression: Arbitrary SQL expression to be used as the timestamp field, e.g. DATE(created). Set either timestamp_field or timestamp_field_expression, or neither, but not both.
- where_condition: SQL snippet of a where condition to add to the monitor's query
- schedule:
  - type: One of fixed or dynamic
  - interval_minutes: For fixed schedules, how frequently to run the monitor
  - start_time: When to start the schedule. If unspecified, fixed schedules start sometime within the next hour.
  - timezone: Optional; select a regional timezone for daylight saving time, e.g. America/Los_Angeles
- connection_name: Specify the connection (also known as query-engine) to use. Obtain the warehouse UUID via the getUser API as described here, then obtain the names of the connections in the warehouse via the getWarehouseConnections API as described here. Use ["json_schema"] as the jobType parameter in the getWarehouseConnections API call.
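A sketch of a JSON schema monitor, assuming a hypothetical events table whose payload column holds JSON and whose event_type column narrows the rows scanned through where_condition.

```yaml
montecarlo:
  json_schema:
    - name: purchase_payload_schema             # hypothetical monitor name
      description: Track schema changes in purchase event payloads
      table: project:dataset.events             # hypothetical table ID
      field: payload                            # hypothetical JSON column
      timestamp_field: created
      where_condition: event_type = 'purchase'  # hypothetical filter column and value
      schedule:
        type: fixed
        interval_minutes: 360
```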

SQL Rule

- sql: SQL of the rule
- query_result_type: Optional; can be set to SINGLE_NUMERIC to make the rule use a value-based threshold
- sampling_sql: Optional custom SQL query to be run on breach (results will be displayed in Incident IQ to help with investigation)
- comparisons: See comparisons below
- variables: See variables below
- schedule:
  - type: Can be fixed or manual. manual is for SQL rules implemented as part of processes like Circuit Breakers.
  - interval_minutes: How frequently to run the monitor (in minutes).
  - interval_crontab: How frequently to run the monitor (using a list of CRON expressions, check example below).
  - start_time: When to start the schedule. If unspecified, fixed schedules start sometime within the next hour.
  - timezone: Optional; select a regional timezone for daylight saving time, e.g. America/Los_Angeles
- event_rollup_count: Optional; a Reduce Noise option to only send notifications every X incidents
- event_rollup_until_changed: Optional; a Reduce Noise option to send subsequent notifications if the breach changes
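The following sketch shows a SQL rule scheduled with interval_crontab instead of interval_minutes. It assumes a hypothetical BigQuery-style orders table; query_result_type: SINGLE_NUMERIC is set so the threshold applies to the value the query returns.

```yaml
montecarlo:
  custom_sql:
    - name: duplicate_order_ids                 # hypothetical monitor name
      description: Number of order_id values that appear more than once
      sql: |
        select count(*) from (
          select order_id
          from project.dataset.orders
          group by order_id
          having count(*) > 1
        )
      query_result_type: SINGLE_NUMERIC         # value-based threshold
      comparisons:
        - type: threshold
          operator: GT                          # breach if any duplicates exist
          threshold_value: 0
      schedule:
        type: fixed
        interval_crontab:
          - "0 6 * * *"                         # 06:00 daily
        timezone: America/Los_Angeles
      event_rollup_count: 3                     # only notify every 3 incidents
```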

SQL Rule comparisons

comparisons are definitions of breaches, not expected return values. This is where you define the logic for when to be alerted about anomalous behavior in your monitor. For example, if you create a custom SQL rule with:

type: threshold
operator: GT
threshold_value: 100

When Monte Carlo runs your monitor and the result is greater than 100, it fires an alert to any routes configured to be notified about breaches of this monitor.

- type: threshold, dynamic_threshold, or change. If threshold, threshold_value is an absolute value. If dynamic_threshold, no threshold is needed (it is determined automatically). If change, threshold_value is the change from the historical baseline.
- operator: One of EQ, NEQ, GT, GTE, LT, LTE. The comparison operator: =, ≠, >, ≥, <, ≤ respectively.
- threshold_value: Threshold value
- baseline_agg_function: If type = change, the aggregation function used to aggregate data points to calculate the historical baseline. One of AVG, MAX, MIN.
- baseline_interval_minutes: If type = change, the time interval in minutes (backwards from the current time) to aggregate over to calculate the historical baseline.
- is_threshold_relative: If type = change, whether threshold_value is a relative or an absolute threshold. is_threshold_relative: true treats threshold_value as a percentage; is_threshold_relative: false treats it as an absolute number, such as an actual count of rows.
- connection_name: Specify the connection (also known as query-engine) to use. Obtain the warehouse UUID via the getUser API as described here, then obtain the names of the connections in the warehouse via the getWarehouseConnections API as described here. Use ["sql_query"] as the jobType parameter in the getWarehouseConnections API call.
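A sketch of a change comparison, assuming a hypothetical BigQuery-style orders table. It is intended to breach when today's order count exceeds its 7-day average baseline by more than 20%; how negative changes are treated is not specified above, so check the resulting monitor's behavior before relying on it.

```yaml
montecarlo:
  custom_sql:
    - name: daily_order_count_change            # hypothetical monitor name
      description: Today's order count compared with the 7-day baseline
      sql: |
        select count(*) from project.dataset.orders
        where date(created) = current_date()
      query_result_type: SINGLE_NUMERIC
      comparisons:
        - type: change
          operator: GT
          threshold_value: 20                   # a percentage, since is_threshold_relative is true
          baseline_agg_function: AVG            # average the historical data points
          baseline_interval_minutes: 10080      # 7 days x 24 h x 60 min
          is_threshold_relative: true
      schedule:
        type: fixed
        interval_minutes: 1440                  # once a day
```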

SQL Rule variables

When defining custom SQL statements, you can use variables to execute the same statement for different combinations of values. Variables are written as {{variable_name}}. You can then define one or more values for each variable, and all combinations will be tested.

Here is an example defining the same statement for several tables and conditions (2 tables × 2 conditions = 4 statements will be executed):

custom_sql:
  - sql: |
      select foo from {{table}} where {{cond}}
    variables:
      table:
        - project:dataset.table1
        - project:dataset.table2
      cond:
        - col1 > 1
        - col2 > 2

Field Quality Rule

- table: MC global table ID (format <database>:<schema>.<table name>)
- tables: Instead of table, you can use tables to define a list of tables (see the example with multiple tables: https://docs.getmontecarlo.com/docs/monitors-as-code#example-with-multiple-tables).
- field: Field name
- fields: Instead of field, you can use fields to define a list of fields. All the fields must be present in the selected tables and, if multiple tables are provided, have the same type.
- metric_type: The metric to collect. Certain metrics are only available on specific data types:
  - Numeric: APPROX_DISTINCTNESS, APPROX_DISTINCT_COUNT, NULL_RATE, NULL_COUNT, NON_NULL_COUNT, NUMERIC_MEAN, NUMERIC_MEDIAN, NUMERIC_MIN, NUMERIC_MAX, PERCENTILE_20, PERCENTILE_40, PERCENTILE_60, PERCENTILE_80, ZERO_RATE, ZERO_COUNT, NEGATIVE_RATE, NEGATIVE_COUNT, NUMERIC_STDDEV, SUM
  - Text: APPROX_DISTINCTNESS, APPROX_DISTINCT_COUNT, NULL_RATE, NULL_COUNT, NON_NULL_COUNT, EMPTY_STRING_RATE, EMPTY_STRING_COUNT, TEXT_ALL_SPACES_RATE, TEXT_ALL_SPACES_COUNT, TEXT_NULL_KEYWORD_RATE, TEXT_NULL_KEYWORD_COUNT, TEXT_MAX_LENGTH, TEXT_MIN_LENGTH, TEXT_MEAN_LENGTH, TEXT_STD_LENGTH, TEXT_INT_RATE, TEXT_NOT_INT_COUNT, TEXT_NUMBER_RATE, TEXT_NOT_NUMBER_COUNT, TEXT_UUID_RATE, TEXT_NOT_UUID_COUNT, TEXT_SSN_RATE, TEXT_NOT_SSN_COUNT, TEXT_US_PHONE_RATE, TEXT_NOT_US_PHONE_COUNT, TEXT_US_STATE_CODE_RATE, TEXT_NOT_US_STATE_CODE_COUNT, TEXT_US_ZIP_CODE_RATE, TEXT_NOT_US_ZIP_CODE_COUNT, TEXT_EMAIL_ADDRESS_RATE, TEXT_NOT_EMAIL_ADDRESS_COUNT, TEXT_TIMESTAMP_RATE, TEXT_NOT_TIMESTAMP_COUNT
  - Time: APPROX_DISTINCTNESS, APPROX_DISTINCT_COUNT, NULL_RATE, NULL_COUNT, NON_NULL_COUNT, FUTURE_TIMESTAMP_RATE, FUTURE_TIMESTAMP_COUNT, PAST_TIMESTAMP_RATE, PAST_TIMESTAMP_COUNT, UNIX_ZERO_RATE, UNIX_ZERO_COUNT
  - Date: APPROX_DISTINCTNESS, APPROX_DISTINCT_COUNT, NULL_RATE, NULL_COUNT, NON_NULL_COUNT, FUTURE_TIMESTAMP_RATE, FUTURE_TIMESTAMP_COUNT, PAST_TIMESTAMP_RATE, PAST_TIMESTAMP_COUNT, UNIX_ZERO_RATE, UNIX_ZERO_COUNT
  - Boolean: APPROX_DISTINCTNESS, APPROX_DISTINCT_COUNT, NULL_RATE, NULL_COUNT, NON_NULL_COUNT, TRUE_RATE, TRUE_COUNT, FALSE_RATE, FALSE_COUNT
- comparisons: See comparisons below
- filters: See filters below
- schedule:
  - type: Can be fixed or manual. manual is for rules used as part of processes like Circuit Breakers.
  - interval_minutes: How frequently to run the monitor (in minutes).
  - interval_crontab: How frequently to run the monitor (using a list of CRON expressions, check example below).
  - start_time: When to start the schedule. If unspecified, fixed schedules start sometime within the next hour.
  - timezone: Optional; select a regional timezone for daylight saving time, e.g. America/Los_Angeles
- event_rollup_count: Optional; a Reduce Noise option to only send notifications every X incidents
- event_rollup_until_changed: Optional; a Reduce Noise option to send subsequent notifications if the breach changes
- connection_name: Specify the connection (also known as query-engine) to use. Obtain the warehouse UUID via the getUser API as described here, then obtain the names of the connections in the warehouse via the getWarehouseConnections API as described here. Use ["sql_query"] as the jobType parameter in the getWarehouseConnections API call.

Field Quality Rule comparisons

- type: threshold
- operator: One of EQ, NEQ, GT, GTE, LT, LTE. The comparison operator: =, ≠, >, ≥, <, ≤ respectively.
- threshold_value: Threshold value

Field Quality Rule filters

- field: The field name
- operator: One of EQ, NEQ, GT, GTE, LT, LTE. The comparison operator: =, ≠, >, ≥, <, ≤ respectively.
- value: The value to filter on
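Combining the rule, its comparisons, and its filters, here is a sketch that counts missing tracking numbers on shipped orders only. The table, field names, and filter value are hypothetical, and comparisons is written as a list, as in the comparison entry of the reference.

```yaml
montecarlo:
  field_quality:
    - name: shipped_orders_missing_tracking     # hypothetical monitor name
      description: Shipped orders without a tracking number
      table: project:dataset.orders             # hypothetical table ID
      field: tracking_number                    # hypothetical text field
      metric_type: NULL_COUNT                   # available for text fields
      filters:                                  # only consider shipped orders
        - field: order_status
          operator: EQ
          value: shipped
      comparisons:
        - type: threshold
          operator: GT                          # breach if any nulls are found
          threshold_value: 0
      schedule:
        type: fixed
        interval_minutes: 720
```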

Freshness Rule

table: MC global table ID (format<database>:<schema>.<table name>)tables: Instead oftable, can also usetablesto define a list of tables (check example with Getting Started multiple tables below](https://docs.getmontecarlo.com/docs/monitors-as-code#example-with-multiple-tables)).freshness_threshold: Freshness breach threshold in minutesscheduletype: Must befixedinterval_minutes: How frequently to run the monitor (in minutes).interval_crontab: How frequently to run the monitor (using a list of CRON expressions, check example below).start_time: When to start the schedule. If unspecified, for fixed schedules, then start sometime within the next hour.timezone: Optional - select regional timezone for daylight savings ex. America/Los_Angeles

- event_rollup_count: Optional. A Reduce Noise option that sends a notification only once every X incidents.
- event_rollup_until_changed: Optional. A Reduce Noise option that sends subsequent notifications only if the breach changes.
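For illustration, here is a sketch of a freshness rule that is checked only on weekday mornings in a regional timezone and suppresses repeat notifications. The rule name is hypothetical, and event_rollup_until_changed is assumed to take a boolean.

```yaml
montecarlo:
  freshness:
    - name: freshness_weekday_mornings     # hypothetical name
      description: Test rule
      table: project:dataset.table_name
      freshness_threshold: 120              # breach threshold, in minutes
      schedule:
        type: fixed                         # freshness rules require a fixed schedule
        interval_crontab:
          - "0 8 * * MON-FRI"               # 08:00 on weekdays
        start_time: "2021-07-27T19:00:00"
        timezone: America/Los_Angeles       # regional timezone, so daylight saving time is respected
      event_rollup_until_changed: true      # assumption: boolean flag
```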

Volume Rule

- table: MC global table ID (format <database>:<schema>.<table name>).
- tables: Instead of table, you can use tables to define a list of tables (see the example with multiple tables below).
- volume_metric: Must be total_row_count or total_byte_count. Defines which volume metric to monitor.
- comparisons: See comparisons below.
- schedule:
  - type: Must be fixed.
  - interval_minutes: How frequently to run the monitor, in minutes.
  - interval_crontab: How frequently to run the monitor, as a list of CRON expressions (see the example below).
  - start_time: When to start the schedule. If unspecified for a fixed schedule, the monitor starts sometime within the next hour.
  - timezone: Optional. Regional timezone, so that daylight saving time is taken into account, e.g. America/Los_Angeles.

- event_rollup_count: Optional. A Reduce Noise option that sends a notification only once every X incidents.
- event_rollup_until_changed: Optional. A Reduce Noise option that sends subsequent notifications only if the breach changes.

Volume Rule comparisons

- type: Either absolute_volume or growth_volume.

If absolute_volume:

- operator: One of EQ, GTE, LTE, for =, ≥, ≤ respectively.
- threshold_lookback_minutes: If operator is EQ, how far back (in minutes) to look for a value to compare with the current value.
- threshold_value: If operator is GTE or LTE, the threshold value.
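The Example section below does not include a volume rule, so here is a minimal sketch of one using an absolute_volume comparison. It assumes the rule type is declared under a volume key, by analogy with the freshness and field_quality keys; verify that key name before relying on it. The rule name and threshold are illustrative.

```yaml
montecarlo:
  volume:                              # assumption: key name for Volume Rules
    - name: volume_row_count_1
      description: Test rule
      table: project:dataset.table_name
      volume_metric: total_row_count   # or total_byte_count
      comparisons:
        - type: absolute_volume
          operator: LTE                # EQ, GTE, or LTE
          threshold_value: 1000        # compared against total_row_count
      schedule:
        type: fixed                    # volume rules require a fixed schedule
        interval_minutes: 60
        start_time: "2021-07-27T19:00:00"
```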

If growth_volume:

- operator: One of EQ, GT, GTE, LT, LTE, for =, >, ≥, <, ≤ respectively.
- baseline_agg_function: The aggregation function used to aggregate data points into the historical baseline. One of AVG, MAX, MIN.
- number_of_agg_periods: The number of periods to use in the aggregate comparison.
- baseline_interval_minutes: The length of each aggregation period, in minutes.
- min_buffer_value / max_buffer_value: The lower / upper bound buffer used to adjust the alert threshold.
- min_buffer_modifier_type / max_buffer_modifier_type: The modifier type of the min / max buffer. Either METRIC (absolute value) or PERCENTAGE.
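A growth_volume comparison could look like the sketch below, which would replace the comparisons list of a volume rule. Read against the definitions above, it builds the baseline as the average (AVG) over 7 periods of 1440 minutes (one day) each, with a 10% buffer on both the lower and upper bound. The numbers are illustrative only.

```yaml
comparisons:
  - type: growth_volume
    operator: GT
    baseline_agg_function: AVG            # AVG, MAX, or MIN
    number_of_agg_periods: 7              # aggregate over 7 periods...
    baseline_interval_minutes: 1440       # ...of one day each
    min_buffer_value: 10
    min_buffer_modifier_type: PERCENTAGE
    max_buffer_value: 10
    max_buffer_modifier_type: PERCENTAGE  # METRIC would treat 10 as an absolute value
```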

Comparison Rule

- comparisons: See comparisons below.
- query_result_type: One of:
  - SINGLE_NUMERIC: the rule uses a value-based threshold.
  - LABELED_NUMERICS: the rule uses a label-based threshold.
  - ROW_COUNT
- source_sql: SQL for the source.
- target_sql: SQL for the target, compared with the source.
- source_resource: Required. Name of the source warehouse.
- target_resource: Required. Name of the target warehouse.
- schedule:
  - type: Must be fixed.
  - interval_minutes: How frequently to run the monitor, in minutes.
  - interval_crontab: How frequently to run the monitor, as a list of CRON expressions (see the example below).
  - start_time: When to start the schedule. If unspecified for a fixed schedule, the monitor starts sometime within the next hour.
  - timezone: Optional. Regional timezone, so that daylight saving time is taken into account, e.g. America/Los_Angeles.

- event_rollup_count: Optional. A Reduce Noise option that sends a notification only once every X incidents.
- event_rollup_until_changed: Optional. A Reduce Noise option that sends subsequent notifications only if the breach changes.
- source_connection_name: The source connection (also known as query engine) to use. First obtain the warehouse UUID via the getUser API as described here, then obtain the names of the connections in that warehouse via the getWarehouseConnections API as described here, passing ["sql_query"] as the jobType parameter.
- target_connection_name: The target connection (also known as query engine) to use. Obtained the same way as source_connection_name.
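The comparison rule in the Example section uses LABELED_NUMERICS. As a further sketch, here is a rule that compares a single number from each side and pins both queries to specific connections. The table names in the SQL and both connection names are hypothetical; real connection names come from getWarehouseConnections for each warehouse.

```yaml
montecarlo:
  comparison:
    - name: comparison_rule_order_counts
      description: Compare order counts between lake and bigquery
      schedule:
        type: fixed
        interval_minutes: 1440
        start_time: "2021-07-27T19:00:00"
        timezone: UTC
      query_result_type: SINGLE_NUMERIC                   # value-based threshold
      source_sql: select count(*) from sales.orders       # hypothetical table
      target_sql: select count(*) from analytics.orders   # hypothetical table
      source_resource: lake
      target_resource: bigquery
      source_connection_name: lake_sql_engine             # hypothetical connection name
      target_connection_name: bigquery_sql_engine         # hypothetical connection name
      comparisons:
        - type: source_target_delta
          operator: GT
          threshold_value: 0            # any difference between the two counts breaches
          is_threshold_relative: false
```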

Comparison Rule comparisons

- type: Accepts only source_target_delta.
- operator: Accepts only GT. The rule checks whether the absolute delta is greater than threshold_value and, if so, raises an error.
- threshold_value: Maximum acceptable delta between the source and target SQL results.
- is_threshold_relative: Whether threshold_value is a relative or an absolute threshold. With is_threshold_relative: true, threshold_value is treated as a percentage; with is_threshold_relative: false, it is treated as an actual count.
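To contrast the two threshold modes, the fragment below sets is_threshold_relative to true, so threshold_value: 5.0 is read as a percentage rather than a count. It would replace the comparisons list of a comparison rule; the value 5.0 is illustrative.

```yaml
comparisons:
  - type: source_target_delta    # the only accepted type
    operator: GT                 # the only accepted operator
    threshold_value: 5.0         # 5 percent, because the threshold is relative
    is_threshold_relative: true  # false would treat 5.0 as an absolute count
```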

Example

montecarlo:
  field_health:
    - name: field_health_1
      description: Test monitor
      table: project:dataset.table_name
      timestamp_field: created
      schedule:
        type: dynamic
      labels: # labels are equivalent to audiences
        - label_name1
      selected_metrics:
        - NULL_RATE
    - description: Test monitor
      table: project:dataset.table_name
      timestamp_field: created
      fields:
        - field_name
      segmented_expressions:
        - segmented_expression
      schedule:
        type: dynamic
  dimension_tracking:
    - name: dimension_tracking_1
      description: Test monitor
      table: project:dataset.table_name
      timestamp_field: created
      field: order_status
      labels: # labels are equivalent to audiences
        - label_name2
  custom_sql:
    - name: custom_sql_1
      description: Test rule
      sql: |
        select foo from project.dataset.my_table
      comparisons:
        - type: threshold
          operator: GT
          threshold_value: 0
      schedule:
        type: fixed
        interval_minutes: 60
        start_time: "2021-07-27T19:00:00"
      severity: SEV-1
  field_quality:
    - name: field_quality_1
      description: Test rule
      table: project:dataset.my_table
      field: my_field
      metric_type: NULL_RATE
      comparisons:
        - type: threshold
          operator: GT
          threshold_value: 0
      schedule:
        type: fixed
        interval_minutes: 60
        start_time: "2021-07-27T19:00:00"
      severity: SEV-1
  freshness:
    - name: freshness_1
      table: project:dataset.table_name
      freshness_threshold: 30
      schedule:
        type: fixed
        interval_minutes: 30
        start_time: "2021-07-27T19:00:00"
  comparison:
    - description: Rule 1
      name: comparison_rule_1
      schedule:
        type: fixed
        interval_minutes: 60
        start_time: '2020-08-01T01:00:00+00:00'
        timezone: UTC
      query_result_type: LABELED_NUMERICS
      source_sql: select * from t1_id;
      target_sql: select * from t2_id;
      source_resource: lake
      target_resource: bigquery
      comparisons:
        - type: source_target_delta
          operator: GT
          threshold_value: 2.0
          is_threshold_relative: false

Example with multiple tables

montecarlo:
  freshness:
    - name: freshness_1
      description: Test rule
      tables:
        - project:dataset.table_name1
        - project:dataset.table_name2
      freshness_threshold: 30
      schedule:
        type: fixed
        interval_minutes: 30
        start_time: "2021-07-27T19:00:00"

Example with CRON expressions

montecarlo:
  custom_sql:
    - name: custom_sql_1
      description: Test rule
      sql: |
        select foo from project.dataset.my_table
      comparisons:
        - type: threshold
          operator: GT
          threshold_value: 0
      schedule:
        type: fixed
        interval_crontab:
          - "0 10,16 * * MON-FRI"
          - "0 12 * * SAT-SUN"
        start_time: "2021-07-27T19:00:00"
