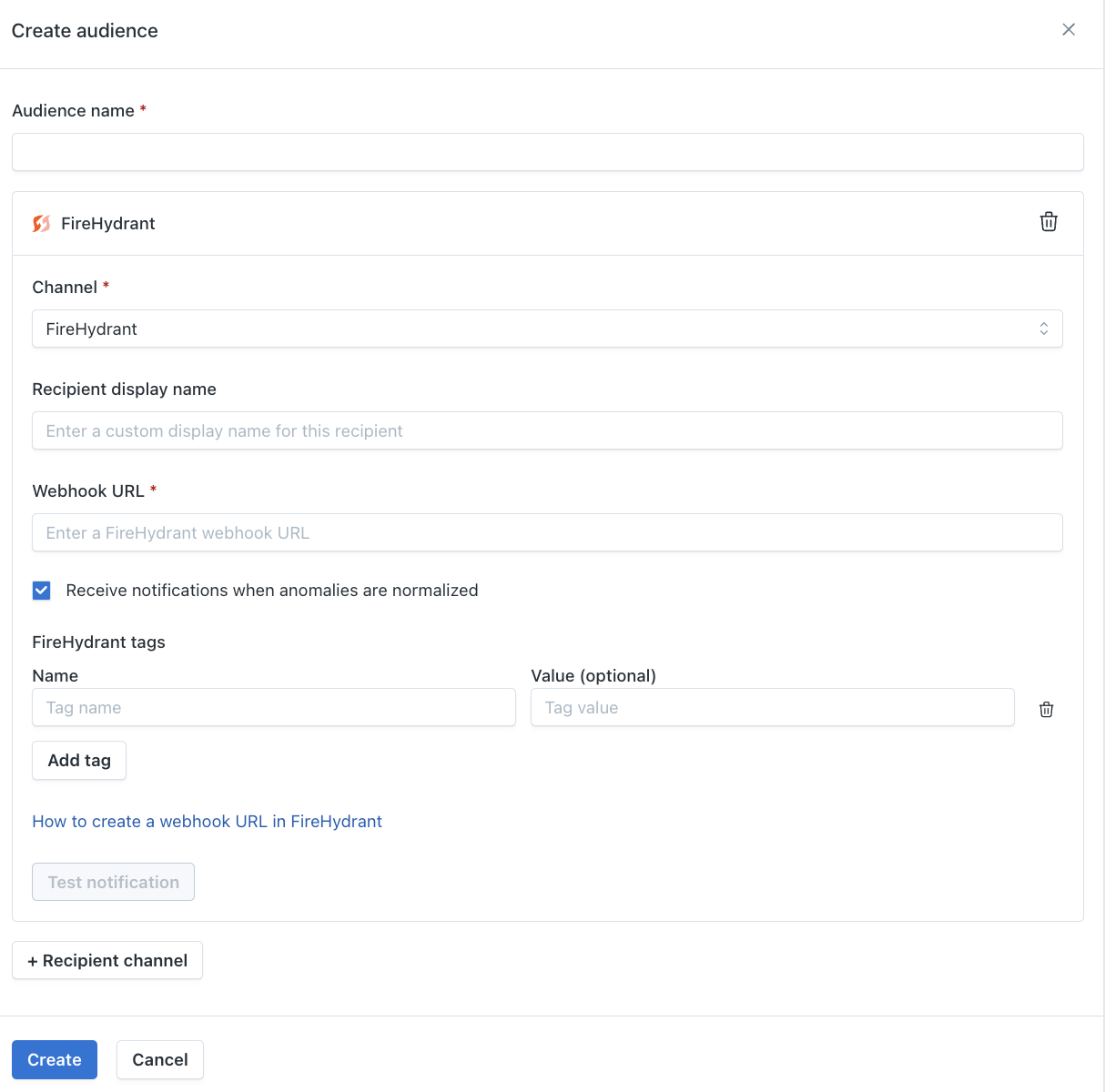

FireHydrant is now supported in Notifications-as-Code (NaC)!

You can define FireHydrant as a notification channel in your NaC config, tie it to an audience, and assign that audience to any monitor in Monitors-as-Code (MaC).
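The channel, audience, and monitor chain is a layer of indirection: a monitor names audiences, and each audience lists the channels it notifies. The minimal Python sketch below models only that lookup. The classes `Channel`, `Audience`, and `Monitor` and the function `channels_for` are hypothetical and do not reflect the actual NaC or MaC YAML schema.

```python
from dataclasses import dataclass, field

# Hypothetical, simplified model of channel -> audience -> monitor.
# Illustrative only; this is NOT the NaC/MaC configuration schema.

@dataclass(frozen=True)
class Channel:
    kind: str   # e.g. "firehydrant"
    name: str

@dataclass
class Audience:
    name: str
    channels: list[Channel] = field(default_factory=list)

@dataclass
class Monitor:
    name: str
    audiences: list[str] = field(default_factory=list)  # audience names

def channels_for(monitor: Monitor, audiences: dict[str, Audience]) -> list[Channel]:
    """Resolve every channel a monitor notifies by following its audiences."""
    resolved: list[Channel] = []
    for audience_name in monitor.audiences:
        for channel in audiences[audience_name].channels:
            if channel not in resolved:  # skip channels shared by several audiences
                resolved.append(channel)
    return resolved

fh = Channel(kind="firehydrant", name="fh-data-oncall")
audiences = {"data-oncall": Audience("data-oncall", [fh])}
monitor = Monitor("orders_freshness", audiences=["data-oncall"])
print(channels_for(monitor, audiences))
```

Because monitors reference the audience rather than the channel, adding or swapping a FireHydrant channel happens in one place instead of on every monitor.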

Learn more here:

You can now define FireHydrant Incident Tags on any FireHydrant notification channel within an Audience. Configure key/value or key-only tags, and they are automatically included on every alert sent through that channel.

Incident tags flow through automatically, ensuring incidents arrive in FireHydrant with the right routing and categorization without any extra manual steps.
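As a rough illustration, the minimal Python sketch below attaches a channel's tags to each outgoing alert. The tag representation (a key with an optional value), the `key:value` rendering, and the helpers `format_tags` and `build_alert_payload` are assumptions for illustration, not Monte Carlo's or FireHydrant's actual payload format.

```python
from typing import Optional

# Hypothetical: each tag is a key plus an optional value (None means key-only).
Tag = tuple[str, Optional[str]]

def format_tags(tags: list[Tag]) -> list[str]:
    """Render key/value tags as "key:value" and key-only tags as "key"."""
    return [key if value is None else f"{key}:{value}" for key, value in tags]

def build_alert_payload(alert: dict, channel_tags: list[Tag]) -> dict:
    """Return a copy of the alert with the channel's tags attached."""
    payload = dict(alert)  # copy so the original alert is not mutated
    payload["tags"] = format_tags(channel_tags)
    return payload

channel_tags = [("team", "data-platform"), ("customer-impacting", None)]
alert = {"title": "Freshness anomaly on orders"}
print(build_alert_payload(alert, channel_tags))
```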

Learn more here: https://docs.getmontecarlo.com/docs/firehydrant#firehydrant-incident-tags

Agent monitors can now be cloned: duplicate an existing monitor's configuration and point it at a different span.

When you're monitoring multiple spans with similar setups, cloning saves you from repetitive configuration and lets you scale monitor coverage across your agents faster.
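Conceptually, cloning copies a monitor's configuration and changes only the target span. The minimal Python sketch below uses `dataclasses.replace` to produce that copy. `AgentMonitorConfig`, its fields, and `clone_for_span` are hypothetical; real agent monitor configurations have their own schema.

```python
from dataclasses import dataclass, replace

@dataclass(frozen=True)
class AgentMonitorConfig:
    # Hypothetical fields for illustration only.
    span: str
    evaluation: str
    sample_rate: float
    audience: str

def clone_for_span(config: AgentMonitorConfig, span: str) -> AgentMonitorConfig:
    """Return a copy of config that targets a different span; all other settings carry over."""
    return replace(config, span=span)

original = AgentMonitorConfig(
    span="checkout-agent.plan", evaluation="relevance", sample_rate=0.1, audience="ml-oncall"
)
clone = clone_for_span(original, "checkout-agent.respond")
print(clone)
```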

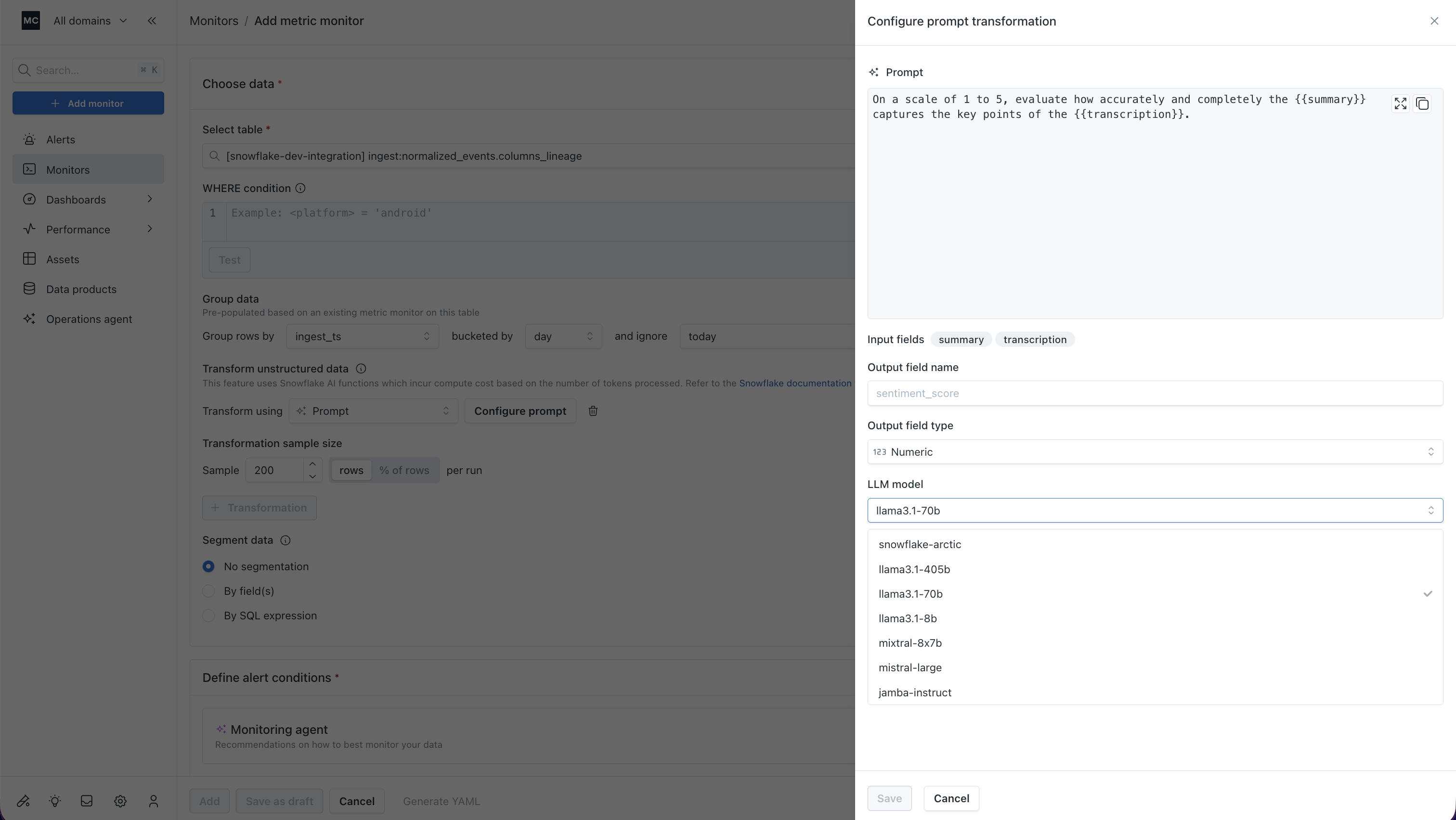

Metric monitors now support custom prompt-based evaluations on your tables — no external pipelines, no SQL hacks.

Write a prompt and plug in any table fields as variables (e.g., "Is {{SUMMARY}} an accurate summary of {{TRANSCRIPTION}}?"), pick your output type (string, numeric, or boolean), choose your LLM, and configure sampling. Monte Carlo handles the rest.
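The `{{FIELD}}` syntax works like a template: for each sampled row, every placeholder is replaced with that row's column value before the prompt is sent to the LLM. The minimal Python sketch below shows only that substitution step, assuming each row arrives as a dict keyed by column name. `render_prompt` is a hypothetical helper, not Monte Carlo's internal implementation, and it leaves out sampling and the LLM call.

```python
import re

# Matches {{FIELD}} placeholders such as {{SUMMARY}} or {{TRANSCRIPTION}}.
PLACEHOLDER = re.compile(r"\{\{\s*([A-Za-z_][A-Za-z0-9_]*)\s*\}\}")

def render_prompt(template: str, row: dict[str, object]) -> str:
    """Fill each {{FIELD}} placeholder with the matching column value from one row."""
    def substitute(match: re.Match) -> str:
        field = match.group(1)
        if field not in row:
            raise KeyError(f"template references unknown field {field!r}")
        return str(row[field])
    return PLACEHOLDER.sub(substitute, template)

template = "Is {{SUMMARY}} an accurate summary of {{TRANSCRIPTION}}?"
row = {"SUMMARY": "Customer asked for a refund.", "TRANSCRIPTION": "Hi, I'd like my money back..."}
print(render_prompt(template, row))
```

Raising on an unknown field surfaces a typo in the prompt immediately instead of sending a literal `{{...}}` to the model.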

Whether your warehouse holds AI-generated summaries, extracted features, model scores, or anything in between — you have a native way to catch bad outputs in your tables before they become bad decisions. Available for Snowflake, Databricks, and BigQuery.

Learn more here: https://docs.getmontecarlo.com/docs/metric-monitors#configuring-a-prompt-transformation

Propose monitors, collaborate on the right configuration, and review before anything goes live — now available for Multi-table Metric Monitors.

We recently launched Draft Monitors to give teams more control over the monitor creation process. Viewers can propose monitors and share them with teammates for feedback. Editors, Admins and Owners can review the configuration, make changes if needed, and enable them when the time is right.
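The review gate described above can be sketched as a simple role check: any role may propose a draft, but only Editors, Admins, and Owners may enable it. This minimal Python sketch is hypothetical (`Role`, `DraftMonitor`, and `ENABLING_ROLES` are illustrative names). It deliberately omits draft editing and sharing, whose exact permissions are not spelled out here.

```python
from enum import Enum

class Role(Enum):
    VIEWER = "viewer"
    EDITOR = "editor"
    ADMIN = "admin"
    OWNER = "owner"

# Roles that, per the description above, can review and enable a draft.
ENABLING_ROLES = {Role.EDITOR, Role.ADMIN, Role.OWNER}

class DraftMonitor:
    """Hypothetical model of a proposed monitor awaiting review."""

    def __init__(self, config: dict, proposed_by: Role):
        # Any role, including Viewer, may propose a draft.
        self.config = dict(config)
        self.proposed_by = proposed_by
        self.enabled = False

    def enable(self, role: Role) -> None:
        """Turn the draft into a live monitor; only reviewer roles may do this."""
        if role not in ENABLING_ROLES:
            raise PermissionError(f"{role.value} cannot enable monitors")
        self.enabled = True

draft = DraftMonitor({"type": "multi_table_metric"}, proposed_by=Role.VIEWER)
draft.enable(Role.EDITOR)
print(draft.enabled)  # True
```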

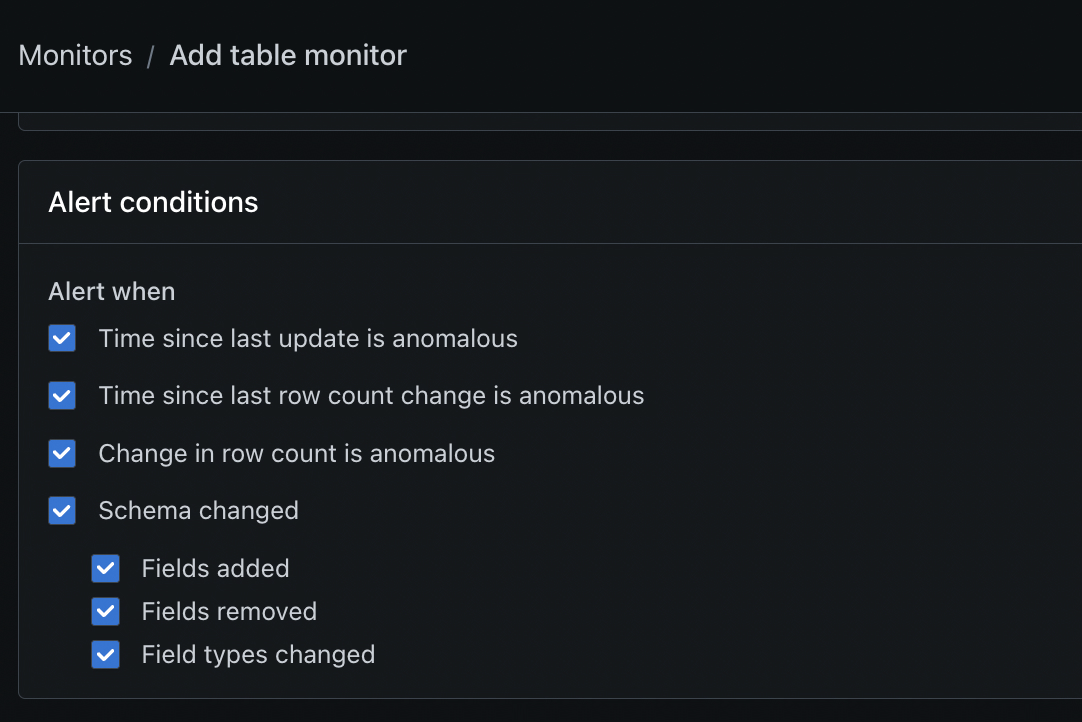

You can now configure Table Monitors to alert on specific schema change types rather than all schema changes at once! The supported types are:

Previously, any schema change would trigger an alert. Now you can tune your alerts to the changes that actually matter to your team, cutting through the noise and making it easier to act fast.

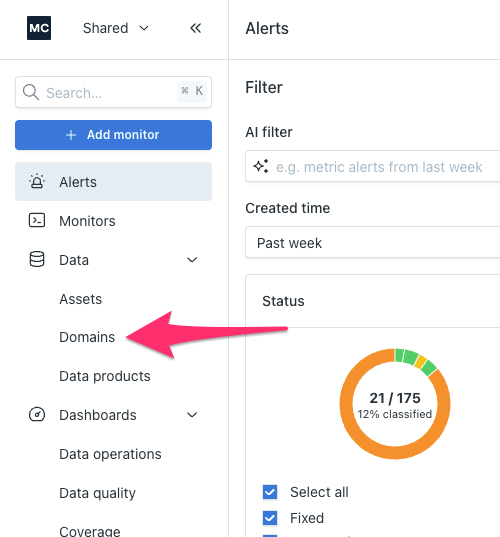

In the coming months, changes to Domains will be released to address pain points in Monitor ownership and messy reporting. The options for how to configure Domains will remain unchanged, but the UI will shift and the relationship between Monitors and Domains will become more explicit.

These changes have already been deployed to some newer customers and will be rolled out to all customers later. Stay tuned for more communication.

Changes:

These changes address a couple of longstanding challenges in Monte Carlo:

Note that the options for how to configure Domains will remain unchanged. Rather, the change is in the relationship between Domains, Monitors, and Alerts created by those Monitors.

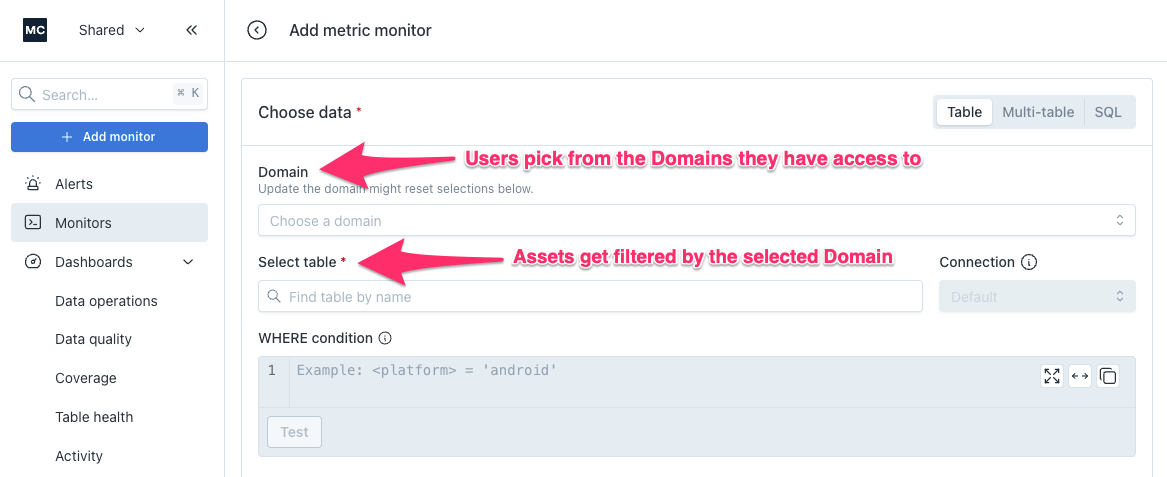

When creating a Monitor, the top of the form will ask the user to select a Domain. The available Domains will be filtered to the ones they have access to. Asset selection for that Monitor will then be filtered by the selected Domain.
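The two-step filtering in the new Monitor form can be sketched as follows: first limit Domains to those the user can access, then limit assets to the chosen Domain. This is a minimal Python sketch under assumed names (`Domain`, `selectable_domains`, `selectable_assets`) and an assumed representation of a Domain as a set of asset identifiers. It is not how Monte Carlo stores Domains.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Domain:
    name: str
    assets: frozenset[str]  # hypothetical: fully qualified table names in this domain

def selectable_domains(domains: list[Domain], user_access: set[str]) -> list[Domain]:
    """Step 1: show only the Domains the user has access to."""
    return [d for d in domains if d.name in user_access]

def selectable_assets(domain: Domain, all_assets: list[str]) -> list[str]:
    """Step 2: once a Domain is chosen, offer only assets that belong to it."""
    return [a for a in all_assets if a in domain.assets]

finance = Domain("finance", frozenset({"prod.finance.invoices"}))
marketing = Domain("marketing", frozenset({"prod.mkt.campaigns"}))
all_assets = ["prod.finance.invoices", "prod.mkt.campaigns"]

domains = selectable_domains([finance, marketing], user_access={"finance"})
print([d.name for d in domains])                  # ['finance']
print(selectable_assets(domains[0], all_assets))  # ['prod.finance.invoices']
```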

Domain management will move out of Settings and into its own tab on the left side-nav.

Improved analytics and dashboarding will be available, showing key metrics by Domain.

Monte Carlo now supports Network Access Controls for the API and other cloud endpoints.

With Network Access Controls, you can restrict access to specific IP addresses or CIDR ranges across both global and regional endpoints (for example, api.getmontecarlo.com and api.eu1.getmontecarlo.com).

When enabled, traffic originating from IP addresses outside the defined ranges is blocked. Controls can be configured globally or on a per-endpoint basis, providing flexibility for different access needs. For example, you can:

Learn more here: https://docs.getmontecarlo.com/docs/network-access-control
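The core check behind an IP allowlist is whether a client address falls inside any permitted CIDR range. The minimal Python sketch below uses the standard `ipaddress` module to perform that check. The policy layout is an assumption for illustration: a global list plus per-endpoint lists, where an endpoint-specific list replaces the global one. `is_allowed` is a hypothetical helper, not part of Monte Carlo's configuration, and the addresses come from the reserved documentation ranges.

```python
import ipaddress

# Hypothetical policy: a global allowlist plus optional per-endpoint overrides.
GLOBAL_ALLOWLIST = ["203.0.113.0/24"]
PER_ENDPOINT_ALLOWLIST = {
    "api.eu1.getmontecarlo.com": ["198.51.100.7/32"],
}

def is_allowed(client_ip: str, endpoint: str) -> bool:
    """Return True if client_ip falls inside an allowed CIDR range for endpoint."""
    # Assumption: an endpoint-specific list, if present, replaces the global one.
    ranges = PER_ENDPOINT_ALLOWLIST.get(endpoint, GLOBAL_ALLOWLIST)
    address = ipaddress.ip_address(client_ip)
    # An IPv6 address is simply "not in" an IPv4 network, so mixed versions return False.
    return any(address in ipaddress.ip_network(cidr) for cidr in ranges)

print(is_allowed("203.0.113.42", "api.getmontecarlo.com"))      # True
print(is_allowed("192.0.2.10", "api.getmontecarlo.com"))        # False
print(is_allowed("198.51.100.7", "api.eu1.getmontecarlo.com"))  # True
```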

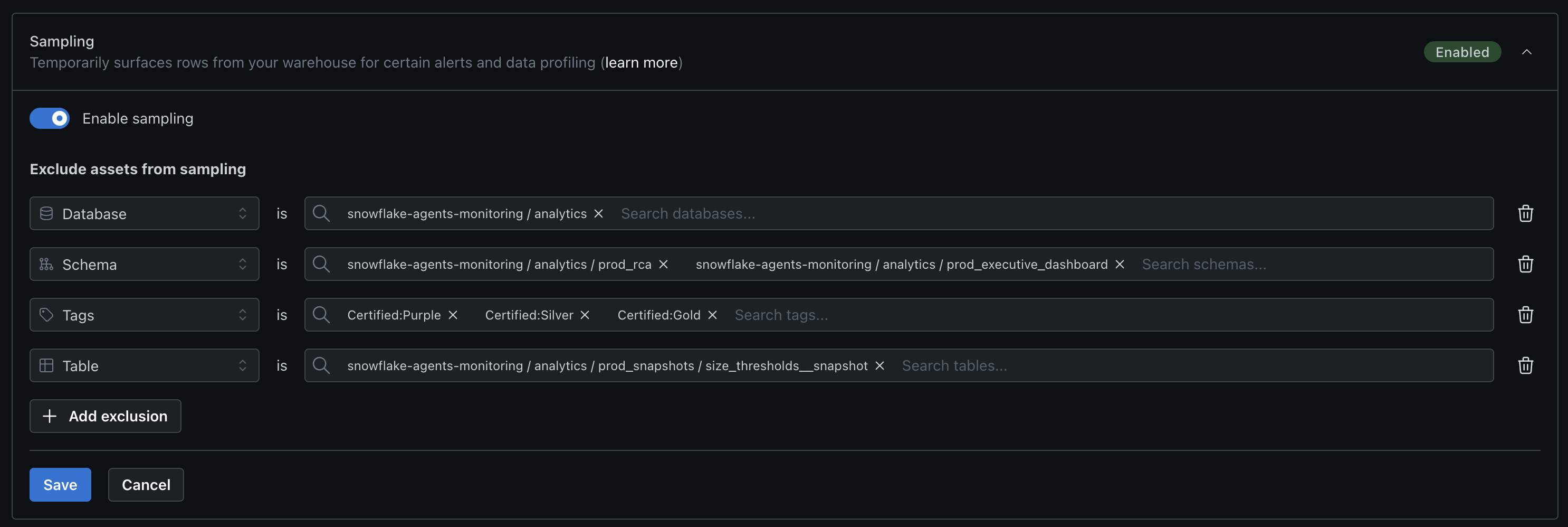

Monte Carlo now supports more granular configuration of data sampling at the integration level.

In addition to disabling sampling for an entire integration, you can now disable sampling for specific databases, schemas, tables, or tagged assets — while keeping sampling enabled elsewhere. These controls are fully available in the UI.

For more advanced use cases, the API also supports inclusion-based configurations, allowing you to explicitly define which assets are eligible for sampling.
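To show how exclusion-based and inclusion-based rules differ for a single asset, here is a minimal Python sketch. `Asset`, `SamplingRules`, `matches`, and `sampling_enabled` are hypothetical names, and the rule shape is an assumption, not the Monte Carlo API. In exclude mode, an asset is sampled unless a database, schema, table, or tag rule covers it. In include mode, only covered assets are sampled.

```python
from dataclasses import dataclass, field

@dataclass(frozen=True)
class Asset:
    database: str
    schema: str
    table: str
    tags: frozenset[str] = frozenset()

@dataclass
class SamplingRules:
    # Hypothetical rule shape.
    # "exclude": sample everything except matching assets.
    # "include": sample only matching assets.
    mode: str = "exclude"
    databases: set[str] = field(default_factory=set)
    schemas: set[tuple[str, str]] = field(default_factory=set)          # (database, schema)
    tables: set[tuple[str, str, str]] = field(default_factory=set)      # (database, schema, table)
    tags: set[str] = field(default_factory=set)

def matches(asset: Asset, rules: SamplingRules) -> bool:
    """True if any database, schema, table, or tag rule covers the asset."""
    return (
        asset.database in rules.databases
        or (asset.database, asset.schema) in rules.schemas
        or (asset.database, asset.schema, asset.table) in rules.tables
        or bool(asset.tags & rules.tags)
    )

def sampling_enabled(asset: Asset, rules: SamplingRules) -> bool:
    hit = matches(asset, rules)
    return hit if rules.mode == "include" else not hit

pii = Asset("prod", "crm", "contacts", frozenset({"pii"}))
orders = Asset("prod", "sales", "orders")
exclude_pii = SamplingRules(mode="exclude", tags={"pii"})
print(sampling_enabled(pii, exclude_pii), sampling_enabled(orders, exclude_pii))  # False True
```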

Learn more here: https://docs.getmontecarlo.com/docs/configure-data-sampling

Monte Carlo UI Example

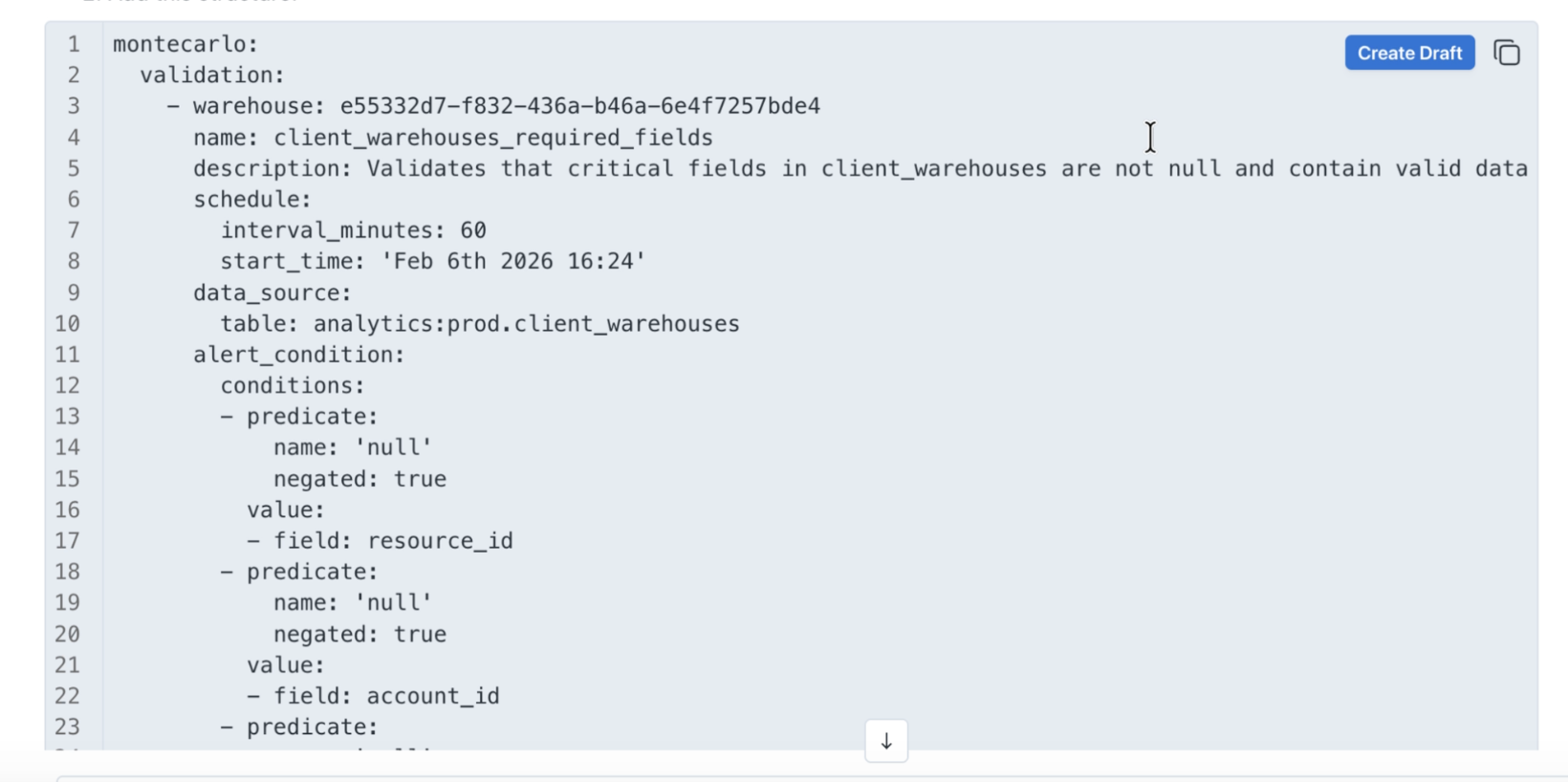

Turn Operations Agent YAML response into a Draft monitor in one click!

Previously, when the Operations Agent suggested a monitor, it returned a YAML definition. Now, you can create a Draft Monitor directly from the chat, with no copy-paste required.

What’s new: