Several weeks ago we shipped a big set of improvements to freshness monitoring: specifically, support for week-over-week seasonality. Some people call these "bimodal" thresholds, often because they expect weekends to have a different threshold than weekdays (two modes).

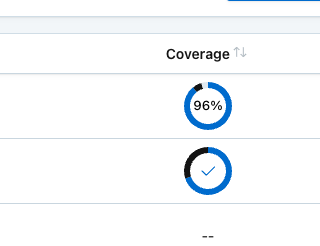

We've now released the same week-over-week seasonality support for the "Time since last row count change" monitor. This is one of the key monitors we deploy “out of the box” on a table, so the impact is broad. Specific changes:

- More accurate weekend recognition. We dynamically detect weekends wherever they fall in the week (e.g. Fri/Sat, or when there’s just a single day “off”).

- Tighter thresholds in the “active” period of the week. 110K tables across our customer base now have thresholds at least 25% tighter than before.

- Thoughtful thresholds in the “off” part of the week, so if the table doesn’t start back up on Monday, we’ll alert.
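
To make the idea concrete, here is a simplified Python sketch of bimodal thresholds. It is an illustration only, not the production implementation: the function names, the `ratio` cutoff for spotting "off" days, and the `margin` multiplier are all assumptions chosen for the example. It finds weekdays with unusually few updates, then learns one maximum-gap threshold for the active part of the week and a separate one for gaps that touch an off day.

```python
from collections import Counter
from datetime import datetime, timedelta
from statistics import median


def detect_off_days(update_times, ratio=0.2):
    """Return weekdays (0=Mon .. 6=Sun) with far fewer updates than a typical day.

    `ratio` is an assumed cutoff: a day is "off" if it has fewer than
    ratio * (median updates per weekday).
    """
    counts = Counter(t.weekday() for t in update_times)
    per_day = [counts.get(d, 0) for d in range(7)]
    typical = median(per_day)
    return {d for d, c in enumerate(per_day) if c < ratio * typical}


def touches_off_day(start, end, off_days):
    """True if any calendar day from start to end (inclusive) is an off day."""
    day = start.date()
    while day <= end.date():
        if day.weekday() in off_days:
            return True
        day += timedelta(days=1)
    return False


def fit_thresholds(update_times, margin=1.2):
    """Learn (off_days, active_threshold, off_threshold) from past update times.

    Each threshold is the longest gap seen in that mode times `margin`
    (an assumed safety factor), or None if that mode was never observed.
    """
    times = sorted(update_times)
    off_days = detect_off_days(times)
    active_gaps, off_gaps = [], []
    for prev, cur in zip(times, times[1:]):
        bucket = off_gaps if touches_off_day(prev, cur, off_days) else active_gaps
        bucket.append(cur - prev)
    active = max(active_gaps) * margin if active_gaps else None
    off = max(off_gaps) * margin if off_gaps else None
    return off_days, active, off


def is_stale(last_update, now, off_days, active, off):
    """Pick the threshold for the current mode and compare it to the elapsed time."""
    use_off = off is not None and touches_off_day(last_update, now, off_days)
    limit = off if use_off else active
    return limit is not None and now - last_update > limit
```

For a table that updates hourly on weekdays only, this sketch would learn a tight threshold of a little over an hour for the active period and a much longer one that covers the weekend. A missed update on a Wednesday then trips the tight threshold quickly, while a quiet Saturday does not alert, and a table that stays silent well into Monday does.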

In the future, we’ll also add bimodality for day/night behaviors.