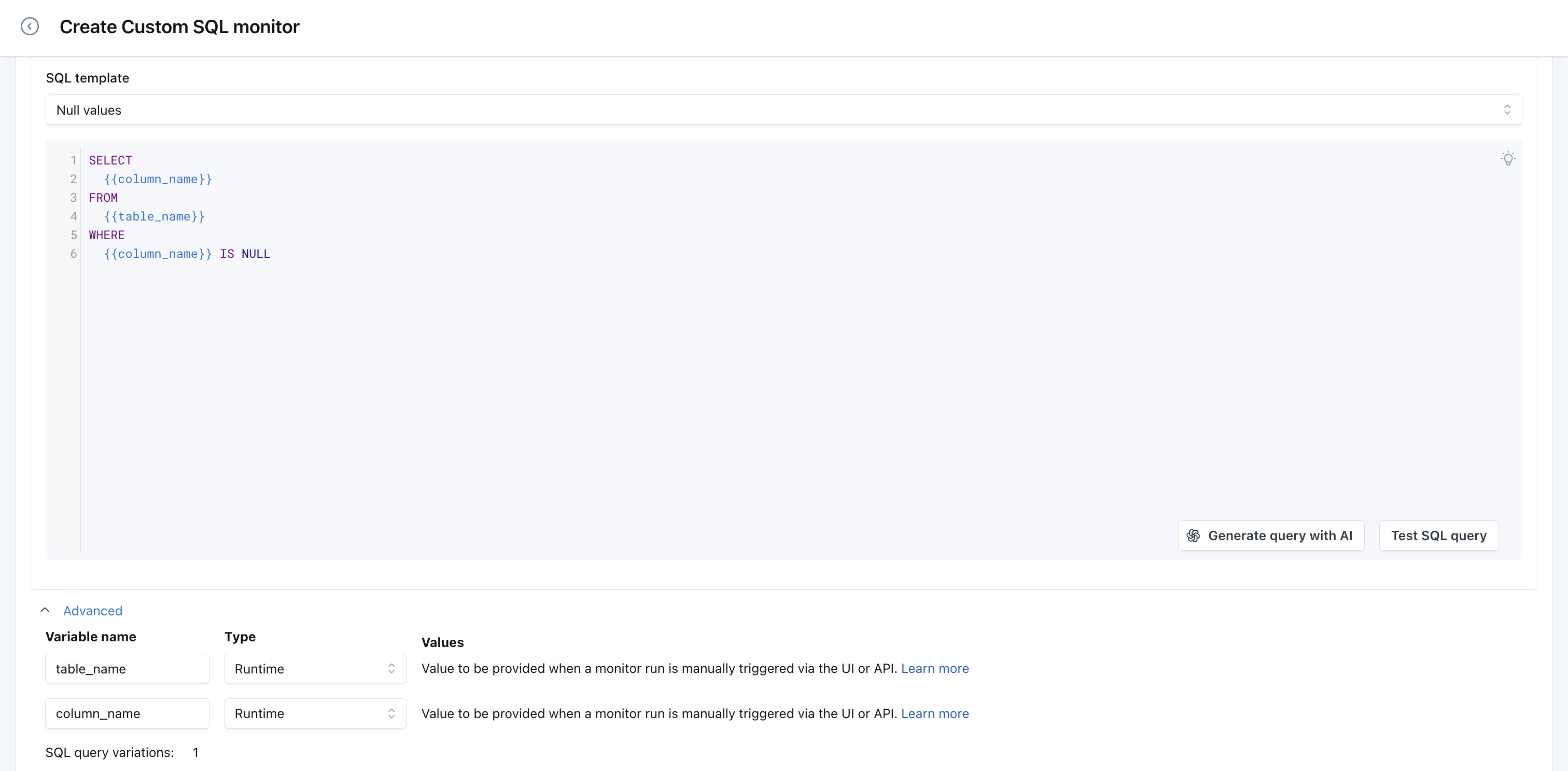

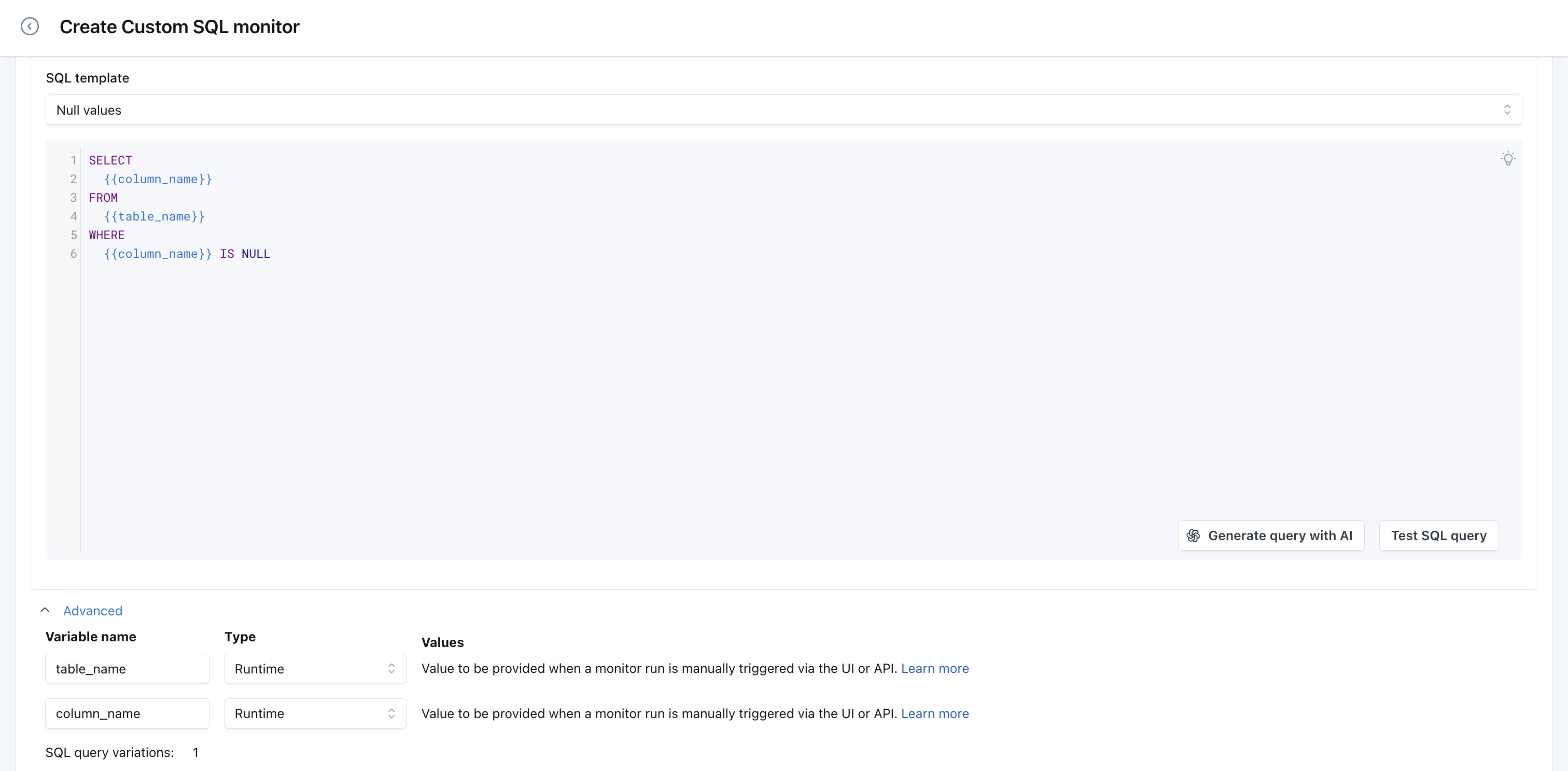

Setting variable values for Custom SQL monitors is more flexible now. While it has been possible to define static values for a while, it is now possible to set variable values at runtime via both the UI and API.

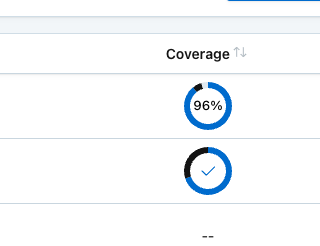

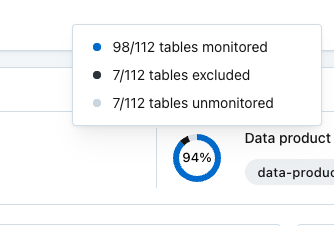

Excluded tables are now called out separately in the upstream coverage charts across the UI. This helps users distinguish upstream tables that are explicitly excluded from monitoring by a rule in the Usage UI from those that have no matching rule to include or exclude them. These new details affect upstream coverage charts in several places across our UI.

Volume monitors are now available for Oracle DB. Users get automated volume metrics and anomaly detection, and can also set explicit thresholds for volume monitors. Updated docs here.

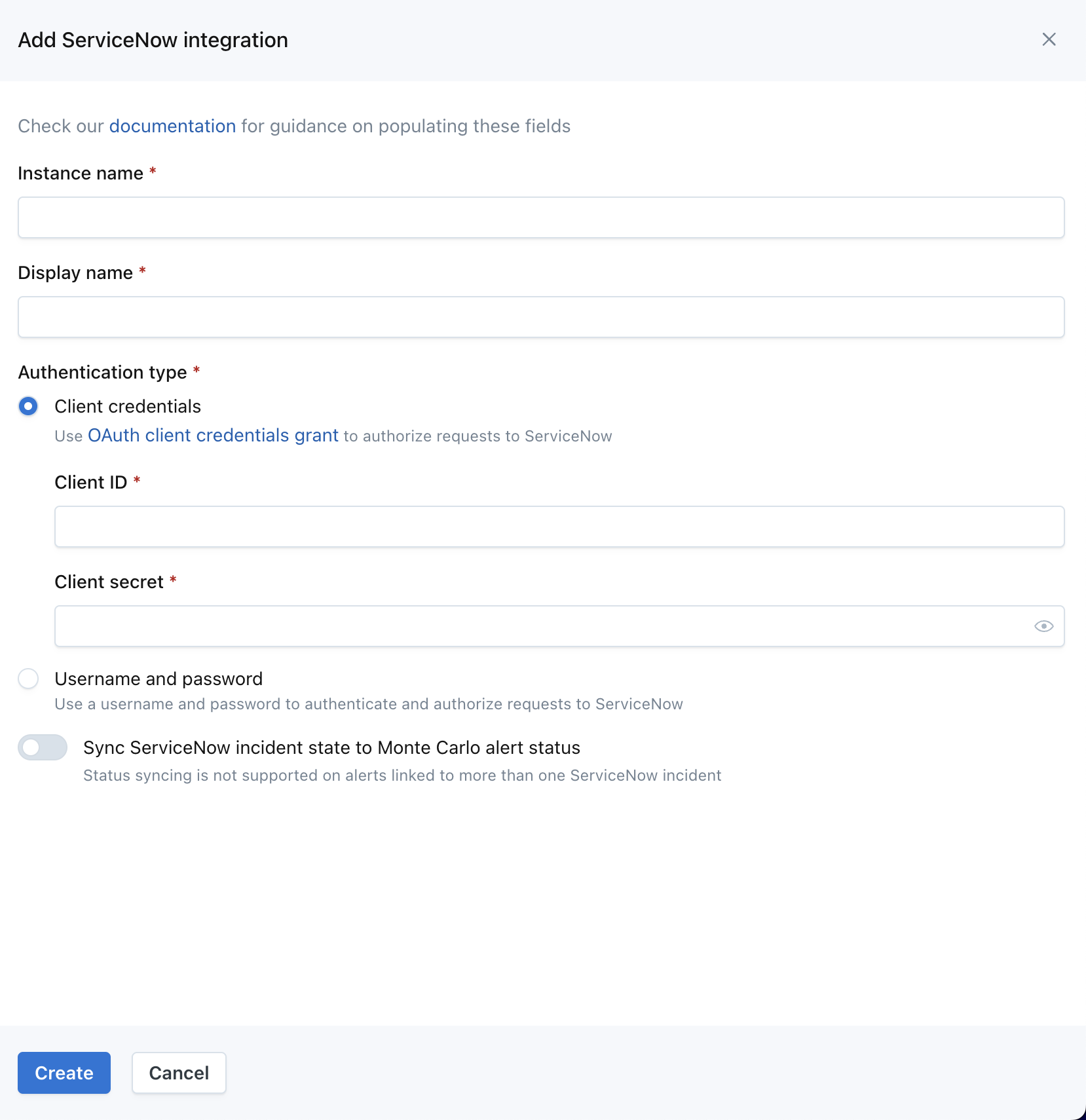

The ServiceNow integration now supports authenticating via OAuth.

Three key improvements were just shipped for the Monte Carlo <> Jira integration:

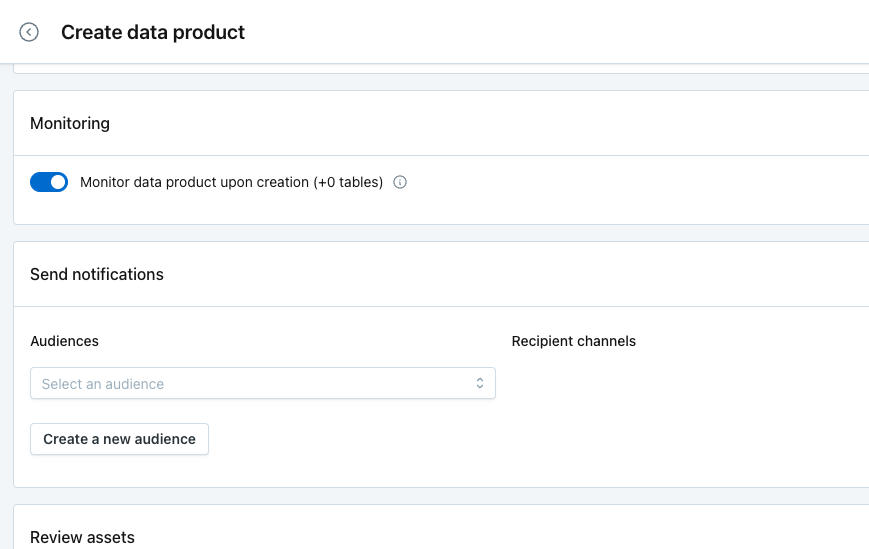

We've made it easier to select which Audiences a Data Product's out-of-the-box detectors should route to, right from the creation and edit flow.

Now, when you create a Data Product, you can select an existing Audience (or create a new one) to automatically receive alerts from that Data Product. Behind the scenes, this creates a Notification Rule under "Other notifications" that routes "Affected data" matching the Data Product's Asset tag to that Audience.

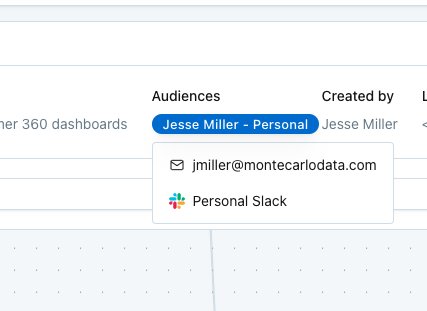

Additionally, on the Data Product Coverage Tab you can see which Audiences are associated with this Data Product.

Note: We only show Audiences that have a Notification Rule specifying only that Data Product's Asset tag and no other conditions. For example, if the Notification Rule said "Include Data Product tag but exclude this other tag", the Audience would not show up in this section.
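The display rule in the note above can be illustrated with a small filter. This is a hypothetical sketch, not Monte Carlo's implementation: the `NotificationRule` shape, its field names, and the tag string `dp:revenue` are all assumptions made for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class NotificationRule:
    # Hypothetical model of a Notification Rule; field names are assumptions.
    audience: str
    include_tags: list[str] = field(default_factory=list)
    exclude_tags: list[str] = field(default_factory=list)
    other_conditions: list[str] = field(default_factory=list)


def audiences_for_data_product(rules, product_tag):
    """Return Audiences whose rule targets ONLY the given Data Product tag."""
    result = []
    for rule in rules:
        # The rule must include exactly this one tag...
        only_this_tag = rule.include_tags == [product_tag]
        # ...and carry no exclusions or extra conditions of any kind.
        no_other_conditions = not rule.exclude_tags and not rule.other_conditions
        if only_this_tag and no_other_conditions and rule.audience not in result:
            result.append(rule.audience)
    return result


rules = [
    NotificationRule("finance-oncall", include_tags=["dp:revenue"]),
    # Excluding another tag counts as an extra condition, so this one is hidden.
    NotificationRule("analytics", include_tags=["dp:revenue"], exclude_tags=["pii"]),
]
print(audiences_for_data_product(rules, "dp:revenue"))
```

With these two rules, only `finance-oncall` is returned, mirroring the "Include Data Product tag but exclude this other tag" example in the note.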

Custom monitor failure notifications caused by misconfigurations used to be limited to 1 notification per week. We've raised the limit to up to 1 notification per day to reduce the risk of a monitor failing silently, while still being mindful of alert fatigue.
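One way to picture the new limit is a per-monitor throttle with a one-day window. This is a simplified, hypothetical sketch: the `FailureNotifier` class and its methods are illustrative assumptions, not how Monte Carlo actually schedules these notifications.

```python
from datetime import datetime, timedelta

# New limit: at most one failure notification per monitor per day
# (the previous limit would correspond to timedelta(weeks=1)).
NOTIFY_INTERVAL = timedelta(days=1)


class FailureNotifier:
    """Hypothetical per-monitor throttle for misconfiguration alerts."""

    def __init__(self, interval=NOTIFY_INTERVAL):
        self.interval = interval
        self.last_sent = {}  # monitor_id -> time the last notification went out

    def should_notify(self, monitor_id, now):
        last = self.last_sent.get(monitor_id)
        # Send if this monitor has never notified, or the window has elapsed.
        if last is None or now - last >= self.interval:
            self.last_sent[monitor_id] = now
            return True
        return False


notifier = FailureNotifier()
t0 = datetime(2024, 1, 1, 9, 0)
print(notifier.should_notify("monitor-1", t0))                       # first failure
print(notifier.should_notify("monitor-1", t0 + timedelta(hours=12)))  # same day
print(notifier.should_notify("monitor-1", t0 + timedelta(days=1)))    # next day
```

Keying the throttle per monitor means one noisy monitor cannot suppress notifications for another.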

MC can now surface Hex projects in lineage to help users evaluate the impact of data issues on those projects.

We’ve improved our automated freshness monitor to better handle weekly patterns. Customers sometimes refer to these as “bimodal” thresholds, meaning one threshold during weekdays and a different threshold during weekends. They are valuable for tables that update during the week but not at all (or less frequently) on the weekend.

Specific changes:

In the future, we’ll extend these improvements to “Time since last row count change”, and also add bimodality for day/night behaviors.
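To make the idea of a “bimodal” threshold concrete, here is a minimal sketch that picks a weekday or weekend threshold based on the current day. It assumes fixed, hand-picked thresholds (6 hours and 48 hours are purely illustrative), whereas Monte Carlo's automated monitor learns its thresholds. The function names are hypothetical, and the sketch ignores the subtlety of gaps that straddle a weekday/weekend boundary.

```python
from datetime import datetime, timedelta


def freshness_threshold(now, weekday_threshold, weekend_threshold):
    # datetime.weekday() returns 0..6 for Monday..Sunday; 5 and 6 are the weekend.
    return weekend_threshold if now.weekday() >= 5 else weekday_threshold


def is_stale(last_update, now,
             weekday_threshold=timedelta(hours=6),
             weekend_threshold=timedelta(hours=48)):
    """Flag a table as stale if time since its last update exceeds today's threshold."""
    return now - last_update > freshness_threshold(now, weekday_threshold, weekend_threshold)


# 18 hours without an update: stale on a Wednesday, acceptable on a Saturday.
print(is_stale(datetime(2024, 1, 2, 18), datetime(2024, 1, 3, 12)))  # Wednesday
print(is_stale(datetime(2024, 1, 5, 18), datetime(2024, 1, 6, 12)))  # Saturday
```

A single threshold would have to choose between alerting every weekend or tolerating long weekday delays; splitting it by day type avoids that trade-off.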

Cozy up with some hot chocolate, because the banner in Monte Carlo is now filled with holiday cheer.