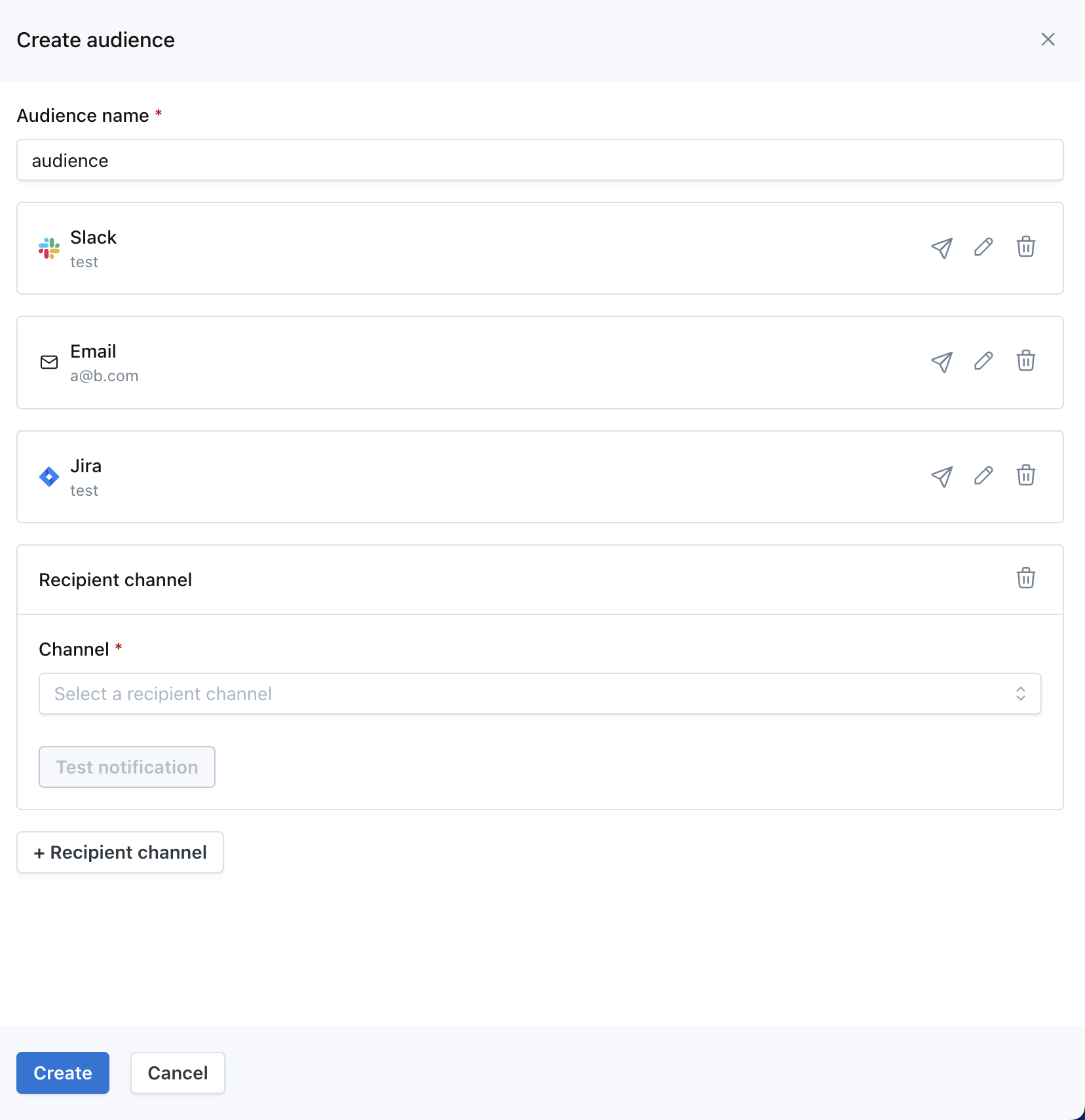

We've updated the audience creation form to simplify the experience of configuring recipient channels and managing monitor notifications.


Previously, new users in Monte Carlo would land on the Alerts page. This change affects only new users going forward: if your first login was before today, you will continue to land on Alerts.

User research indicated that most new users were looking for information about their tables, such as checking lineage, viewing freshness or volume, or adding a monitor. Landing on the Alerts page often made that harder, because it was difficult to search for and open the specific table you cared about.

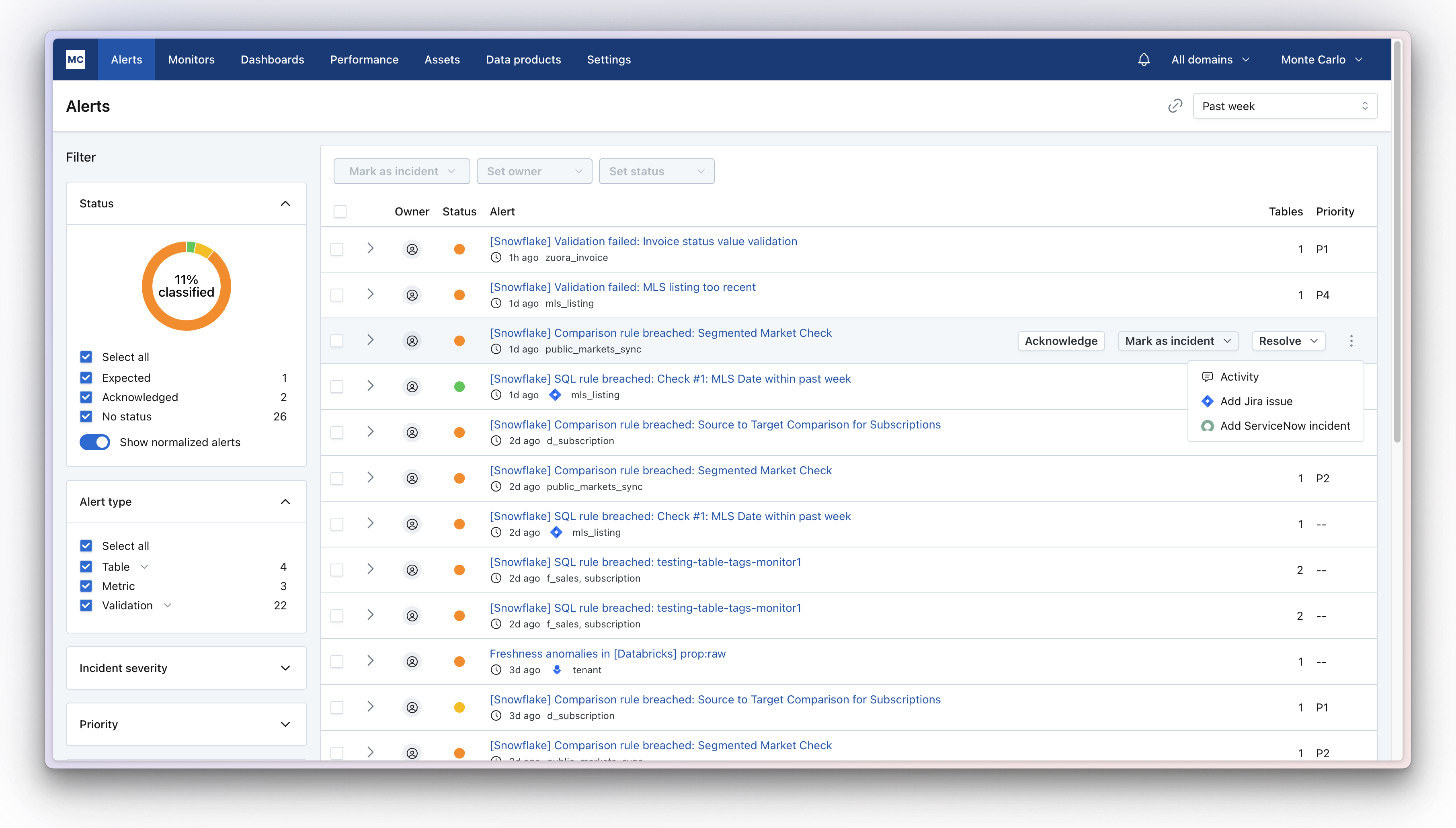

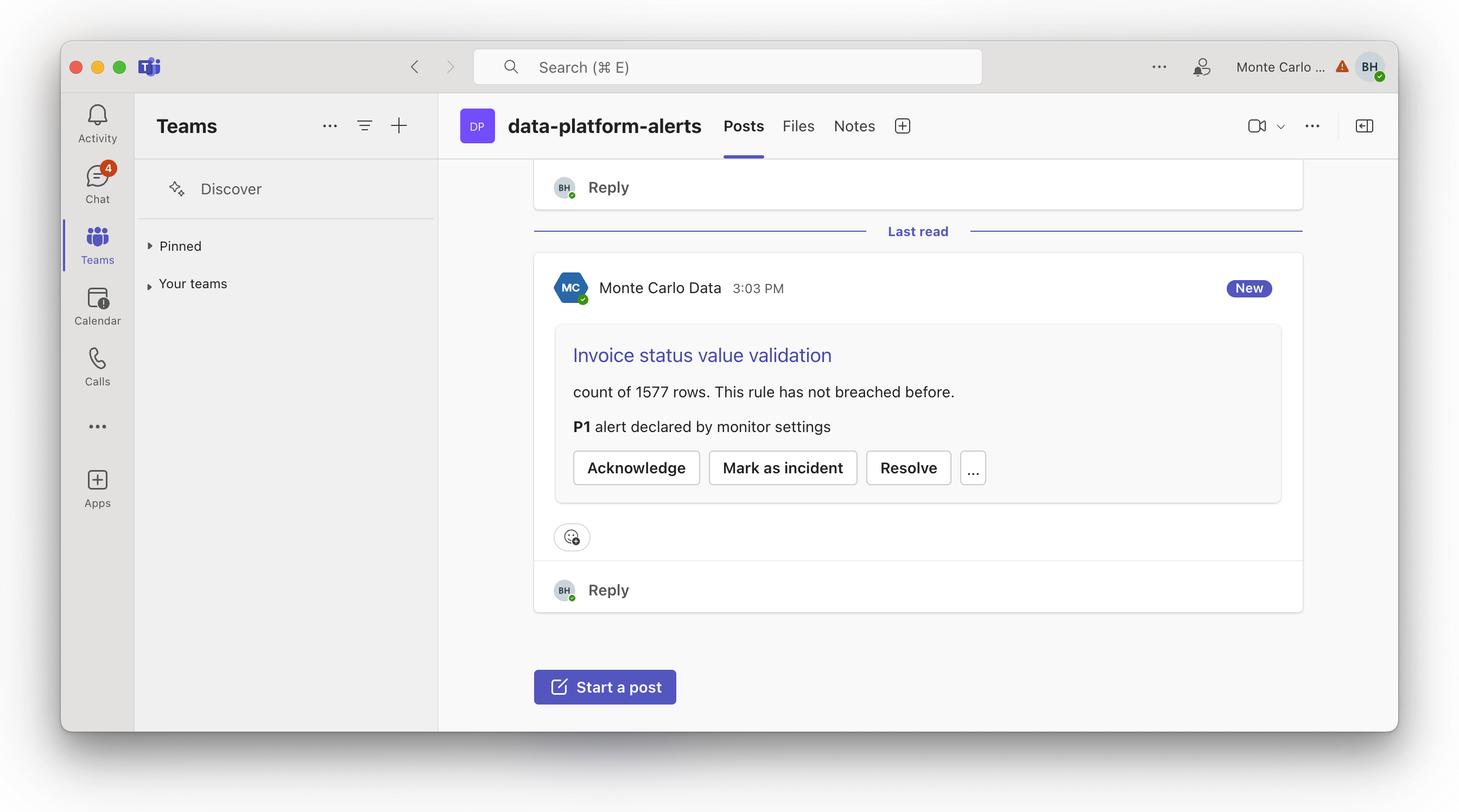

You can now acknowledge and resolve alerts, mark them as incidents, assign owners, and snooze monitors without leaving Teams or the Monte Carlo alert feed. With direct links to add Jira issues and view monitor details, you can get data operations work done quickly.

The feed is gradually rolling out to customers, and Microsoft Teams support will be released to all customers on Monday. If you would like it turned on now, please reach out to [email protected].

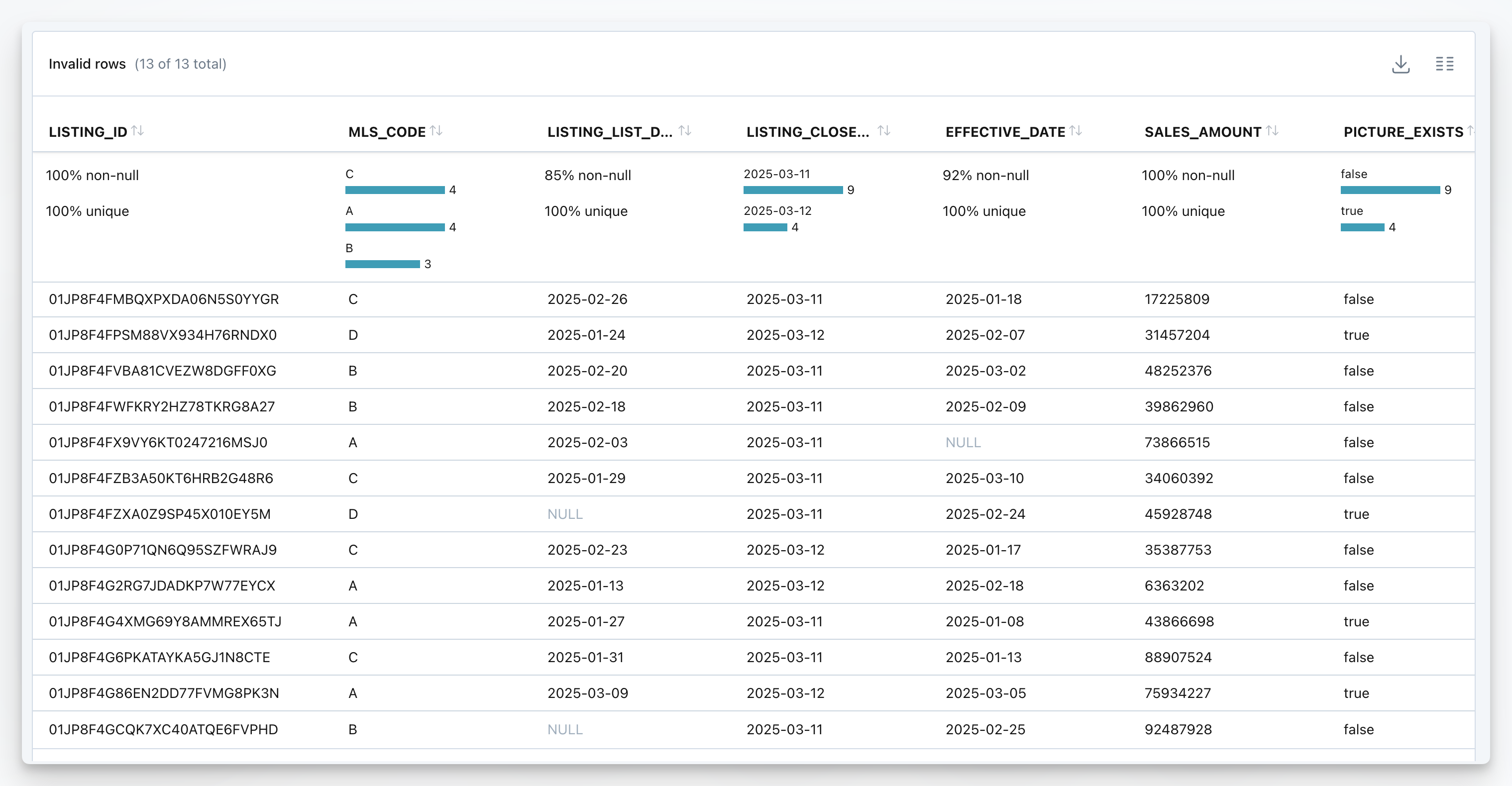

Investigating data issues just got easier with invalid row sample profiling. Quickly identify correlations with at-a-glance statistics and top values. Drill in deeper, copy entire columns directly to your clipboard, customize views by column selection and sorting, or download rows as a CSV for offline analysis.

Now available in public preview and rolling out to accounts over the next week. If you have feedback or requests, we'd love to hear from you.

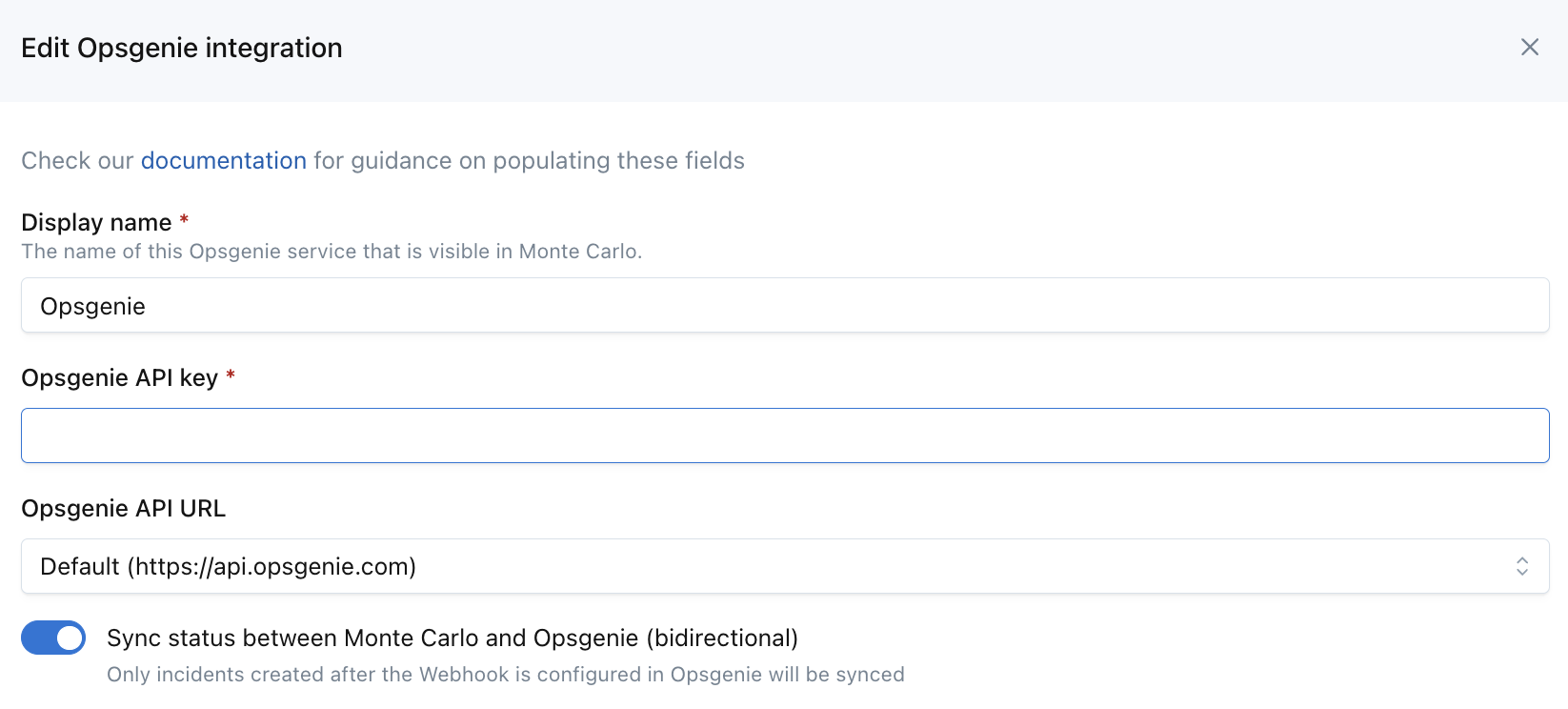

We've added support for bidirectional synchronization for alert priority, owner, status, and comments between Monte Carlo and Opsgenie so that users no longer have to reconcile the information manually.

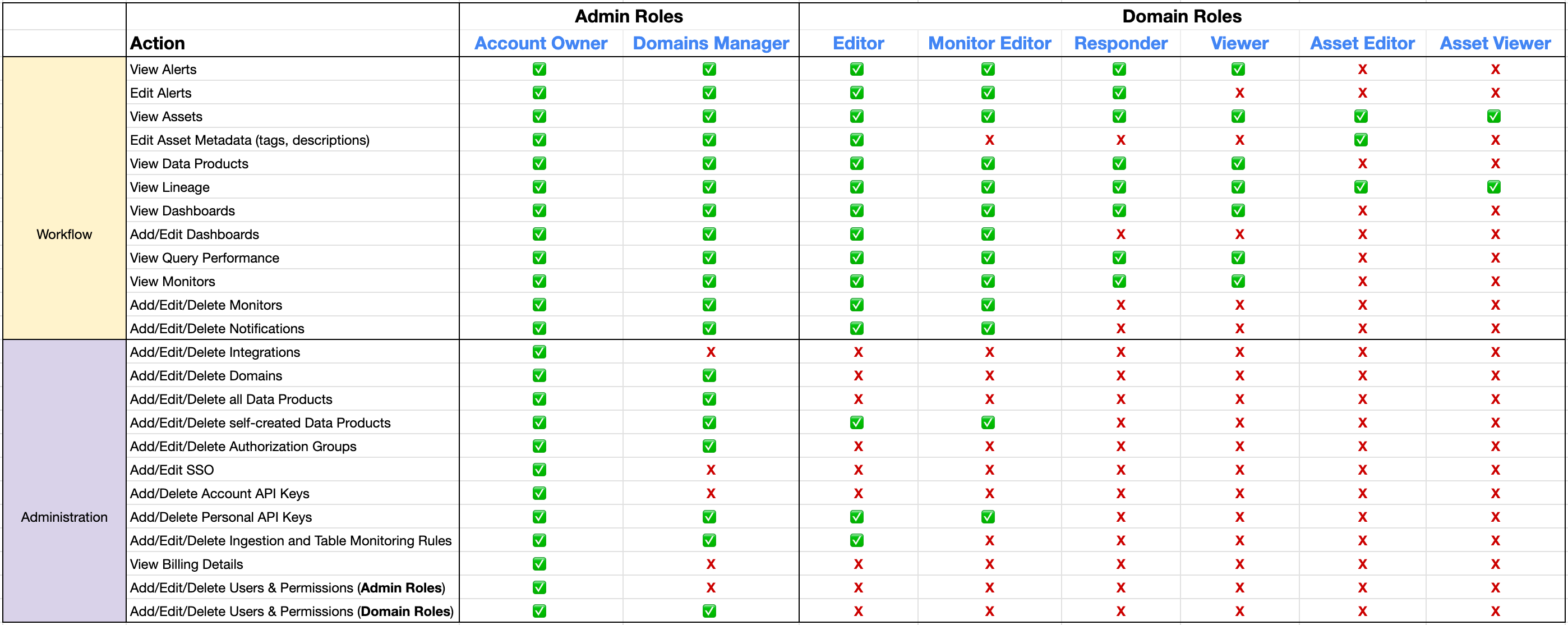

A user with this role has all the same privileges as an Editor, except they cannot:

This means that a Monitor Editor can create monitors like Metrics and Validations, but cannot add Table Monitors that may contribute to your Monte Carlo bill. See docs for more details.

The section in Settings formerly called "Usage" has been renamed "Table Monitors". Users go here to manage which assets are ingested and which have table monitors applied.

This is a cosmetic change and does not impact any underlying functionality.

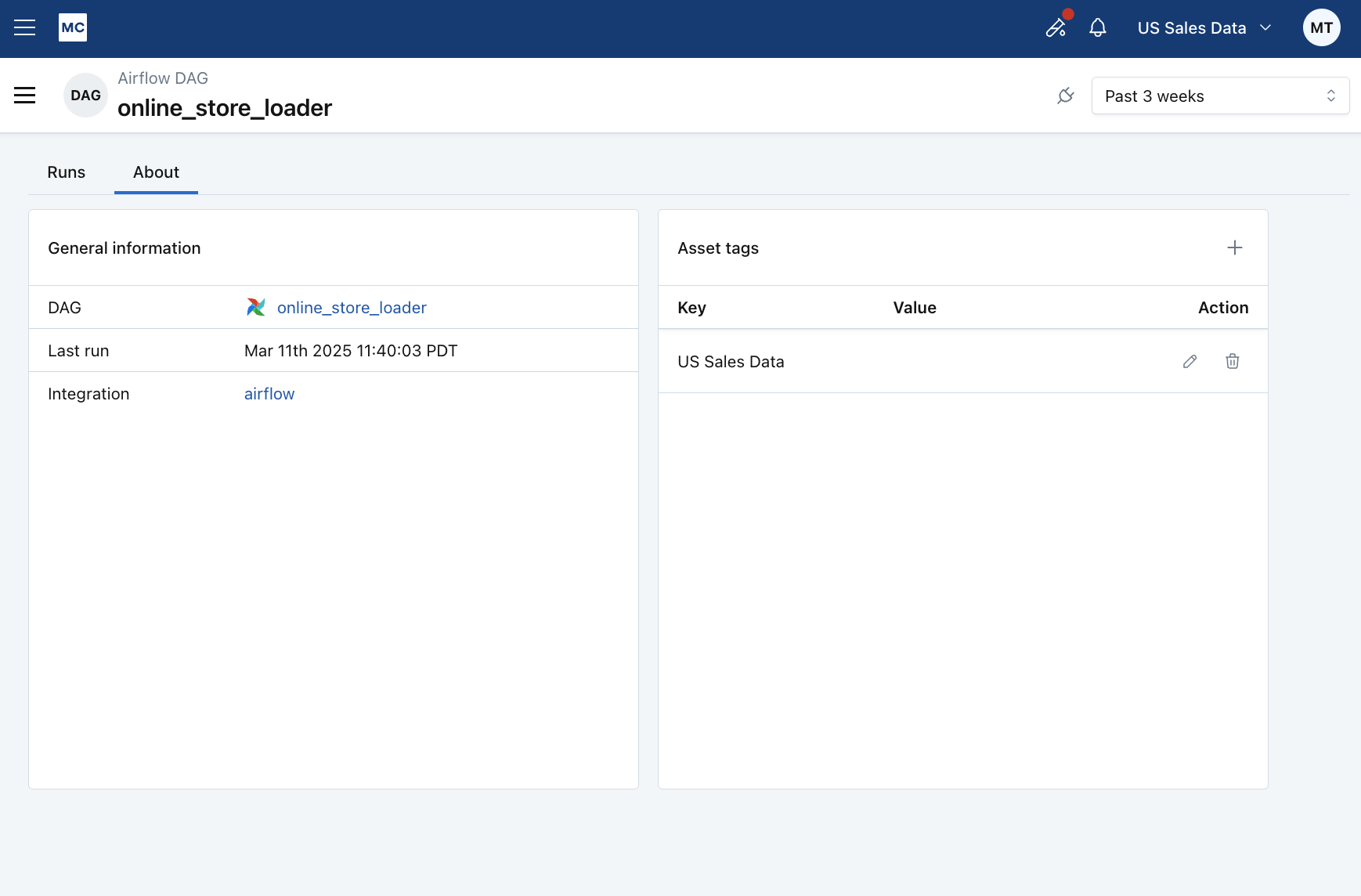

Users can now apply tags to ETL jobs (Airflow DAGs, Databricks workflows, Azure Data Factory pipelines, and dbt jobs), similar to how they tag tables today. MC also automatically imports existing job tags from Airflow DAGs, Databricks workflows, and Azure Data Factory pipelines. Importing Airflow tags requires the Python package `airflow-mcd` version 0.3.6 or later.

This gives users the flexibility to organize their jobs the same way as tables, for example by using tags to bulk-add jobs to audiences or domains.
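If you want to confirm that an Airflow environment meets the `airflow-mcd` requirement before relying on tag import, a check along these lines may help. This is a minimal sketch, not part of Monte Carlo's tooling. The helper names `release_tuple`, `meets_minimum`, and `airflow_mcd_supports_tag_import` are hypothetical. The sketch assumes the package is installed under the distribution name `airflow-mcd` and compares only the numeric release segment rather than implementing full PEP 440 ordering.

```python
import re
from importlib.metadata import PackageNotFoundError, version

# Minimum version stated in the release note for Airflow tag import.
MIN_AIRFLOW_MCD = "0.3.6"


def release_tuple(ver: str) -> tuple[int, ...]:
    """Parse the numeric release segment, e.g. '0.3.10' -> (0, 3, 10).

    Simplified on purpose: keeps the leading digits of each dot-separated
    part and stops at the first part without any. This is NOT full PEP 440
    ordering (for example, '0.3.6rc1' is treated the same as '0.3.6').
    """
    parts = []
    for piece in ver.split("."):
        match = re.match(r"\d+", piece)
        if match is None:
            break
        parts.append(int(match.group()))
    return tuple(parts)


def meets_minimum(installed: str, minimum: str = MIN_AIRFLOW_MCD) -> bool:
    """Return True if `installed` is at or above `minimum` (numeric parts only)."""
    a, b = release_tuple(installed), release_tuple(minimum)
    width = max(len(a), len(b))
    # Pad with zeros so that '0.4' compares equal to '0.4.0'.
    a += (0,) * (width - len(a))
    b += (0,) * (width - len(b))
    return a >= b


def airflow_mcd_supports_tag_import() -> bool:
    """Hypothetical helper: checks the locally installed airflow-mcd version."""
    try:
        return meets_minimum(version("airflow-mcd"))
    except PackageNotFoundError:
        # Package not installed in this environment.
        return False


if __name__ == "__main__":
    print(airflow_mcd_supports_tag_import())
```

Run it in the same Python environment as your Airflow deployment, since that is where `airflow-mcd` needs to be installed. Comparing integer tuples instead of raw strings avoids the classic mistake of treating "0.3.10" as older than "0.3.6".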

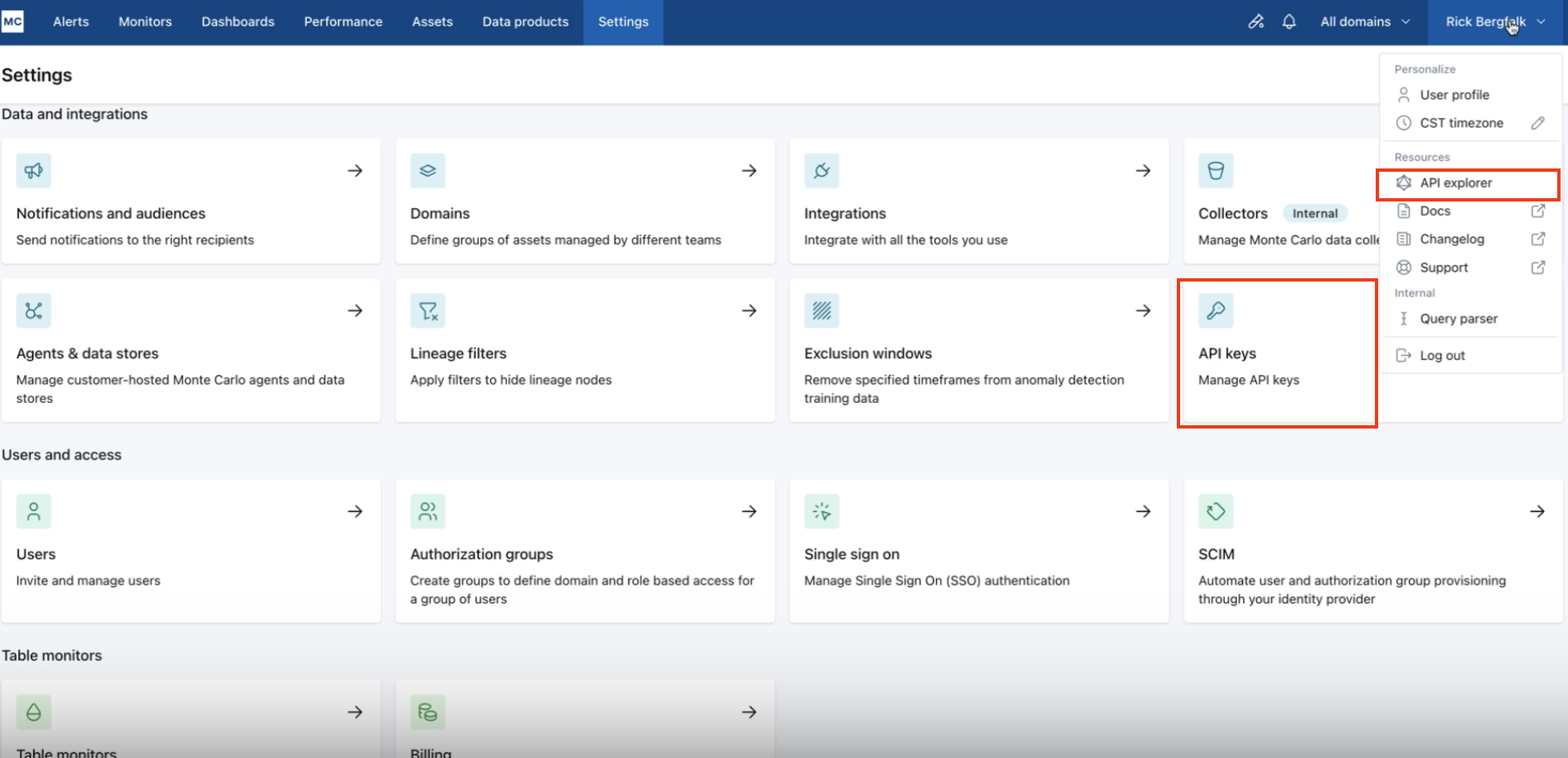

We are removing the old "API" section from the Settings page. "API keys" is now under the "Data and integrations" section, and "API explorer" is now in the user menu.

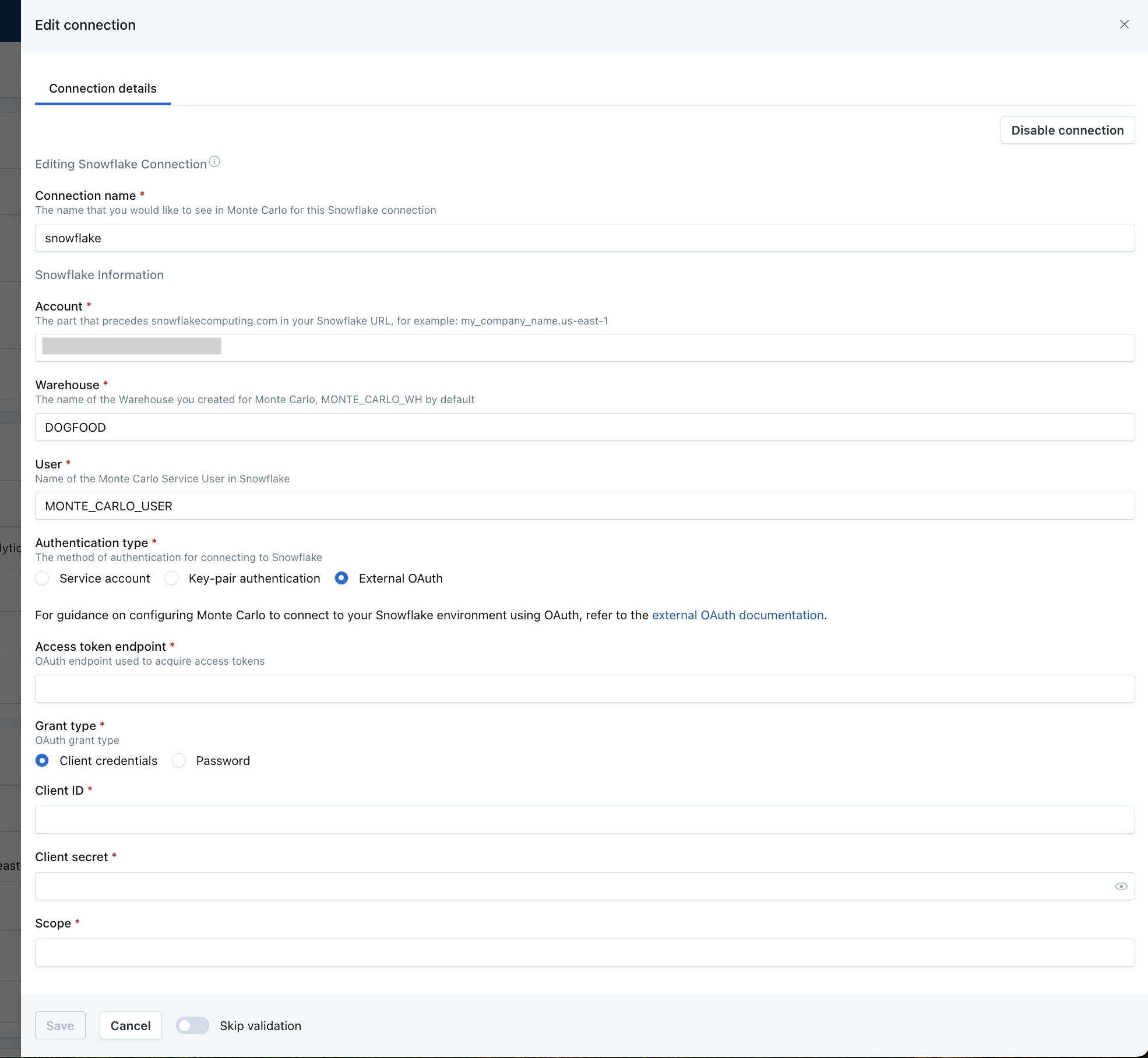

MC now supports Snowflake OAuth via Snowflake External OAuth. Customers can update existing Snowflake connections to use OAuth or set up new connections with OAuth. Docs here.