The operations agent is more than just a chatbot: it brings the power of the tools in our MCP Server to users out of the box, with no API keys, no setup, no configs, and no external tools. Just ask.

The operations agent also partners with our support assistant, trained on our public documentation, so you can get answers to product questions directly in Monte Carlo. Learn more about the support assistant.

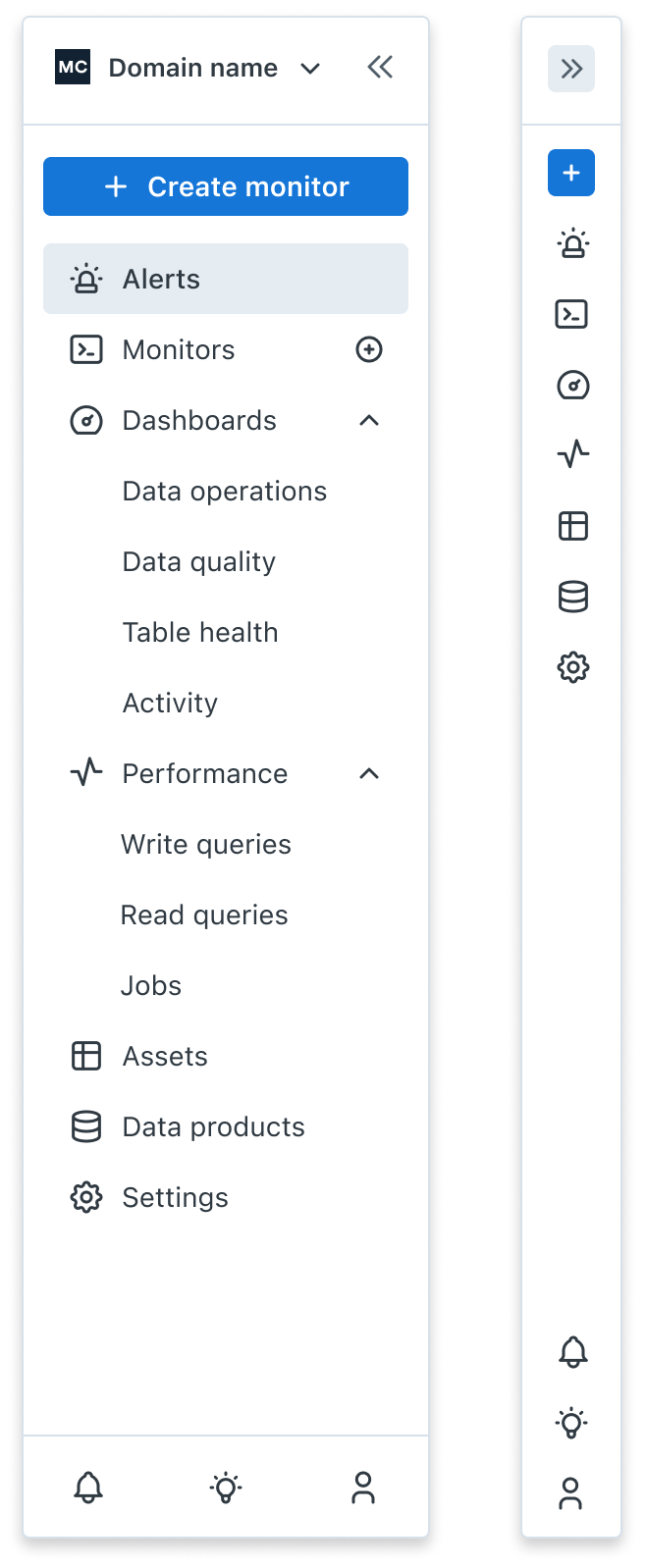

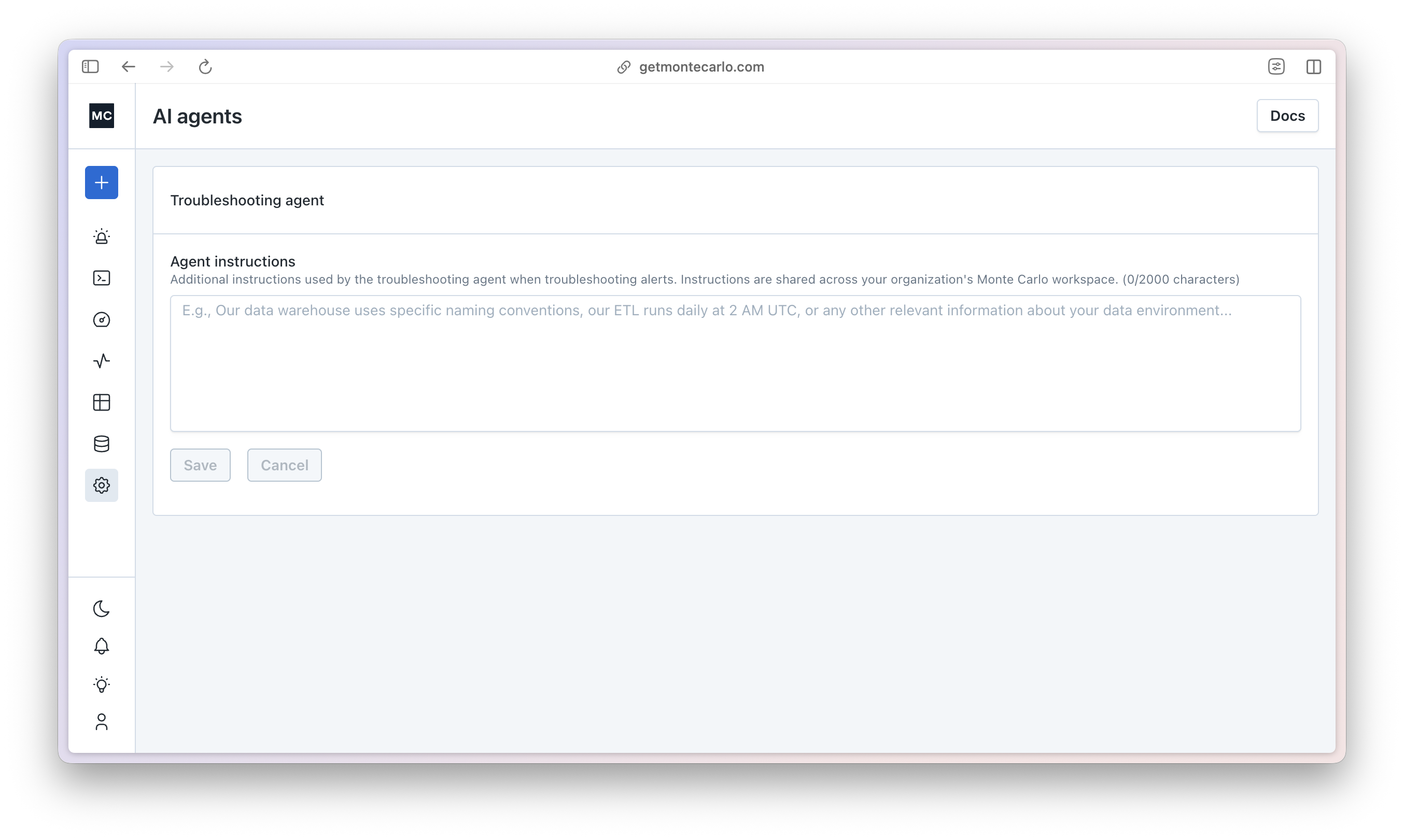

The operations agent is in public preview. If necessary, both the operations agent and the support assistant can be disabled on the AI agents settings page. If AI features were already disabled, the operations agent is disabled by default.