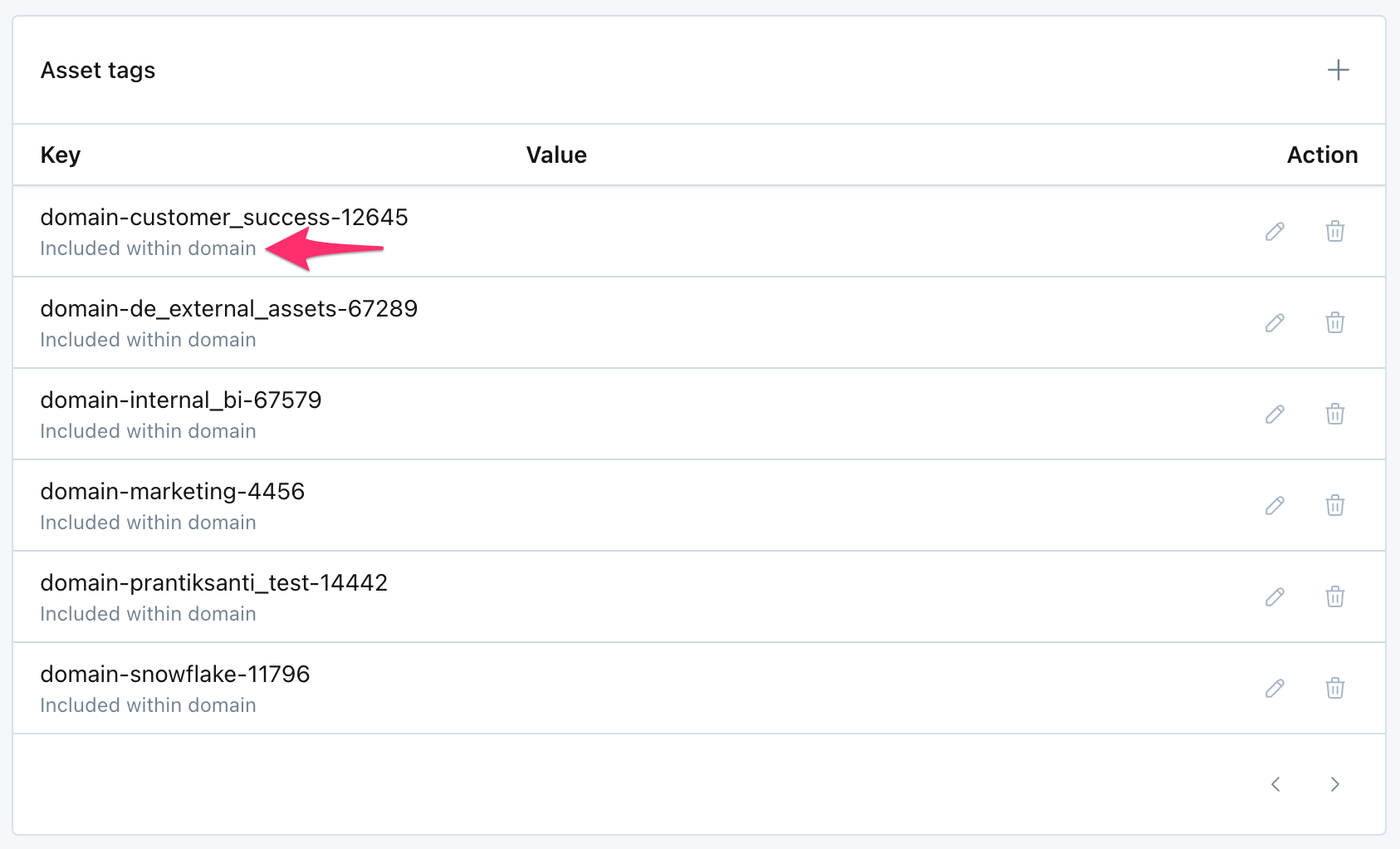

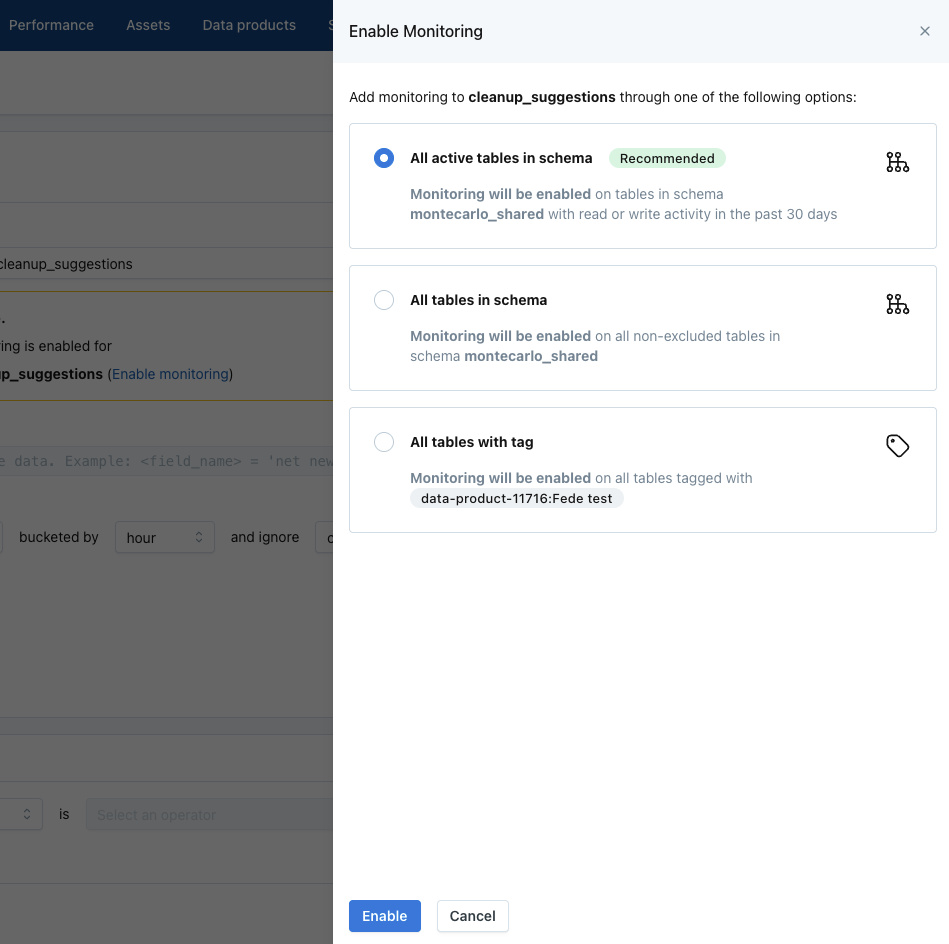

Domains now apply a tag to the Assets they include, just as Data Products do.

Our goal is to improve the filtering experience across Monte Carlo. When users want to slice, dice, or target the right tables on Dashboards, Table Monitors, the Alert feed, and more, they often need some combination of Data Products, Domains, and Tags. Going forward, we'll invest in Asset Tags as the interoperable layer across all of these filter concepts.