For customers who use Redshift data sharing to share data between producer and consumer clusters, MC now supports lineage between those clusters and custom monitoring on each.

See docs here for more details

We previously announced that Insights would be deprecated on July 1. Within the next 24 hours, this feature will no longer be available. You can read more about that announcement here.

Insights are replaced by Exports, which make critical Monte Carlo objects—Monitors, Alerts, Events, and Assets—exportable in clean, consistent formats. By making this well-modeled data available, customers can build better and more reliable analytics than they could before.

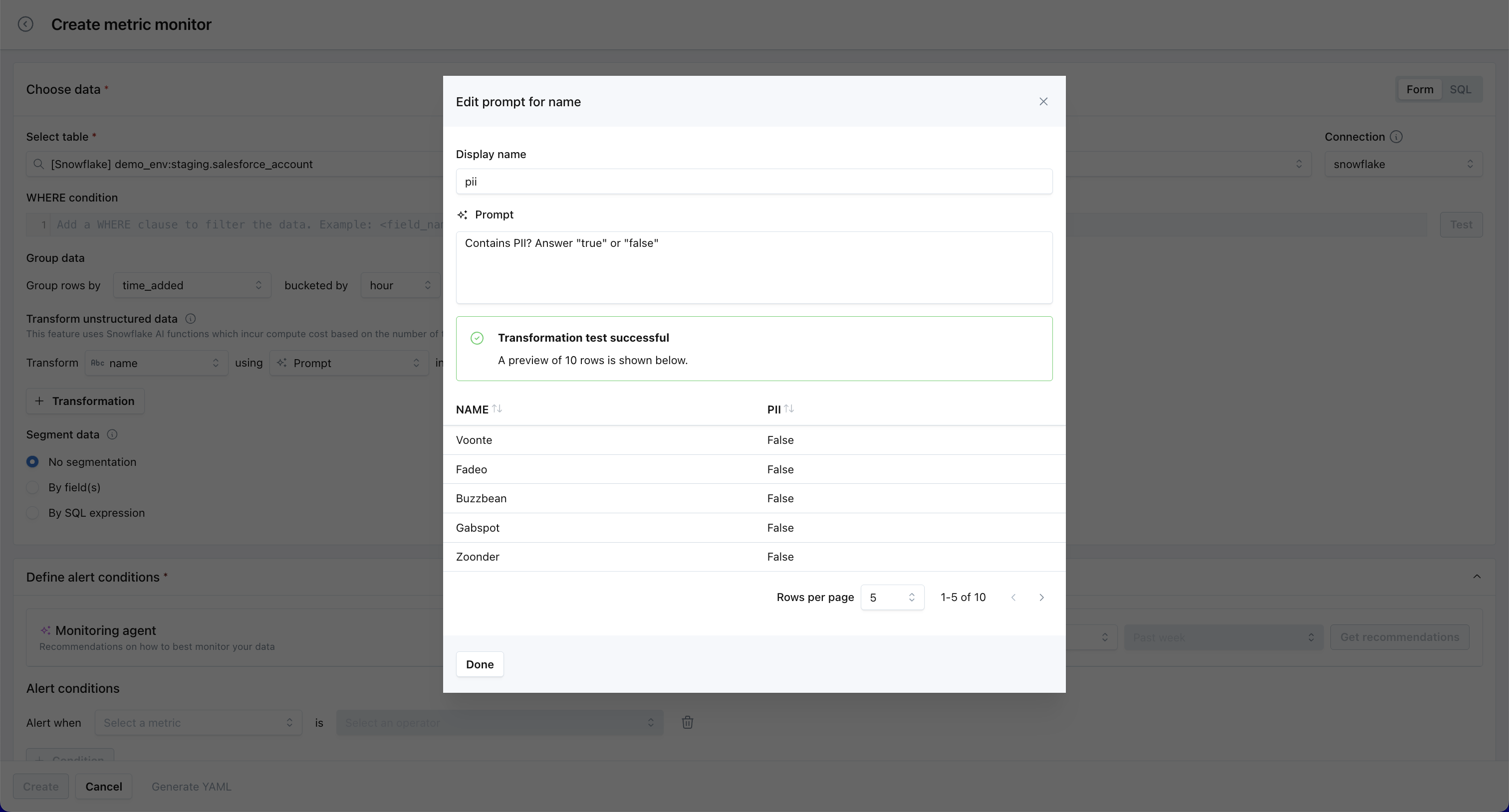

Monte Carlo now supports unstructured data monitoring in metric monitors, enabling data and AI teams to apply LLM-powered transformations—such as sentiment analysis, classification, and custom prompts—to text columns from Snowflake and Databricks. Transformed columns can be used in alert conditions and to segment data—no SQL required. Built-in guardrails ensure performance and cost-efficiency at scale. This unlocks observability for LLM and RAG pipelines, allowing users to monitor things like tone drift in generated output, hallucination-prone responses, or consistency between input context and generated text.

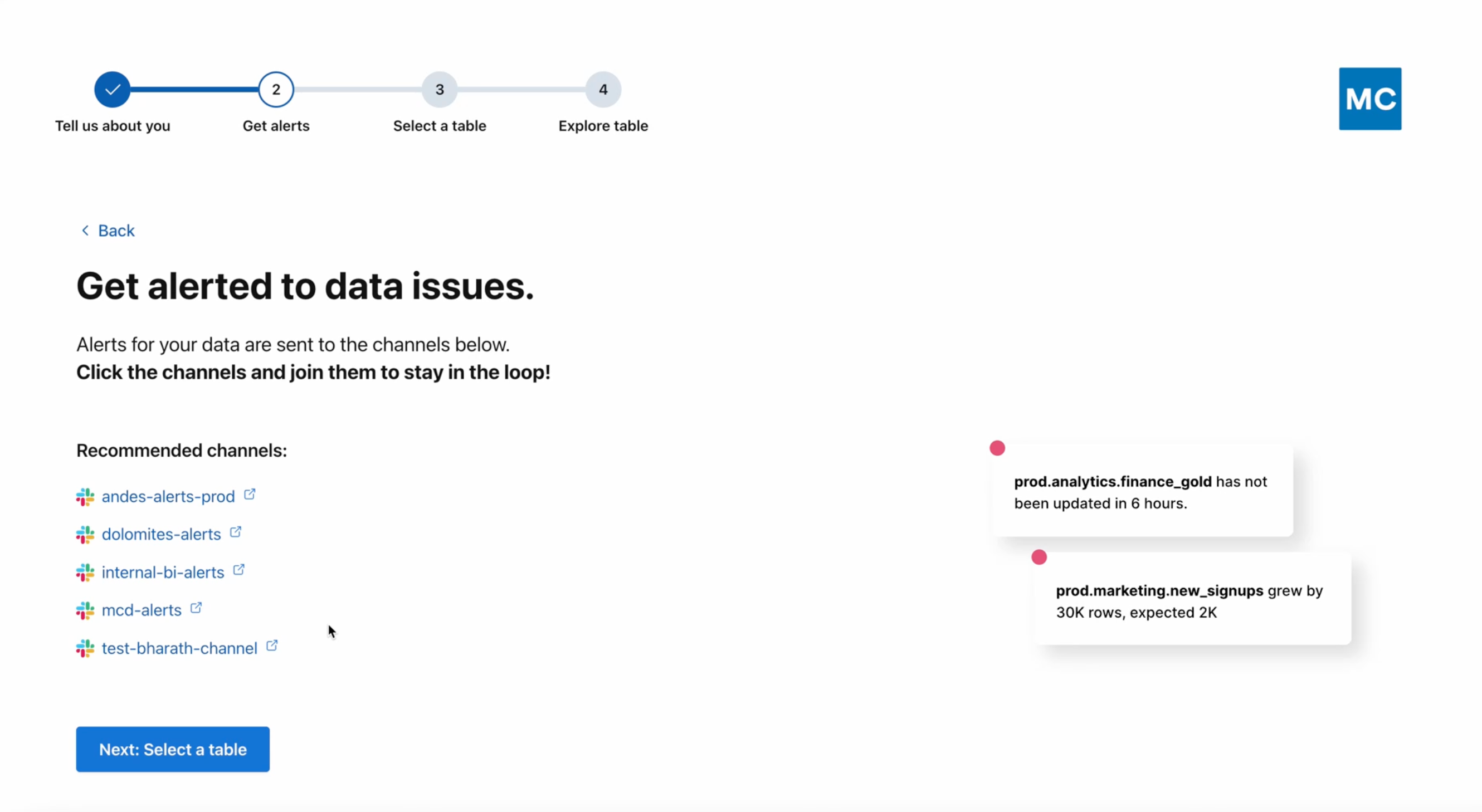

Historically, if you were logging into Monte Carlo for the very first time, we did not offer much in-product onboarding. This was a jarring way to experience the product for the first time.

We're now sending new users through a more opinionated, in-app onboarding flow. It's designed to:

(1) is one of the most effective things we've found to drive user retention, and (2) reflects the most common actions we saw successful users taking in their first session.

Our intention is to help more users accomplish what they came to do on their first visit to Monte Carlo and build healthy habits that ensure they continue to see value.

One of four steps in the onboarding flow shown to new users when they log into Monte Carlo for the first time. This one encourages them to join channels (Slack, Teams, etc.) where alerts are already being sent.

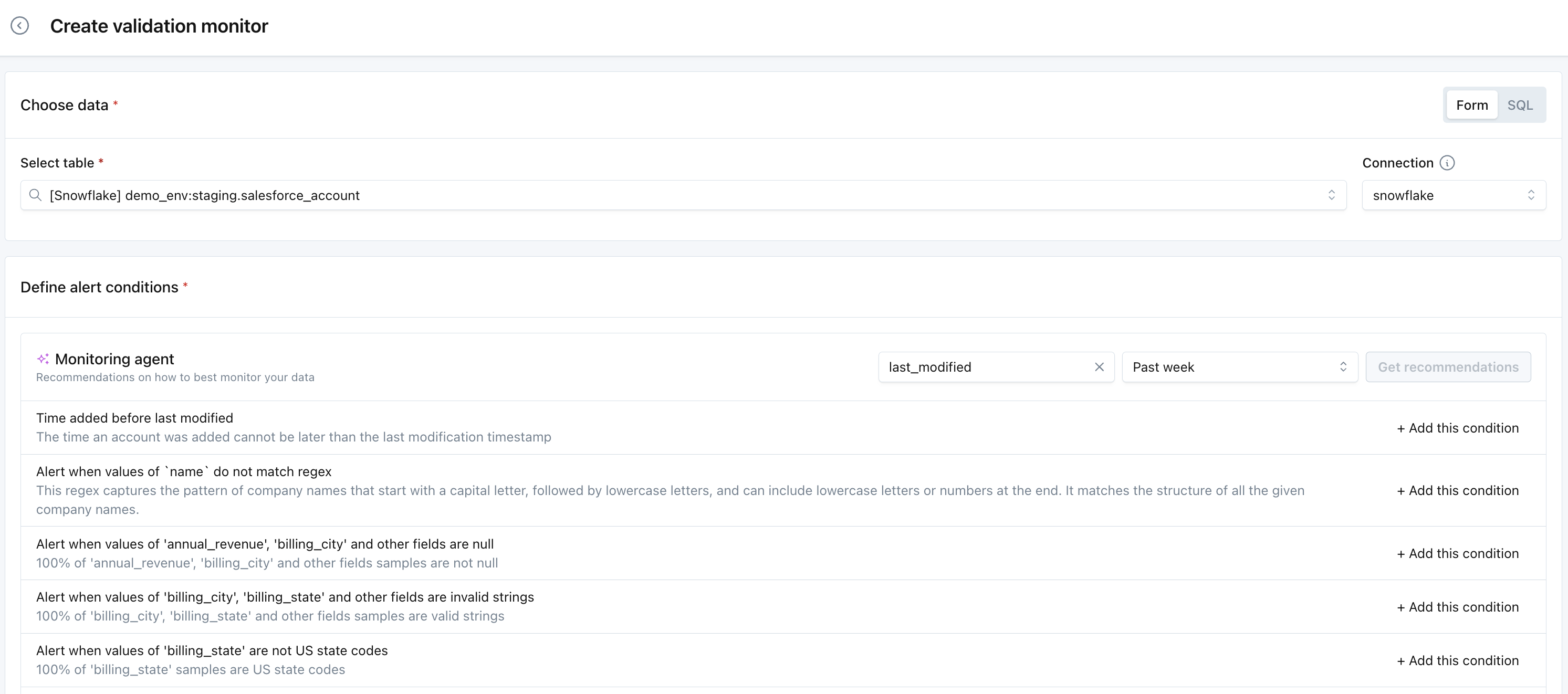

Our AI-powered Monitoring agent is now integrated directly into the metric and validation monitor wizards. The Monitoring agent analyzes sample data from your tables to recommend the most relevant monitors, including multi-column validations, segmented metric monitors, and regular expression validations. This integration brings the recommendations previously available in Data profiler directly into the monitor creation workflow, so you can set up comprehensive data quality monitoring faster, with AI-suggested configurations tailored to your data.

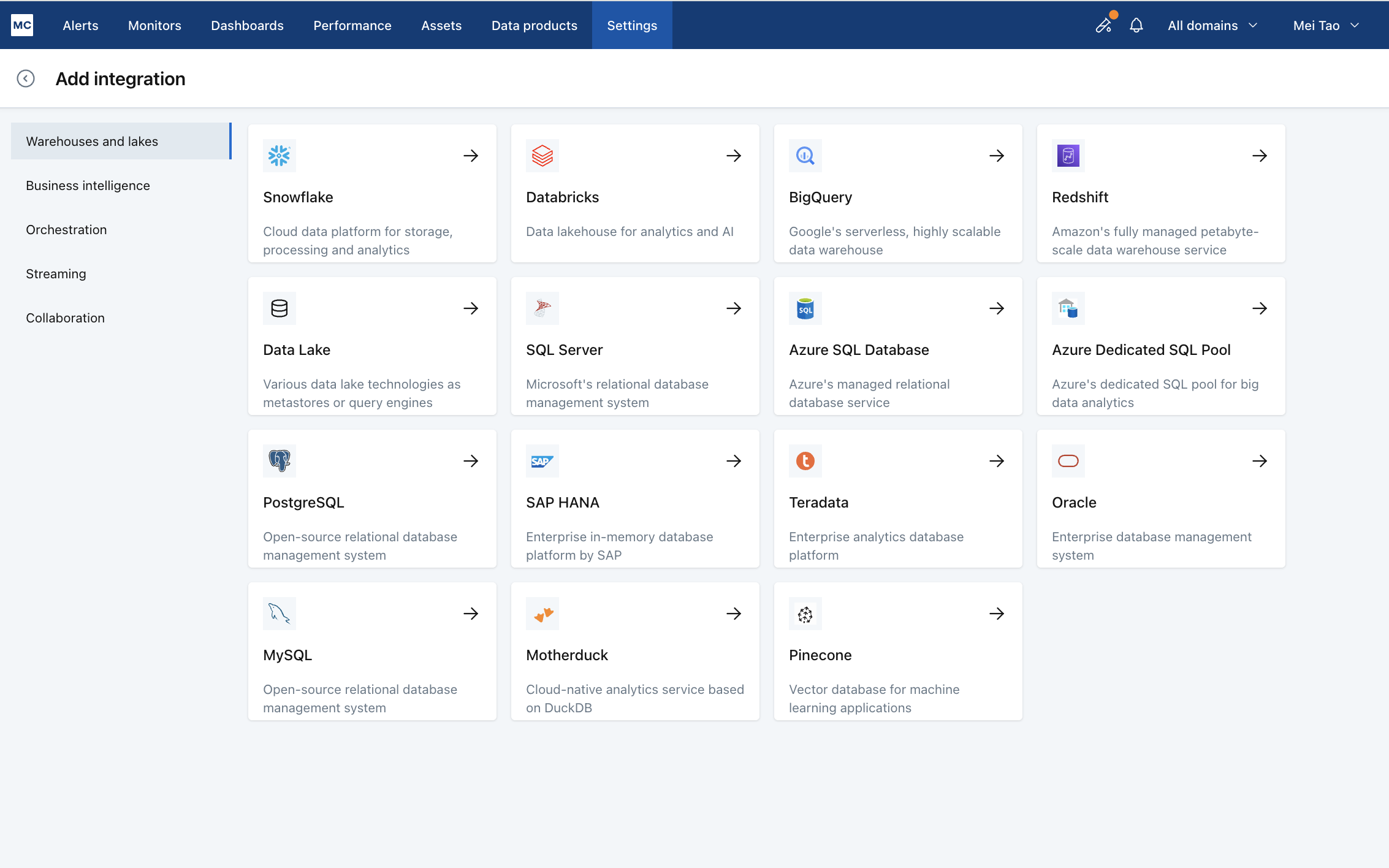

MC released a number of UI updates to the Integrations Settings view.

Check out the video walkthrough here.

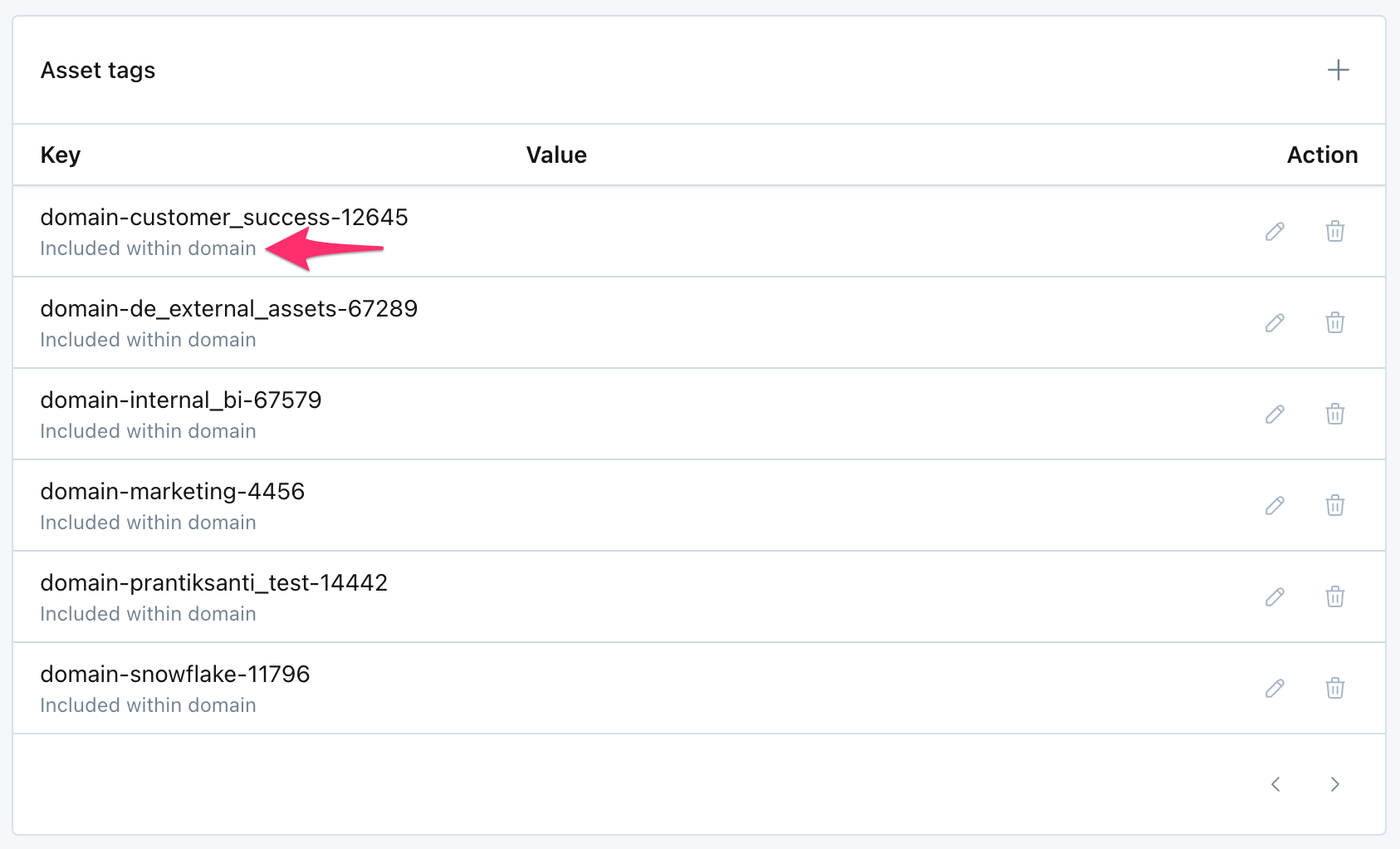

Similar to how Data Products apply a tag to the Assets they include, Domains now do the same.

Our goal is to improve the filtering experience across Monte Carlo. When users want to slice, dice, or target the right tables on Dashboards, Table Monitors, the Alert feed, and more, they often need some combination of Data Products, Domains, and Tags. Going forward, we'll invest in Asset Tags as the interoperable layer for all these different filter concepts.

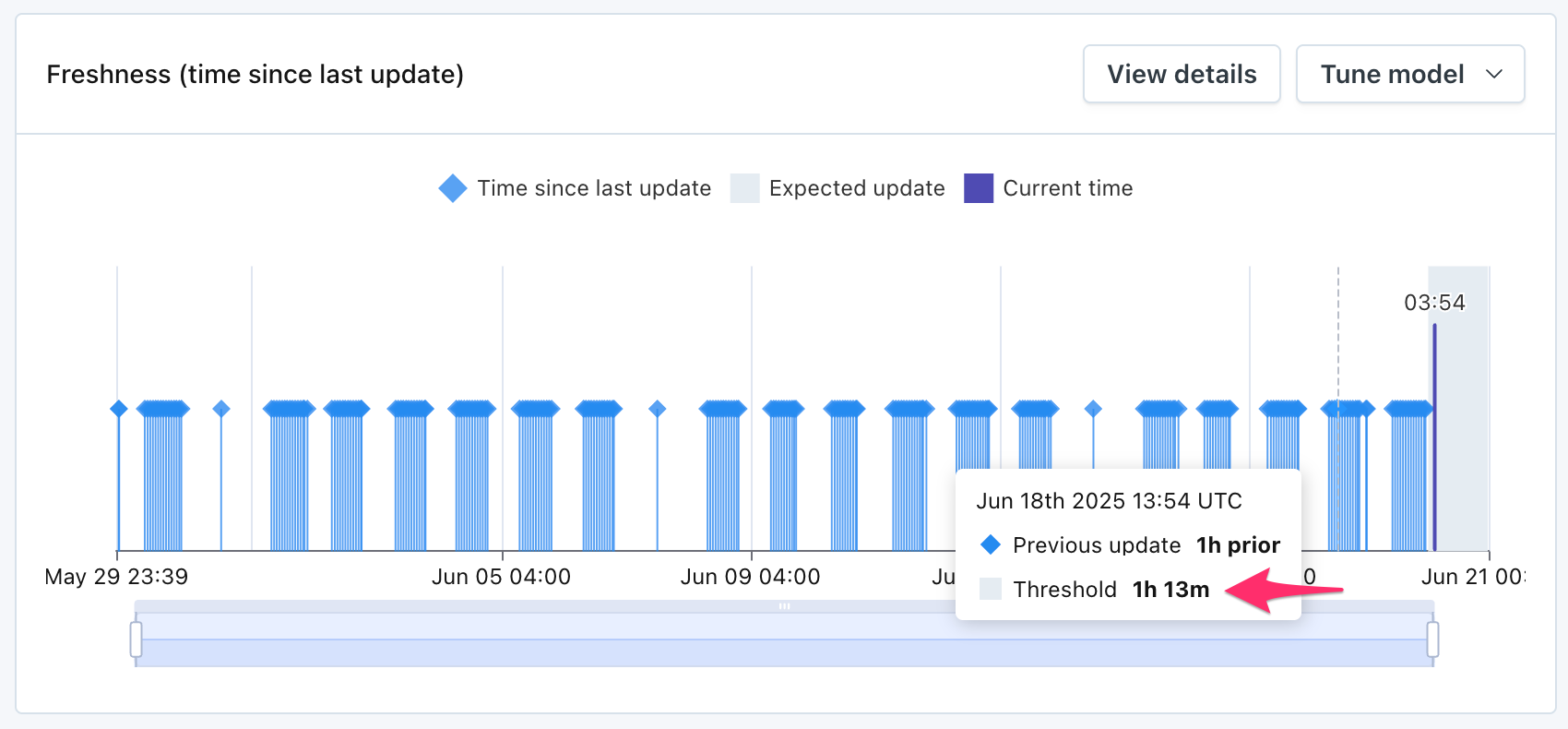

Last year, we improved freshness monitors to handle weekly patterns much better. This is useful if your tables update frequently during the week, but not on the weekends.

Now, we support the same for daily patterns. If your tables update frequently during the day and infrequently at night, the threshold will adjust to be much tighter during the day and much wider at night.

This is particularly common in financial services, where many data pipelines update frequently during market hours but not at night or on weekends.

In this table, updates happen hourly during the day and not at all during the night. With this new release, the threshold during the day is now much tighter: just over an hour.
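To make the idea concrete, here is a minimal Python sketch of a time-of-day-aware freshness threshold. It is an illustration only, not Monte Carlo's actual model: the function names (`hourly_thresholds`, `is_stale`), the `multiplier` and `default_seconds` parameters, and the rule of padding the longest historical gap per hour are all assumptions made for this example.

```python
from collections import defaultdict
from datetime import datetime, timedelta


def hourly_thresholds(update_times, multiplier=1.25):
    """Map hour of day -> allowed seconds until the next update.

    Hypothetical rule: for each hour in which an update landed, take the
    longest observed wait until the following update and pad it by `multiplier`.
    """
    ordered = sorted(update_times)
    gaps_by_hour = defaultdict(list)
    for previous, current in zip(ordered, ordered[1:]):
        gaps_by_hour[previous.hour].append((current - previous).total_seconds())
    return {hour: max(gaps) * multiplier for hour, gaps in gaps_by_hour.items()}


def is_stale(last_update, now, thresholds, default_seconds=24 * 3600):
    """Return True if the wait since `last_update` exceeds that hour's threshold."""
    limit = thresholds.get(last_update.hour, default_seconds)
    return (now - last_update).total_seconds() > limit


# Example history: hourly updates from 08:00 to 18:00 for one week, none overnight.
history = [
    datetime(2025, 6, 2) + timedelta(days=day, hours=hour)
    for day in range(7)
    for hour in range(8, 19)
]
thresholds = hourly_thresholds(history)
```

With this example history, an update that lands at 10:00 gets a 75-minute threshold, while the last update of the day at 18:00 gets a much wider one that covers the overnight gap.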

Users with the Viewer role can now access data profiling and monitor recommendations, making it easier for more team members to analyze and understand their tables. Note: the Editor role is still required in order to create monitors.

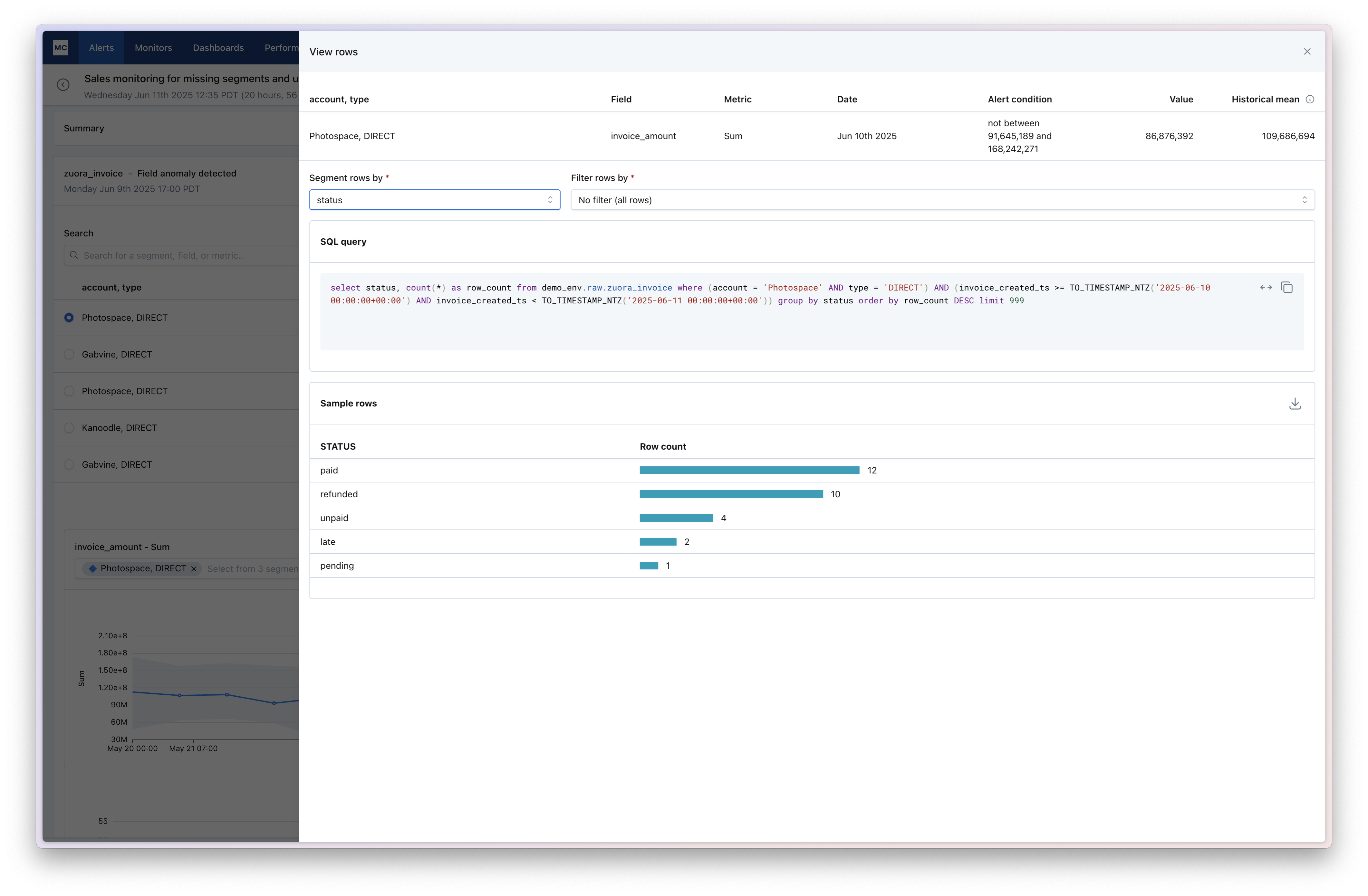

Metric Investigation helps you trace anomalies detected by Monte Carlo metric monitors back to their potential root causes — faster and with more context. With this feature, you can sample and segment rows related to a metric alert, giving you immediate visibility into the underlying data that triggered an alert.

We've also extended sampling to all metrics, rather than only the subset that was previously supported.
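As a rough illustration of what segmenting the rows behind a metric alert can reveal, the sketch below computes a null rate per segment over a sample of rows. It is a simplified stand-in, not how Metric Investigation is implemented; the function name `null_rate_by_segment`, the row format (a list of dictionaries), and the field names are hypothetical.

```python
from collections import Counter


def null_rate_by_segment(rows, metric_field, segment_field):
    """Return (segment, null_rate) pairs, highest null rate first."""
    totals = Counter()
    nulls = Counter()
    for row in rows:
        segment = row.get(segment_field)
        totals[segment] += 1
        if row.get(metric_field) is None:
            nulls[segment] += 1
    rates = {segment: nulls[segment] / totals[segment] for segment in totals}
    return sorted(rates.items(), key=lambda item: item[1], reverse=True)


# Hypothetical sample of rows pulled for an alert on the null rate of `email`.
sample_rows = [
    {"region": "us", "email": None},
    {"region": "us", "email": "a@example.com"},
    {"region": "eu", "email": "b@example.com"},
    {"region": "eu", "email": "c@example.com"},
]
ranked = null_rate_by_segment(sample_rows, "email", "region")
```

Sorting segments by rate puts the most affected segment first, which is usually the quickest way to see whether an anomaly is concentrated in one slice of the data.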

Learn more in the Metric investigation documentation.