Monitors as Code

Overview

Monte Carlo developed a YAML-based monitors configuration to help teams deploy monitors as part of their CI/CD process. The following guide explains how to get started with monitors as code.

Prerequisites

- Install the CLI

- When running

montecarlo configure, provide your API key

Using code to define monitors

First, you will need to create a Monte Carlo project. A Monte Carlo project is simply a directory which contains a montecarlo.yml file, which contains project-level configuration options. If you are using DBT, we recommend placing montecarlo.yml in the same directory as dbt_project.yml.

The montecarlo.yml format:

version: 1

default_resource: <string>

include_file_patterns:

- <string>

exclude_file_patterns:

- <string>

namespace: <string - optional>Description of options inside the montecarlo.yml file:

version: The version of MC configuration. Set to1default_resource: The warehouse friendly name or UUID where YAML-defined monitors will be created. The warehouse UUID can be obtained via thegetUserAPI as described here.- If your account only has a single warehouse configured, MC will use this warehouse by default, and this option does not need to be defined.

- If you have multiple warehouses configured, you will need to (1) define

default_resource, and (2) specify the warehouse friendly name or UUID for each monitor explicitly in theresourceproperty to override default resource. (see YAML format for configuring monitors below).

include_file_patterns: List of file patterns to include when searching for monitor configuration files. By default, this is set to**/*.yamland**/*.yml. With these defaults, MC will search recursively for all directories nested within the project directory for any files with ayamlorymlextension.exclude_file_patterns: List of file patterns to exclude when searching for monitor configuration files. For example:directory_name/*,filename__*,*.format

Example montecarlo.yml configuration file, which should be sufficient for customers with a single warehouse:

version: 1Example montecarlo.yml configuration file, for customers with multiple warehouses configured.

version: 1

default_resource: bigqueryDefining individual monitors

Define monitors in separate YML files than "montecarlo.yml"Your montecarlo.yml file should only be used to define project-level configuration options. Use separate YML files to define individual monitors.

Monitors are defined in YAML files within directories nested within the Monte Carlo project. Monitors can be configured in standalone YAML files, or embedded within DBT schema.yml files within the meta property of a DBT model definition.

Monitor definitions inside of yml files must conform to the expected format in order to be processed successfully by the CLI. Some example monitor configurations, defining the most basic options can be found below.

For an exhaustive list of configuration options and their definitions, refer to the Monitor configuration reference section below.

Example of a standalone monitor definition:

namespace: <string - optional>

montecarlo:

custom_sql:

- name: no_errors

warehouse: default warehouse override

sql: SELECT * FROM db.schema.results WHERE state = 'error'

alert_conditions:

- operator: GT

threshold_value: 0Example of monitor embedded within a DBT schema.yml file:

version: 2

models:

- name: results

description: Run results table

meta:

montecarlo:

custom_sql:

- name: no_errors

sql: SELECT * FROM db.schema.results WHERE state = 'error'

alert_conditions:

- operator: GT

threshold_value: 0dbt ref resolution

In the snippet above, you can see an example of resolving dbt ref("<model_name>") notation for any string field within Monte Carlo monitor configs. Just wrap the ref in [[ ]] and make sure to quote the string as in the example above. To resolve refs, you must pass the --dbt-manifest <path_to_dbt_manifest> argument to the CLI when applying the monitor config with the path to your dbt manifest.json file (by default created in the target/ directory after running dbt commands like compile or run). Each dbt ref will be looked up in the manifest and replaced with the full table name. To use this feature you must be on version 0.42.0 or newer of the Monte Carlo CLI.

Tip: Using monitors as code with DBTIf your organization already uses DBT, you may find that embedding monitor configurations within DBT

schema.ymlfiles may make maintenance easier, as all configuration/metadata concerning a given table are maintained in the same location. For an example DBT repo with some basic monitor configuration, click here.

Shortcut to building monitor configuration

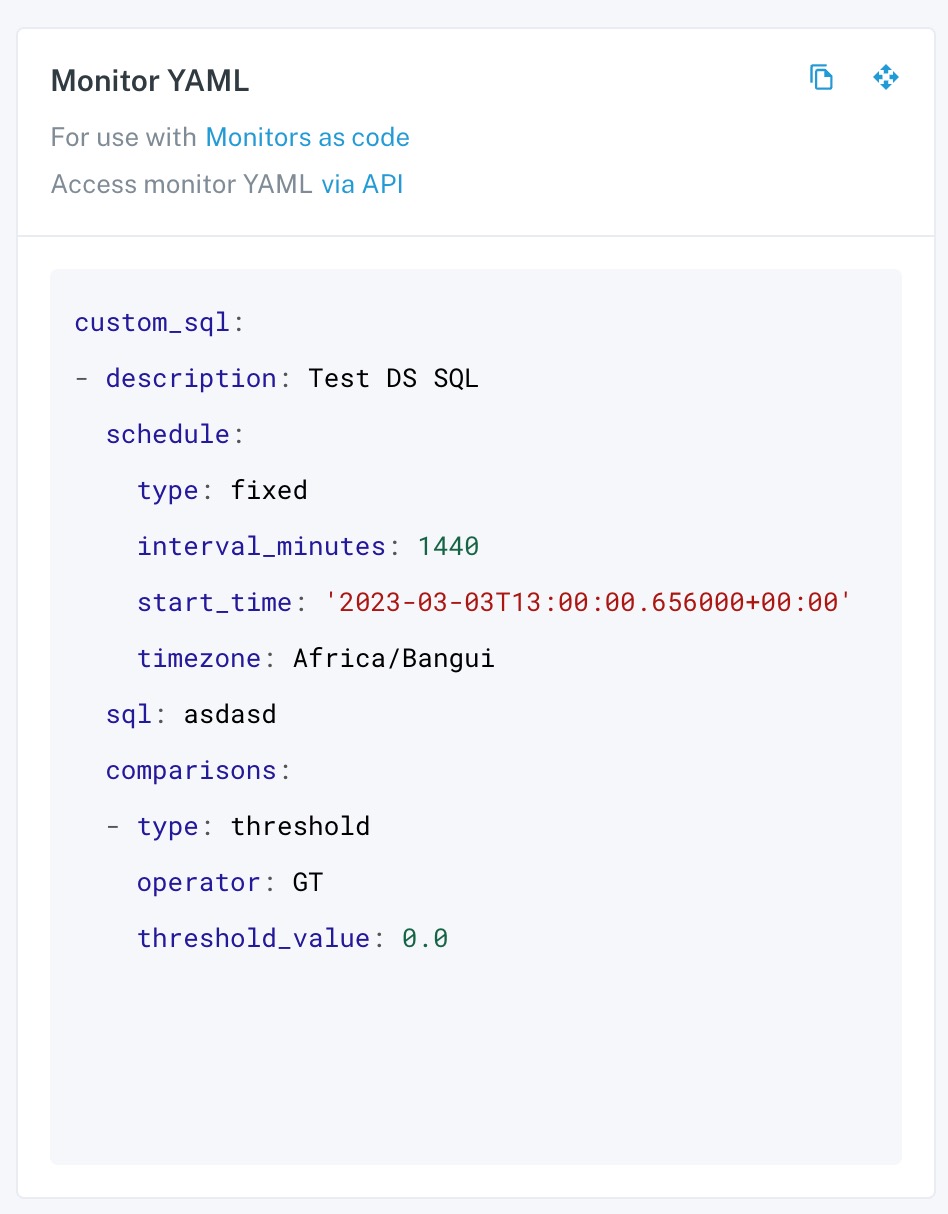

If you want to get a head start on setting up monitors as code, visit the monitor details page of an existing monitor where you can find the YAML definition for that monitor's configuration.

Below is a screenshot of the YAML definition for an existing monitor within Monte Carlo.

Developing and testing locally

Once the Monte Carlo project is setup with a montecarlo.yml and at least one monitor definition in a separate .yml file, you can use the Monte Carlo CLI to apply them.

To apply monitor configuration to MC run:

montecarlo monitors apply --namespace <namespace>Note in the above command, a namespace parameter is supplied. Namespaces are required for all monitors configured via Monitors as Code as they make it easier to organize and manage monitors at scale. Namespaces can either be defined by passing a --namespace argument in the CLI command or defined within the .yml files (see above for more details).

The apply command behaves as follows:

- MC will search for all monitor configuration elements in the project, both in standalone and embedded in DBT schema files. All monitor configuration elements will be concatenated into a single configuration template.

- MC will apply the configuration template to your MC account:

- Any new monitors defined since last apply will be created

- Any previously defined monitors present in current configuration template will be updated if any attributes have changed

- Any previously defined monitors absent from current configuration template will be deleted

Namespaces

You can think of namespaces like Cloudformation stack names. It’s a logical separation of a collection of resources that you can define. Monitors from different namespaces are isolated from each other.

Some examples of why this is useful -

- You have multiple people (or teams) working on managing monitors and don’t want to conflict or override configurations.

- You want to manage different groups monitors in different pipelines (e.g. dbt models in CI/CD x & non-dbt models in CI/CD y).

Namespaces can also be defined within the montecarlo.yml file or within individual monitor definitions.

Namespaces are currently limited to 2000 resources. Support for this starts in CLI version 0.52.0, so please upgrade your CLI version if you are running into timeouts while applying changes.

To delete (destroy) a namespace:

montecarlo monitors delete --namespace <namespace>This will delete all monitors for a given namespace.

Dry Runs

The

applycommand also supports a--dry-runargument which will dry run the configuration update and report each operation. Using this argument just shows planned changes but doesn't apply them.

Integrating into your CI/CD pipeline

Deploying monitors within a continuous integration pipeline is straightforward. Once changes are merged into your main production branch, configure your CI pipeline to install the montecarlodata CLI:

pip install montecarlodataAnd run this command:

MCD_DEFAULT_API_ID=${MCD_DEFAULT_API_ID} \

MCD_DEFAULT_API_TOKEN=${MCD_DEFAULT_API_TOKEN} \

montecarlo monitors apply \

--namespace ${MC_MONITORS_NAMESPACE} \

--project-dir ${PROJECT_DIR} \

--auto-yes

Skipping confirmationsThe

--auto-yesoption will disable any confirmation prompts shown by the command and should only be used on CI, where there's no interaction. Otherwise we recommend not including the--auto-yesso you can review what changes will be applied before confirming.

These environment variables need to be populated:

MCD_DEFAULT_API_ID: Your Monte Carlo API IDMCD_DEFAULT_API_TOKEN: Your Monte Carlo API TokenMC_MONITORS_NAMESPACE: Namespace to apply monitor configuration toPROJECT_DIR: If themontecarlocommand is not run within your Monte Carlo project directory, you can specify it here. Optional.

Example projects with CI/CD

For some examples of monitors as code projects with CI/CD setup, checkout these repositories:

- Standalone Monte Carlo project

- Basic DBT project with monitors (GitLab)

- Basic DBT project with monitors (GitHub)

Monitor configuration reference

Common properties

name: Uniquely identifies the monitor in the namespace. The name is necessary for us to track which monitor the definition maps to, so we can detect what changed or if it's a new monitor. If we stop seeing a given monitorname, we'll delete that monitor.warehouse(previous name:resource): Optional warehouse name can be set here to override the default warehouse set inmontecarlo.yml. Formetric_comparisonmonitors, you can usewarehouseinsourceandtargetinstead.description: Friendly description of the monitor.tags: Optional list of name/value tags to associate to the monitor.notes: Additional context for the monitor.audiences(previous name:labels): Optional list of audiences associated with the monitor.failure_audiences: Optional list of audiences to notify about run failures for this monitor.priority(previous name:severity): Optional, pre-set the priority of alerts generated by this monitor. Only use 'P1' through 'P5' as custom values will be rejected.data_quality_dimension: Optional, data quality dimension for the monitor. Options are:ACCURACY,COMPLETENESS,CONSISTENCY,TIMELINESS,VALIDITY,UNIQUENESS.notify_run_failure(deprecated): Previously controlled whether run failures would notify monitor audiences. Failure notifications are now handled directly via thefailure_audiencesfield.

Tables

tableandtablesfields passed in the config should contain valid full table ids. If the table doesn't exist in the assets page the validation will fail. Sometimes the catalog service takes time to detect newer tables, you can wait for the tables to be detected and then create monitors for them or (not recommended) you can apply the monitor config with--create-non-ingested-tablesoption to force create the tables along with the monitor. These tables will be marked deleted if they are not detected later on.

Table Monitor

Table monitors are only available for some accounts as we complete the rollout of this feature. See Table Monitors Migration.

Defined under the table property.

table:

- name: <string> # required

warehouse: <string> # optional - override default warehouse

description: <string> # required

asset_selection:

databases: # optional - pick only specific databases

- name: <string>

schemas: # optional - pick only specific schemas

- <string>

filters: # optional

- # list of filters, see below

exclusions: # optional

- # list of exclusions, see below

alert_conditions: # optional

- # list of metrics to monitor, see below

audiences: # optional

- <string>

priority: <string> # optional

tags: # optional

- name: <string>

value: <string>

data_quality_dimension: <string> # optional

domain: <string> #optionalfiltersandexclusions: a list of the following- Table name condition:

table_name: the pattern to matchtable_name_operator: one ofSTARTS_WITH,ENDS_WITH,CONTAINS,MATCH_PATTERN. Patterns are globbing patterns where*can be used to match 'any text'.

- Tag condition:

table_tags: list of table tag namestable_tags_operator: one ofHAS_ANYorHAS_ALL.

- Activity condition:

- One of the following followed by the number of days:

read_write_daysread_dayswrite_days

- For exclusion rules, you must also include the

negated: trueproperty. id: pass in a string id for referencing an alert condition in case of errors.

- One of the following followed by the number of days:

- Table name condition:

alert_conditions: a list of metrics to monitor. Must be one of:metric: last_updated_onmetric: total_row_countmetric: total_row_count_last_changed_onmetric: schema

domain: the domain to associate with the table monitor. This controls which assets are available for inclusion and which users can access the monitor based on their domain assignments.

Validation Monitor

Defined under the validation property.

validation:

- name: <string> # required

warehouse: <string> # optional - override default warehouse

connection_name: <string> # optional - override default connection name

timeout: <int> # optional - only supported on some warehouses

description: <string> # required

notes: <string> # optional

schedule:

interval_minutes: <integer>

start_time: <date as isoformatted string>

timezone: <timezone> # optional - select regional timezone for daylight savings ex. America/Los_Angeles

dynamic_schedule_tables: # optional - used to specify table for dynamic schedule

- <string> # accepts just one table.

data_source:

table: <string> # optional - need to pass either table or sql

sql: <string> # optional - need to pass either table or sql

alert_conditions:

conditions:

- id: <string> # optional helps to reference error in alert conditions

value: # optional - use this if type is set to UNARY

- field: <string>

left: # optional - use this if type is set to BINARY

- field:

right: # optional - use this if type is set to BINARY

- literal: <string> or <integer> or <date> or <timestamp>

sql: <string> # optional - use this if type is set to SQL

predicate: # optional - use this if type is set to UNARY or BINARY

name: <string>

type: <string> # UNARY or BINARY or SQL

operator: <string> # AND or OR

type: GROUP

event_rollup_count: <integer> # optional - enable to only send notifications every X incidents

event_rollup_until_changed: <boolean> # optional - enable to send subsequent notifications if breach changes, default: false

audiences: # optional

- <string>

failure_audiences: # optional

- <string>

priority: <string> # optional

tags: # optional

- name: <string>

value: <string>

data_quality_dimension: <string> # optionalconnection_name: Specify the connection (also known as query-engine) to use. Obtain the warehouse UUID via thegetUserAPI as described here. Then obtain names of the connections in the warehouse via the[getWarehouseConnections](https://apidocs.getmontecarlo.com/#query-getWarehouseConnections)API call. Use["sql_query"]as thejobTypeparameter in thegetWarehouseConnectionsAPI call.timeout: Timeout in seconds for the query. Defaults to 15 minutes. Only supported on Snowflake.data_source: Need to pass eithertableorsqltable: MC global table ID (format<database>:<schema>.<table name>)sql: SQL for the data source (generallyselect * from table_name)

alert_condition:type: GROUPoperator: AND or OR (default is AND)conditions: array of conditions. you can pass 4 types of conditionsid: pass in a string id for referencing an alert condition in case of errors.

- UNARY:

type: UNARYpredicate: refer the available UNARY predicate list for different field data types.name: predicate namenegated: set to true if you want the condition to be negated.

value: users can pass multiple fieldsfield: pass in a field name

field: pass in a field name that exists in thedata_sourceprovided above

- BINARY:

type: BINARYpredicate: refer the available BINARY predicate list for different field data types.name: predicate namenegated: set to true if you want the condition to be negated.

left: users can pass multiple fieldsfield: pass in a field name

right: users can pass multiple fieldsliteral: pass in integer or string or date or timestamp or boolean as a string

field: pass in a field name that exists in thedata_sourceprovided above

- SQL

type: SQLsql: sql expression likefield_name < 100

- GROUP: We can also pass Group as a condition in itself. (see examples below)

predicates:

UNARY:

- NUMBER:

null,is_zero,is_negative,is_between_0_and_1,is_between_0_and_100,is_nan - STRING:

null,empty_string,all_spaces,containing_spaces,null_string,is_uuid,us_zip_code,us_ssn,us_state_code,us_phone_number,can_sin,ca_postal_code,fr_insee_code,fr_postal_code,de_postal_code,de_tax_id,ie_ppsn,is_postal_code,it_postal_code,it_fiscal_code,es_dni,es_postal_code,uk_postal_code,uk_nino,nl_postal_code,nl_bsn,tr_postal_code,tr_id_no,ch_oasi,pl_postal_code,pl_pesel,email,timestamp_iso_8061,timestamp_mm-dd-yyyy,timestamp_dd-mm-yyyy,timestamp_mm/dd/yyyy,timestamp_dd/mm/yyyy,true_string,false_string - BOOLEAN:

null - DATE:

null,in_past,in_future,today,yesterday,in_past_7_days,in_past_30_days,in_past_365_days,in_past_calendar_month,in_past_calendar_week,sunday,monday,tuesday,wednesday,thursday,friday,saturday,weekday - TIMESTAMP:

in_past,in_future,today,yesterday,in_past_7_days,in_past_30_days,in_past_365_days,in_past_calendar_month,in_past_calendar_week,sunday,monday,tuesday,wednesday,thursday,friday,saturday,weekday

BINARY:

- NUMBER:

equal,greater_than,greater_than_or_equal,less_than,less_than_or_equal,in_set - STRING:

equal,contains,starts_with,ends_with,matches_regex,in_set - BOOLEAN:

equal - DATE:

equal,greater_than,greater_than_or_equal,less_than,less_than_or_equal - TIMESTAMP:

equal,greater_than,greater_than_or_equal,less_than,less_than_or_equal

Comparison Monitor

Defined under the metric_comparison property.

metric_comparison:

- name: <string> # required

description: <string> # required

notes: <string> # optional

source:

- table: <string> # optional - need to pass either table or sql

sql: <string> # optional - need to pass either table or sql

warehouse: <string> # optional - override default warehouse

connection_name: <string> # optional - override default connection name

timeout: <int> # optional - only supported on some warehouses

where_condition: <string> # optional

segment_fields:

- <string> # optional - can only contain one field

segment_sql:

- <string> # optional

target:

- table: <string> # optional - need to pass either table or sql

sql: <string> # optional - need to pass either table or sql

warehouse: <string> # optional - override default warehouse

connection_name: <string> # optional - override default connection name

timeout: <int> # optional - only supported on some warehouses

where_condition: <string> # optional

segment_fields:

- <string> # optional - can only contain one field

segment_sql:

- <string> # optional

alert_conditions:

- metric: <string> # metric name, see below for allowed values

custom_metric: # custom metric (when a metric name is not used)

- display_name: <string> # required

sql_expression: <string> # required

target_sql_expression: <string> # required

source_field: <string> # optional - field name

target_field: <string> # optional - field name

threshold_value: <float> # used for operators besides AUTO and range operators

is_threshold_relative: <boolean> # optional - default is false

schedule: # optional - fixed schedule by default

- type: <string> # required - fixed, dynamic, cron, or manual

interval_minutes: <integer> # required if fixed

interval_crontab:

- <string>

start_time: <date as isoformatted string>

timezone: <timezone> # optional - select regional timezone for daylight savings ex. America/Los_Angeles

dynamic_schedule_tables: # optional - used to specify table for dynamic schedule

- <string> # accepts just one table.

audiences: # optional

- <string>

failure_audiences: # optional

- <string>

priority: <string> # optional

tags: # optional

- <string>

data_quality_dimension: <string> # optionalsourceandtarget: The data sources to compare. Must pass eithertableorsqltable: MC global table ID (format<database>:<schema>.<table name>)sql: SQL for the data source (generallyselect * from table_name)connection_name: Specify the connection (also known as query-engine) to use. Obtain the warehouse UUID via thegetUserAPI as described here. Then obtain names of the connections in the warehouse via the[getWarehouseConnections](https://apidocs.getmontecarlo.com/#query-getWarehouseConnections)API call. Use["sql_query"]as thejobTypeparameter in thegetWarehouseConnectionsAPI call.timeout: Timeout in seconds for the query. Defaults to 15 minutes. Only supported on Snowflake.where_condition: SQL condition to use to filter the query results. Must not include theWHEREclause, which will be added automatically.segment_fields: List of fields used to segment the monitor metrics. Only supports 1 field. The field must exist in the table. Must use eithersegment_fieldsorsegment_sqlor neither. Enables Monitoring by Dimension.segment_sql: SQL expression used to segment the monitor metrics. Supports 1 custom SQL expression. Must use eithersegment_fieldsorsegment_sqlor neither. Enables Monitoring by Dimension.

alert_conditions: List of configurations for metrics, fields, and breach conditions. A field may only be included once in a monitor. See section below for details.scheduletype: One offixed(default),dynamic,cron,manualinterval_minutes: For fixed, how frequently to run the monitorinterval_crontab: How frequently to run the monitor (using a list of CRON expressions, check example below).start_time: When to start the schedule. If unspecified, for fixed schedules, then start sometime within the next hour.timezone: Optional - select regional timezone for daylight savings ex. America/Los_Angelesdynamic_schedule_tables: - you need to pass anMC global table IDwhich belongs to the same warehouse as the monitor. Ideally the MC global table ID should be one of tables passed in source or target.

Comparison Monitor alert conditions

operator: defaults to and only acceptsGT. We compare if the absolute delta value is greater than threshold and raise error.metric: See the list of available metrics for full details. Use the value from theMaC Namecolumn in this field.source_fieldandtarget_field:The source field name and target field name that should be monitored for this metric, operator, and threshold. The field types must be compatible with the metric.- Each field may only be included once in the monitor, regardless of metric.

- The row count metric may only be used once in the monitor.

- When using a custom metric,

source_fieldandtarget_fieldshould not be included; the fields used should be included directly in the SQL expression.

custom_metric: Allows defining a custom metric based on a source SQL expression and target SQL expression instead of a predefined metric indicated inmetric.threshold_value: Max acceptable delta between source and targetis_threshold_relative: Whether or notthreshold_valueis a relative vs absolute threshold.is_threshold_relative: truewould be a percentage measurement,is_threshold_relative: falsewould be a absolute measurement. Relative means thethreshold_valuewill be treated as a percentage value, Absolute means thethreshold_valuewill be treated as an actual count.

Metric Monitor

Defined under the metric property.

metric:

- name: <string> # required

warehouse: <string> # optional - override default warehouse

connection_name: <string> # optional - override default connection name

timeout: <int> # optional - only supported on some warehouses

description: <string> # required

notes: <string> # optional

data_source:

table: <string> # optional - need to pass either table or sql

sql: <string> # optional - need to pass either table or sql

where_condition: <string> # optional

aggregate_time_field: <string> # optional

aggregate_time_sql: <string> # optional

aggregate_by: day | hour # optional

segment_fields:

- <string> # optional

segment_sql:

- <string> # optional

alert_conditions:

- metric: <string> # metric name, see below for allowed values

custom_metric: # custom metric (when a metric name is not used)

display_name: <string>

sql_expression: <string>

operator: <string> # AUTO, GT, LT, etc. see below

fields: # optional - monitor specific field list instead of all fields

- <string> # field name

threshold_value: <float> # used for operators besides AUTO, AUTO_HIGH, AUTO_LOW, and range operators

lower_threshold: <float> # used only for range operators

upper_threshold: <float> # used only for range operators

sensitivity: <str> # optional - one of low, medium, or high

collection_lag: <int> # time to delay collection in hours

schedule: # optional - by default, fixed schedule with interval_minutes=720 (12h)

type: <fixed, or dynamic> # required

interval_minutes: <integer> # required if fixed

start_time: <date as isoformatted string>

timezone: <timezone> # optional -- select regional timezone for daylight savings ex. America/Los_Angeles

dynamic_schedule_tables: # optional - used to specify table for dynamic schedule

- <string> # accepts just one table.

audiences: # optional

- <string>

failure_audiences: # optional

- <string>

priority: <string> # optional

tags: # optional

- name: <string>

value: <string>

data_quality_dimension: <string> # optionaldata_source: Need to pass eithertableorsqltable: MC global table ID (format<database>:<schema>.<table name>)sql: SQL for the data source (generallyselect * from table_name)

alert_conditions: List of configurations for metrics, fields, and breach conditions. A field may only be included once in a monitor for a given metric. See section below for details.sensitivity: Set sensitivity to one ofhigh,mediumorlow. The sensitivity determines how lax are the thresholds generated by machine learning when using theAUTOoperations in the alert conditions.segment_fields: List of fields or SQL expressions used to segment the monitor metrics. Supports up to 5 fields. The fields must exist in the table. Must use eithersegment_fieldsorsegment_sqlor neither. Enables Monitoring by Dimension.segment_sql: SQL expression used to segment the monitor metrics. Supports 1 custom SQL expression. Must use eithersegment_fieldsorsegment_sqlor neither. Enables Monitoring by Dimension.aggregate_time_field: Timestamp field to group the metrics by. Must use eitheraggregate_time_fieldoraggregate_time_sqlor neither.aggregate_time_sql: Arbitrary SQL expression to be used as the timestamp field, e.g.DATETIME(created). Must use eitheraggregate_time_fieldoraggregate_time_sqlor neither.aggregate_by: Eitherhourorday. When usinghour, metrics will be aggregated in hourly buckets according to the provided field or expression. The field or expression must be of a timestamp type. When usingday, metrics will be aggregated in daily buckets according to the provided field or expressionwhere_condition: SQL condition to use to filter the query results. Must not include theWHEREclause, which will be added automatically.collection_lag: Time to delay metric collection, in hours. Only relevant when metrics are aggregated by time. Must be a multiple of 24 when using daily aggregation.scheduletype: One offixed(default) ordynamicinterval_minutes: For fixed, how frequently to run the monitorstart_time: When to start the schedule. If unspecified, for fixed schedules, then start sometime within the next hour.timezone: Optional - select regional timezone for daylight savings ex. America/Los_Angelesdynamic_schedule_tables: - you need to pass anMC global table IDwhich belongs to the same warehouse as the monitor. Ideally the MC global table ID should be one of tables passed in the data source.

connection_name: Specify the connection (also known as query-engine) to use. Obtain the warehouse UUID via thegetUserAPI as described here. Then obtain names of the connections in the warehouse via the[getWarehouseConnections](https://apidocs.getmontecarlo.com/#query-getWarehouseConnections)API call. Use["sql_query"]as thejobTypeparameter in thegetWarehouseConnectionsAPI call.timeout: Timeout in seconds for the query. Defaults to 15 minutes. Only supported on Snowflake.

Metric monitor alert conditions

operator: acceptsAUTO,AUTO_HIGH,AUTO_LOW,GT,GTE,LT,LTE,EQ,NEQ,OUTSIDE_RANGE,INSIDE_RANGE.AUTOuses ML to detect both anomalously high and low values.AUTO_HIGHuses ML to detect only anomalously high values.AUTO_LOWuses ML to detect only anomalously low values.- All other operators require an explicit threshold.

fields: List of field names that should be monitored for this metric, operator, and threshold. This field is optional and defaults to all fields in the table. The field type must be compatible with the metric.- Each field may only be included once in the monitor for a given metric (including as part of an "all fields" metric).

- When using a custom metric,

fieldsshould not be included; the fields used should be included directly in the SQL expression.

threshold_value: Breach threshold for the operator. Not used forAUTO,AUTO_HIGH,AUTO_LOW,OUTSIDE_RANGE, orINSIDE_RANGE. For rate metrics, ensure the threshold is between 0 and 1 (not 0%-100%).lower_threshold: Lower end of threshold range for operatorsOUTSIDE_RANGEandINSIDE_RANGEupper_threshold: Upper end of threshold range for operatorsOUTSIDE_RANGEandINSIDE_RANGEmetric: See the list of available metrics for full details, including which field types are compatible. Use the value from theMaC Namecolumn in this field.custom_metric: Allows defining a custom metric based on a SQL expression instead of a predefined metric indicated inmetric.

JSON Schema Monitor

Defined under the json_schema property.

json_schema:

- name: <string> # required

warehouse: <string> # optional - override default warehouse

connection_name: <string> # optional - override default connection name

timeout: <int> # optional - only supported on some warehouses

description: <string> # required

notes: <string> # optional

table: <string> # required

field: <string> # required

timestamp_field: <string> # optional

timestamp_field_expression: <string> # optional

where_condition: <string> # optional

schedule: # optional - by default, fixed schedule with interval_minutes=720 (12h)

type: <fixed, or dynamic> # required

interval_minutes: <integer> # required if fixed

start_time: <date as isoformatted string>

timezone: <timezone> # optional - select regional timezone for daylight savings ex. America/Los_Angeles

audiences: # optional

- <string>

priority: <string> # optional

tags: # optional

- name: <string>

value: <string>

data_quality_dimension: <string> # optionaltable: MC global table ID (format<database>:<schema>.<table name>field: Field in table to monitortimestamp_field: Timestamp fieldtimestamp_field_expression: Arbitrary SQL expression to be used as timestamp field, e.g.DATE(created). Must use eithertimestamp_fieldortimestamp_field_expressionor neither.where_condition: SQL snippet of where condition to add to queryscheduletype: One offixed(default), ordynamicinterval_minutes: For fixed, how frequently to run the monitorstart_time: When to start the schedule. If unspecified, for fixed schedules, then start sometime within the next hour.timezone: Optional - select regional timezone for daylight savings ex. America/Los_Angeles

connection_name: Specify the connection (also known as query-engine) to use. Obtain the warehouse UUID via thegetUserAPI as described here. Then obtain names of the connections in the warehouse via the getWarehouseConnections API call. Use["json_schema"]as thejobTypeparameter in thegetWarehouseConnectionsAPI call.timeout: Timeout in seconds for the query. Defaults to 15 minutes. Only supported on Snowflake.

Custom SQL monitor

Defined under the custom_sql property.

custom_sql:

- name: <string> # required

warehouse: <string> # optional - override default warehouse

connection_name: <string> # optional - override default connection name

timeout: <int> # optional - only supported on some warehouses

description: <string> # required

notes: <string> # optional

sql: <string> # required

alert_conditions: <comparison> # required

variables: <variable values> # optional

schedule:

type: <string> # must be 'fixed' or 'manual'

start_time: <date as isoformatted string>

interval_minutes: <integer>

interval_crontab:

- <string>

dynamic_schedule_tables: # optional - used to specify table for dynamic schedule

- <string> # accepts just one table.

timezone: <timezone> # optional - select regional timezone for daylight savings ex. America/Los_Angeles

event_rollup_count: <integer> # optional - enable to only send notifications every X incidents

event_rollup_until_changed: <boolean> # optional - enable to send subsequent notifications if breach changes, default: false

audiences: # optional

- <string>

failure_audiences: # optional

- <string>

priority: <string> # optional

tags: # optional

- name: <string>

value: <string>

data_quality_dimension: <string> # optionalsql: SQL to get the results for the monitor.query_result_type: Optional, can be set toSINGLE_NUMERICto make the monitor use a value-based thresholdsampling_sql: Optional custom SQL query to be run on breach (results will be displayed in Incident IQ to help with investigation).alert_conditions: See alert conditions belowvariables: See variables belowscheduletype: Can befixed(default) ormanualordynamic.Manualwould be used for Custom SQL monitors used for Circuit Breakers.interval_minutes: How frequently to run the monitor (in minutes).interval_crontab: How frequently to run the monitor (using a list of CRON expressions, check example below).start_time: When to start the schedule. If unspecified, for fixed schedules, then start sometime within the next hour.timezone: Optional - select regional timezone for daylight savings ex. America/Los_Angelesdynamic_schedule_tables: - you need to pass anMC global table IDwhich belongs to the same warehouse as the monitor. Ideally the MC global table ID should belong to one of the same tables passed in the sql.

event_rollup_count: Optional - a Reduce Noise option to only send notifications every X incidentsevent_rollup_until_changed: Optional - a Reduce Noise option to send subsequent notifications if breach changesconnection_name: Specify the connection (also known as query-engine) to use. Obtain the warehouse UUID via thegetUserAPI as described here. Then obtain names of the connections in the warehouse via the getWarehouseConnections API call. Use["sql_query"]as thejobTypeparameter in thegetWarehouseConnectionsAPI call.timeout: Timeout in seconds for the query. Defaults to 15 minutes. Only supported on Snowflake.

Custom SQL monitor alert conditions

alert_conditionsare definitions of breaches, not expected return values. This section would be where you would define the logic for when to get alerted about anomalous behavior in your monitor. For example, if you make a Custom SQL monitor and pick:

type:thresholdoperator:GTthreshold_value: 100When Monte Carlo runs your monitor and the return results are greater than 100, we will fire an alert to any routes configured to be notified about breaches to this monitor.

type:threshold(default),dynamic_thresholdorchange. Ifthreshold,threshold_valuebelow is an absolute value. Ifdynamic_thresholdno threshold is needed (it will be determined automatically). Ifchange,threshold_valueas change from the historical baseline.operator: One ofEQ,NEQ,GT,GTE,LT,LTE,AUTO,AUTO_HIGH,AUTO_LOW. Operator of comparison,=,≠,>,≥,<,≤respectively.AUTO,AUTO_HIGH, andAUTO_LOWare used only fordynamic_threshold(AUTOis used by default, so does not need to be provided).AUTOuses ML to detect both anomalously high and low values.AUTO_HIGHuses ML to detect only anomalously high values.AUTO_LOWuses ML to detect only anomalously low values.- All other operators require an explicit threshold.

threshold_value: Threshold value. Should not be provided fordynamic_threshold.baseline_agg_function: If type =change, the aggregation function used to aggregate data points to calculate historical baseline. One ofAVG,MAX,MIN.baseline_interval_minutes: If type =change, the time interval in minutes (backwards from current time) to aggregate over to calculate historical baseline.is_threshold_relative: If type =change, whether or notthreshold_valueis a relative vs absolute threshold.is_threshold_relative: truewould be a percentage measurement.is_threshold_relative: falsewould be a numerical measurement. Relative means the threshold_value will be treated as a percentage value. Absolute means the threshold_value will be treated as an actual count of rows.threshold_sensitivity: If type =dynamic_threshold, one oflow,medium, orhighmay be provided to adjust the sensitivity of the automatic thresholds. Defaults tomedium.lowresults in fewer breaches whilehighresults in more breaches.

Custom SQL monitor variables

You can use variables to execute the same query for different combinations of values. Variables are defined as {{variable_name}} inside the query text. Then, you can define one or more values for each variable, and all combinations will be tested.

Here is an example defining the same query for several tables and conditions (4 queries will be executed):

custom_sql:

- sql: |

select foo from {{table}} where {{cond}}

variables:

table:

- project:dataset.table1

- project:dataset.table2

cond:

- col1 > 1

- col2 > 2You can also define runtime variables, which need to be provided when executing the monitor. Runtime variables are used to implement Circuit breakers. Runtime variables can only use manual scheduling, as you must provide their values before the monitor can be executed.

To declare a runtime variable, use the following format:

custom_sql:

- sql: |

select foo from {{table}} where field > {{threshold}}

variables:

threshold:

# variable is runtime

runtime: true

table:

# static variables must use this format when mixed with runtime variables

values:

- project:dataset.table1

- project:dataset.table2

schedule:

type: manualWhen running that monitor, you will need to provide a value for threshold as explained in Adding circuit breakers to your pipeline . The monitor will run two queries, one for each table, using the same provided threshold value.

Runtime variables and monitor tablesWe parse the SQL query to associate tables to the Custom SQL monitor. If we fail to parse the query, or the referenced tables have not yet been ingested, we won't associate any tables with the monitor.

When using runtime variables, we cannot predictably parse the query as the variable might be filled with any value. We will still aim to parse it, by replacing any runtime variables with a

''.For example, if the query is:

SELECT * FROM prod.analytics.invoices WHERE amount > {{amount}}we will attempt to parse it asSELECT * FROM prod.analytics.invoices WHERE amount > '{{amount}}', which in general provides better results.You can check which tables are inferred from any given query by using the Query Parser.

Legacy Freshness Monitor

Freshness Rules are being deprecatedCustomers can no longer create new Freshness Rules through the UI in Monte Carlo. Instead, users can now manage freshness thresholds (whether automatic or manual) in a single place in Assets. See more details here.

Existing Freshness Rules will continue to function, and can still be created through Monitors as Code until further notice. However, we discourage new usage, as they will not receive updates or enhancements.

Defined under the freshness property.

freshness:

- name: <string> # required

warehouse: <string> # optional - override default warehouse

description: <string> # required

notes: <string> # optional

table: <string> / tables: <list> # required

freshness_threshold: <integer> # required

schedule:

type: fixed # must be fixed

start_time: <date as isoformatted string>

interval_minutes: <integer>

interval_crontab:

- <string>

timezone: <timezone> # optional - select regional timezone for daylight savings ex. America/Los_Angeles

event_rollup_count: <integer> # optional - enable to only send notifications every X incidents

event_rollup_until_changed: <boolean> # optional - enable to send subsequent notifications if breach changes, default: false

audiences: # optional

- <string>

priority: <string> # optional

tags: # optional

- name: <string>

value: <string>

data_quality_dimension: <string> # optionaltable: MC global table ID (format<database>:<schema>.<table name>).tables: Instead oftable, can also usetablesto define a list of tables.freshness_threshold: Freshness breach threshold in minutes.scheduletype: Must befixed.interval_minutes: How frequently to run the monitor (in minutes).interval_crontab: How frequently to run the monitor (using a list of CRON expressions, check example below).start_time: When to start the schedule. If unspecified, for fixed schedules, then start sometime within the next hour.timezone: Optional - select regional timezone for daylight savings ex. America/Los_Angeles.

event_rollup_count: Optional - a Reduce Noise option to only send notifications every X incidents.event_rollup_until_changed: Optional - a Reduce Noise option to send subsequent notifications if breach changes.connection_name: Specify the connection (also known as query-engine) to use. Obtain the warehouse UUID via thegetUserAPI as described here. Then obtain names of the connections in the warehouse via the[getWarehouseConnections](https://apidocs.getmontecarlo.com/#query-getWarehouseConnections)API call. Use ["sql_query"] as the jobType parameter in thegetWarehouseConnectionsAPI call.

Legacy Volume Monitor

Volume Rules are being deprecatedCustomers can no longer create new Volume Rules through the UI in Monte Carlo. Instead, users can now manage volume thresholds (whether automatic or manual) in a single place in Assets. See more details here.

Existing Volume Rules will continue to function, and can still be created through Monitors as Code until further notice. However, we discourage new usage, as they will not receive updates or enhancements.

Defined under the volume property.

volume:

- name: <string> # required

warehouse: <string> # optional - override default warehouse

description: <string> # required

notes: <string> # optional

table: <string> / tables: <list> # required

alert_conditions: <comparison> # required

volume_metric: <row_count or byte_count> # row_count by default

schedule:

type: fixed # must be fixed

start_time: <date as isoformatted string>

interval_minutes: <integer>

interval_crontab:

- <string>

timezone: <timezone> # optional - select regional timezone for daylight savings ex. America/Los_Angeles

event_rollup_count: <integer> # optional - enable to only send notifications every X incidents

event_rollup_until_changed: <boolean> # optional - enable to send subsequent notifications if breach changes, default: false

audiences: # optional

- <string>

failure_audiences: # optional

- <string>

priority: <string> # optional

tags: # optional

- name: <string>

value: <string>

data_quality_dimension: <string> # optionaltable: MC global table ID (format<database>:<schema>.<table name>.tables: Instead oftable, can also usetablesto define a list of tables.volume_metric: Must betotal_row_countortotal_byte_count— defines which volume metric to monitor.alert_conditions: See alert conditions below.scheduletype: Must be "fixed".interval_minutes: How frequently to run the monitor (in minutes).interval_crontab: How frequently to run the monitor (using a list of CRON expressions, check example below).start_time: When to start the schedule. If unspecified, for fixed schedules, then start sometime within the next hour.timezone: Optional - select regional timezone for daylight savings ex. America/Los_Angeles.

event_rollup_count: Optional - a Reduce Noise option to only send notifications every X incidentsevent_rollup_until_changed: Optional - a Reduce Noise option to send subsequent notifications if breach changesconnection_name: Specify the connection (also known as query-engine) to use. Obtain the warehouse UUID via thegetUserAPI as described here. Then obtain names of the connections in the warehouse via the[getWarehouseConnections](https://apidocs.getmontecarlo.com/#query-getWarehouseConnections)API call. Use ["sql_query"] as the jobType parameter in thegetWarehouseConnectionsAPI call.

Volume Monitor alert conditions

type:absolute_volumeorgrowth_volume.

If absolute_volume:

operator: One ofEQ,GTE,LTE. Operator of comparison,=,≥,≤respectively.threshold_lookback_minutes: if operator isEQ, the time to look back to compare with the current value.threshold_value: If operator isGTEorLTE, the threshold value

If growth_volume:

operator: One ofEQ,GT,GTE,LT,LTE. Operator of comparison,=,>,≥,<,≤respectively.baseline_agg_function: the aggregation function used to aggregate data points to calculate historical baseline. One ofAVG,MAX,MIN.number_of_agg_periods: the number of periods to use in the aggregate comparison.baseline_interval_minutes: the aggregation period length.min_buffer_value/max_buffer_value: the lower / upper bound buffer to modify the alert threshold.min_buffer_modifier_type/max_buffer_modifier_type: the modifier type of min / max buffer, can beMETRIC(absolute value) orPERCENTAGE.

Query Performance Monitor

Defined under the query_performance property.

query_performance:

- name: <string> # required

warehouse: <string> # optional - override default warehouse

description: <string> # required

notes: <string> # optional

audiences: # optional

priority: <string> # optional

tags: # optional

- name: <string>

value: <string>

data_quality_dimension: <string> # optional

selection:

- field_name: snowflake_warehouse # the criteria to select on

values:

- MCD_DEMO_DW # depends on field_name

operator: exclude | include

alert_conditions:

- threshold: 199.0

metric: runtime_change

baseline_agg_function: AVG

threshold_lookback_minutes: 10080

- threshold: 21600000.0

metric: runtime

- threshold: 2.0

metric: consecutive_breach_countselection: list of criteria to include or exclude queries from the monitorfield_name: the kind of criteria to definevalue: value to check the field againstoperator: eitherincludeorexclude

alert_conditions: when must the monitor trigger an alert. Is a list of three separate conditions:runtime_change: increase of query run time compared to an aggregation over the last query run times in a given time window (e.g average of last twelve hours). Threshold is a percentage.runtime: minimum run time to alert onconsecutive_breach_count: number of consecutive breaches of run time increases before the monitor alerts.

Creating the right "selection" and "alert_conditions"Performance monitors allow for a wide range of criteria, and the values might reference internal Monte Carlo IDs.

In order to create your Performance Monitors using Monitors as Code, you should create them from the UI and use the Generate YAML file at the bottom to get the proper

selectioncriteria andalert_conditionformat.

Legacy Metric Monitor

Legacy monitorStarting March 1, 2025, new monitors can no longer be created using the

field_healthproperty.Use the

metricproperty to define new Metric Monitors instead.

Defined under the field_health property.

field_health: # use 'metric' monitor instead

- table: <string> # required

name: <string> # required

warehouse: <string> # optional - override default warehouse

description: <string> # required

notes: <string> # optional

segmented_expressions:

- <string> # Can be a field or a SQL expression

timestamp_field: <string> # optional

timestamp_field_expression: <string> # optional

where_condition: <string> # optional

lookback_days: <int> # optional

aggregation_time_interval: <one of 'day' or 'hour'> # optional

min_segment_size: <int> # optional -- by default, fetch all segment sizes

alert_condition:

- metric: <string> # metric name, see below for allowed values

custom_metric: # custom metric (when a metric name is not used)

display_name: <string>

sql_expression: <string>

operator: <string> # AUTO, GT, LT, etc. see below

fields: # optional -- monitor specific field list instead of all fields

- <string> # field name

threshold_value: <float> # used for operators besides AUTO, AUTO_HIGH, AUTO_LOW, and range operators

lower_threshold: <float> # used only for range operators

upper_threshold: <float> # used only for range operators

schedule: # optional - by default, fixed schedule with interval_minutes=720 (12h)

type: <fixed, or dynamic> # required

interval_minutes: <integer> # required if fixed

start_time: <date as isoformatted string>

timezone: <timezone> # optional - select regional timezone for daylight savings ex. America/Los_Angeles

audiences: # optional

- <string>

priority: <string> # optional

tags: # optional

- name: <string>

value: <string>

data_quality_dimension: <string> # optionalClick here to see the parameters of that property.

Dimension Tracking Monitor

As of October 2024, new Dimension Tracking monitors can no longer be created. Instead, use the Relative Row Count metric in a metric monitor.

Also, as of May 2025, all Dimension Tracking monitors created before October 2024 have been automatically migrated to Relative Row Count metric monitors.

If you have a dimension_tracking: section in your MaC configuration, you can use the command montecarlo monitors export-migrated-dt to export the MaC configuration for these automatically migrated monitors. You can add these monitors to your metric: section and then delete the old dimension_tracking: section. Note that if you are happy with the migrated monitors as they are and do not need to change anything about them, then no action is required.

Example monitors

The best way to look at example YAML for monitors, is to create and export a monitor YAML using the Monte Carlo UI. That will let you experiment with different field values and combinations and quickly get the right YAML to use in your monitor definition.

Here are some examples for quick reference:

montecarlo:

validation:

- description: Simple Validation breach test

notes: Simple Validation breach test

audiences:

- audience_1

failure_audiences:

- audience_2

priority: P2 # optional

tags: # optional

- name: environment

value: production

data_quality_dimension: COMPLETENESS # optional

schedule:

interval_minutes: 60

start_time: '2024-06-26T04:35:02+00:00'

data_source:

sql: select * from project.dataset.table_name

alert_condition:

conditions:

- id: sql_id_1

sql: test_number < 100

- id: unary_id_1

predicate:

name: 'null'

negated: true

value:

- field: test_string

- id: binary_id_1

predicate:

name: equal

left:

- field: test_boolean

right:

- literal: 'true'

type: GROUP

operator: AND

metric:

- name: metric_1

data_source:

table: project:dataset.table_name

description: Test monitor

aggregate_by: day

aggregate_time_field: created_at

schedule:

interval_minutes: 60

audiences:

- audience_1

failure_audiences:

- audience_2

priority: P1 # optional

tags: # optional

- name: critical_table

value: sales_data

data_quality_dimension: UNIQUENESS # optional

alert_conditions:

# Breach for anomalous unique rate (ML-based) on any field

- operator: AUTO

metric: UNIQUE_RATE

# Breach if null rate exceeds 5% on any field

- operator: GT

metric: NULL_RATE

threshold_value: 0.05

# Breach if true rate <= 75% for these fields

- operator: LTE

metric: TRUE_RATE

threshold_value: 0.75

fields:

- boolean_field_1

- boolean_field_2

# Breach if max value is outside range [0,1] for these fields

- operator: OUTSIDE_RANGE

metric: NUMERIC_MAX

lower_threshold: 0.0

upper_threshold: 1.0

fields:

- numeric_field_1

- numeric_field_2

# Using a custom metric

- operator: LTE

custom_metric:

display_name: Unique text combination

sql_expression: COUNT(DISTINCT CONCAT(text_field_1, text_field_2))

threshold_value: 2

custom_sql:

- name: custom_sql_1

description: Test rule

sql: |

select foo from project.dataset.my_table

alert_conditions:

- operator: GT

threshold_value: 0

schedule:

interval_minutes: 60

start_time: "2021-07-27T19:00:00"

priority: P1 # optional

tags: # optional

- name: data_source

value: my_table

data_quality_dimension: ACCURACY # optionalUpdated 13 days ago