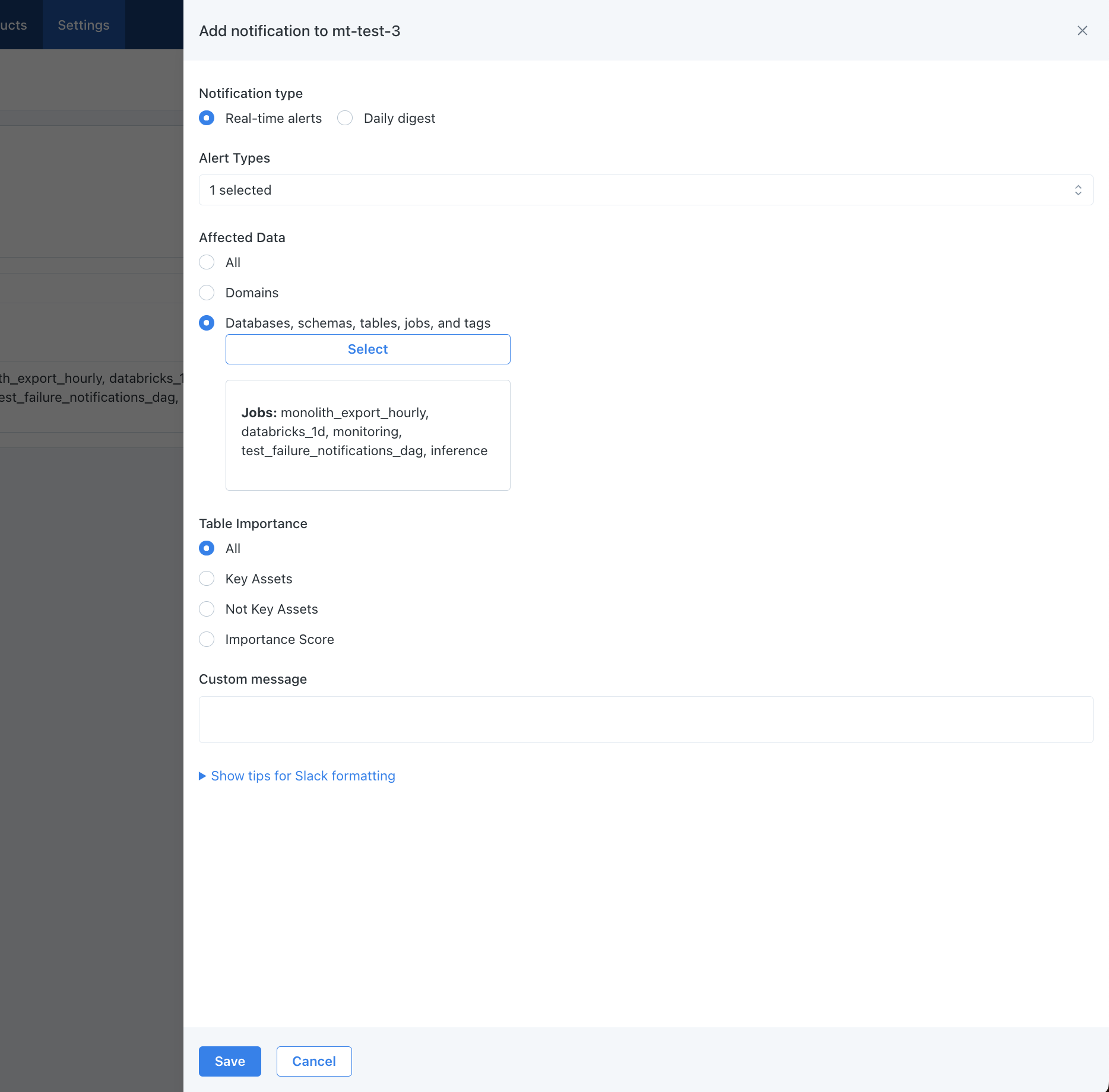

Users can now select specific Airflow DAGs to include in an audience, so only alerts on the selected DAGs are sent to that audience's notification channels. This helps reduce alert noise. Previously, an audience received alerts for either all Airflow DAGs or none.

Users can also select specific Airflow DAGs to include in a domain, and then use that domain to define an audience. This ensures users see only the DAGs relevant to them across the UI.
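As a rough illustration of the filtering behavior described above, the sketch below models how an alert on a given DAG might be matched to an audience. It is not Monte Carlo's API or implementation: the `Domain` and `Audience` classes, their fields, the `receives` method, and the DAG names are all hypothetical. It also assumes that an audience with no DAG filter receives alerts for every DAG.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Domain:
    """Hypothetical model of a domain: a named set of Airflow DAG ids."""
    name: str
    dag_ids: set[str] = field(default_factory=set)


@dataclass
class Audience:
    """Hypothetical model of an audience with optional DAG filtering."""
    name: str
    # Assumption: None means no DAG filter, so alerts for every DAG are sent.
    dag_ids: Optional[set[str]] = None
    # Assumption: an audience may be scoped to a domain instead of, or in
    # addition to, a direct DAG selection.
    domain: Optional[Domain] = None

    def receives(self, dag_id: str) -> bool:
        """Return True if an alert on dag_id should reach this audience."""
        if self.domain is not None and dag_id not in self.domain.dag_ids:
            return False
        if self.dag_ids is not None and dag_id not in self.dag_ids:
            return False
        return True


# Example DAG names are made up for illustration.
finance = Domain("finance", {"billing_daily", "invoices_hourly"})
direct = Audience("data-eng", dag_ids={"ingest_events"})
via_domain = Audience("finance-oncall", domain=finance)
unfiltered = Audience("platform")

print(direct.receives("ingest_events"))      # True
print(direct.receives("billing_daily"))      # False
print(via_domain.receives("billing_daily"))  # True
print(unfiltered.receives("billing_daily"))  # True
```

In this sketch, the `data-eng` audience is alerted only on its directly selected DAG, while `finance-oncall` inherits its DAG list from the `finance` domain.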

Docs: https://docs.getmontecarlo.com/docs/airflow#monte-carlo-notifications-of-airflow-failure-alerts