Unity Catalog

Alpha / early access

Prerequisites

To complete this guide, you will need admin rights in your Databricks workspace.

To connect Monte Carlo to the Databricks Unity Catalog (UC), follow these steps:

- Create a cluster in your Databricks workspace.

- Create an API key in your Databricks workspace.

- Provide service account credentials to Monte Carlo.

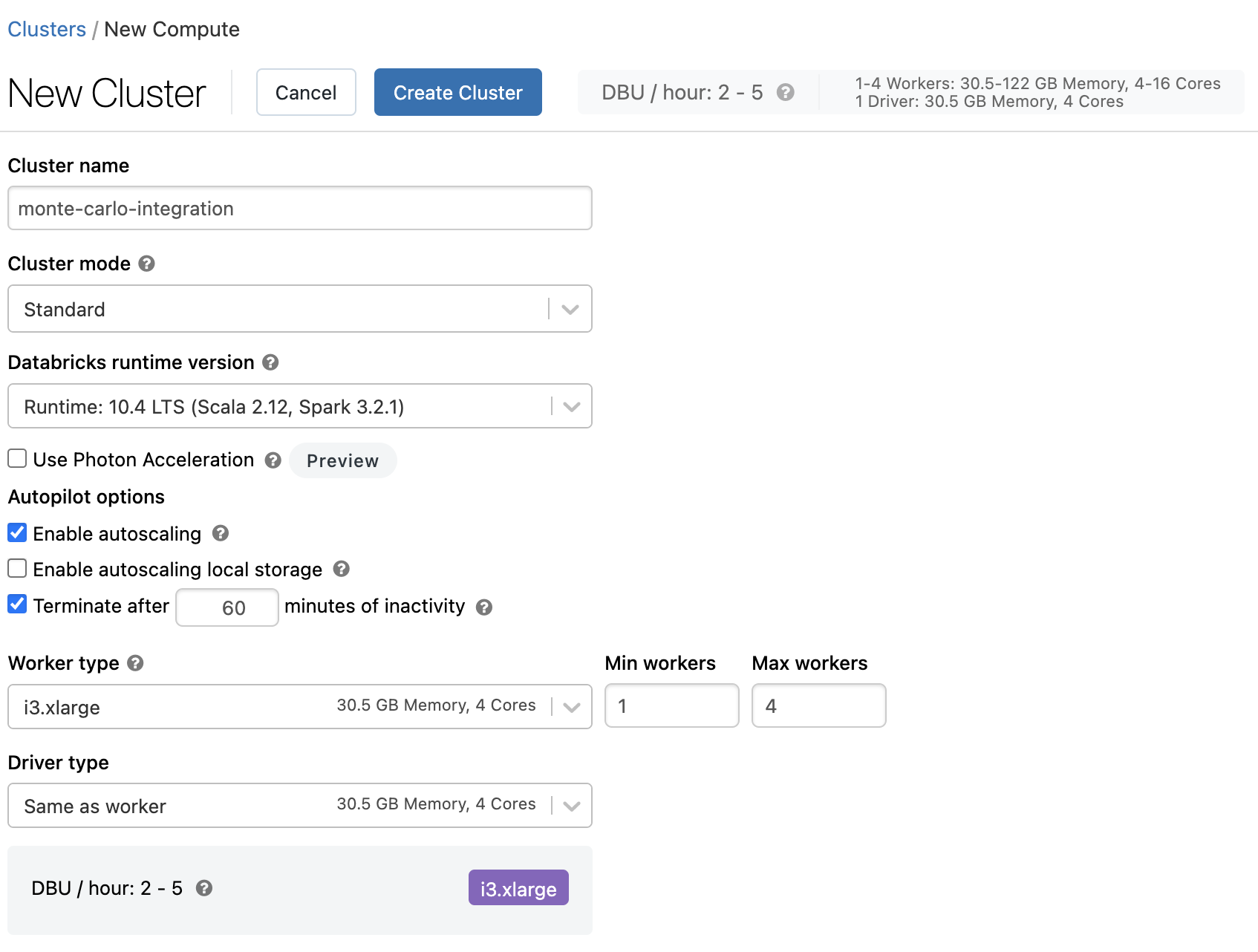

Create a Databricks cluster

Monte Carlo requires a Databricks runtime version with Spark >= 3.0 and at least one worker.
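The requirement above can be expressed as a small pre-flight check. This is only a sketch: the function names `parse_spark_version` and `meets_cluster_requirements` are hypothetical helpers introduced for illustration, not part of Monte Carlo or Databricks tooling, and the check assumes the Spark version is available as a plain string such as `3.3.0`.

```python
import re
from typing import Tuple

# Thresholds taken from the requirement stated in this guide.
MIN_SPARK = (3, 0)
MIN_WORKERS = 1


def parse_spark_version(version: str) -> Tuple[int, int]:
    """Extract (major, minor) from a Spark version string such as '3.3.0'."""
    match = re.match(r"(\d+)\.(\d+)", version.strip())
    if match is None:
        raise ValueError(f"unrecognized Spark version: {version!r}")
    return int(match.group(1)), int(match.group(2))


def meets_cluster_requirements(spark_version: str, num_workers: int) -> bool:
    """Return True if the cluster has Spark >= 3.0 and at least one worker."""
    spark_ok = parse_spark_version(spark_version) >= MIN_SPARK
    workers_ok = num_workers >= MIN_WORKERS
    return spark_ok and workers_ok
```

On a running cluster, the version string could be read from `spark.version` in a notebook and passed to `meets_cluster_requirements` together with the configured worker count.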

Are you also using an external metastore?

If you are using the built-in (i.e., central) Databricks Hive metastore, it is automatically supported when you provision a UC cluster. If you want to use UC with either the Glue Catalog or an external Hive metastore instead, please follow the dedicated guides for those metastores for additional cluster requirements.

- Follow these steps to create a UC-compatible all-purpose cluster in your workspace. For environments with 10,000 tables or fewer, Monte Carlo recommends using an `i3.2xlarge` node type. Otherwise, please reach out to your account representative for help right-sizing.

- Follow this guide to retrieve the cluster ID and start the cluster.

Cluster creation wizard example
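As an alternative to the wizard, the same cluster settings could in principle be submitted through the Databricks Clusters REST API. The sketch below only builds the request objects and does not send anything. It assumes the `/api/2.0/clusters/start` endpoint and the `cluster_name`, `spark_version`, `node_type_id`, and `num_workers` fields of the Clusters API. The helper names, workspace URL, and token are hypothetical placeholders. The payload deliberately omits any Unity Catalog access-mode setting, which should be taken from the cluster creation guide.

```python
import json
import urllib.request


def build_cluster_spec(cluster_name, spark_version,
                       node_type_id="i3.2xlarge", num_workers=1):
    """Build a cluster spec; spark_version is a Databricks runtime version key."""
    if num_workers < 1:
        raise ValueError("Monte Carlo requires at least one worker")
    return {
        "cluster_name": cluster_name,
        "spark_version": spark_version,
        "node_type_id": node_type_id,  # recommended for <= 10,000 tables
        "num_workers": num_workers,
    }


def build_post_request(workspace_url, token, path, payload):
    """Prepare (but do not send) an authenticated JSON POST request."""
    return urllib.request.Request(
        url=workspace_url.rstrip("/") + path,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def start_cluster_request(workspace_url, token, cluster_id):
    """Prepare the request that asks Databricks to start an existing cluster."""
    return build_post_request(
        workspace_url, token, "/api/2.0/clusters/start",
        {"cluster_id": cluster_id},
    )
```

A prepared request can be sent with `urllib.request.urlopen(request)` once the placeholder workspace URL, token, and cluster ID have been replaced with real values.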
