Data Collector

Deprecated: As of January 2024, the Monte Carlo Data Collector Deployment Model has been deprecated in favor of the Agent and Object Storage Deployment models. Please see Architecture & Deployment Options for more information.

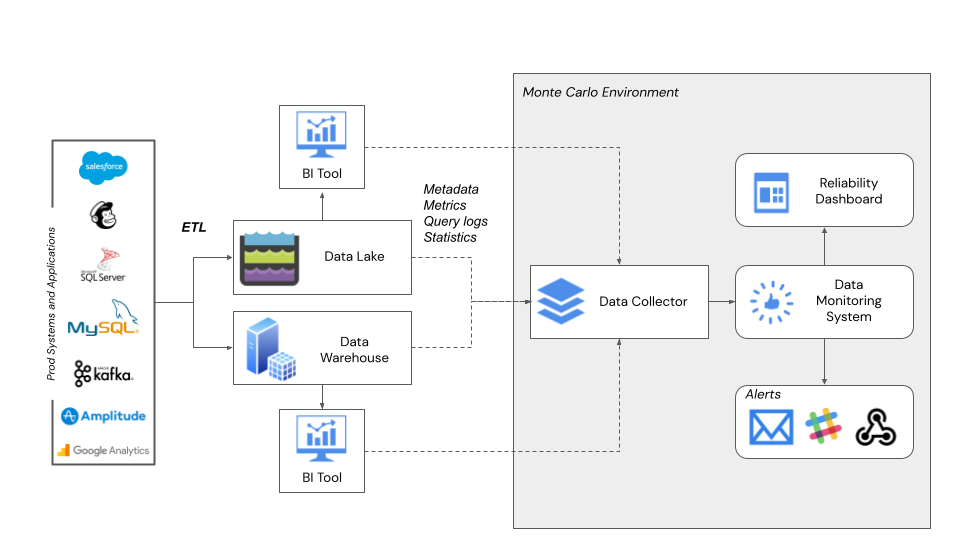

Monte Carlo uses a data collector to connect to data warehouses, data lakes, and BI tools in order to extract metadata, logs, and statistics. The data collector is deployed in Monte Carlo's secure AWS environment for customers including Fortune 500 companies, which keeps the deployment process seamless and allows for best-in-class customer support. This section outlines the architecture and deployment options of the data collector.

Architecture

The data collector architecture, shown below, is designed to maximize security when interacting with your data.

[Figure: Architecture]

Deployment Options

SaaS Option

Monte Carlo is a SaaS platform. With this option, Monte Carlo deploys and manages the data collector. This makes it easier for us to upgrade the infrastructure, monitor the collector operationally, and provide better debugging support.

When Monte Carlo hosts the data collector, the main configuration required is network connectivity, which your Monte Carlo representative will help you set up.

Hybrid Option

Additional steps: This hosting method is available only to a limited set of customers. Please contact your account team if you would like the ability to self-host.

If you choose the hybrid option, see the additional requirements below, and be advised that it limits Monte Carlo's ability to provide best-in-class support.

The SaaS deployment option is strongly recommended but not required. If you have more stringent security or compliance requirements, deploying the collector in your own AWS environment is a viable option. Please see our Hybrid Solution documentation for more details on this route.
