Troubleshooting & FAQ: Databricks

What Databricks platforms are supported?

All three Databricks cloud-platforms (AWS, GCP and Azure) are supported!

What about Delta Lake?

Delta Tables are supported and delta size and freshness metrics are monitored with automated monitors. You can also opt in to any field health, dimension, custom SQL and SLI monitors as well. See here for additional details.

We also support Streaming Tables & Materialized Views, as they are often used with Delta Live Tables

What about my non Delta tables?

Yes -- you can opt into field health, dimension, custom SQL and SLI monitors.

How many Databricks workspaces are supported?

We can support multiple workspaces by setting up additional integrations. If you are using the unity catalog, you only need to set up a single connection to that catalog, even if there are multiple workspaces connected to it.

How do I exclude schemas from collection?

https://docs.getmontecarlo.com/docs/usage

Are there any limitations?

- Volume is shown in bytes, not rows. From an asset page, click to opt-into row monitoring, or contact us.

- Freshness SLOs with Glue or an external Hive Metastore are not supported.

- Table Lineage is only available with Unity Catalog

- Query Logs are only available with Unity Catalog for Queries run with SQL Warehouses.

Can I use another Query Engine instead of Databricks SQL?

We recommend using a SQL Warehouse as the query engine for Databricks, but other query engines may work, as specified under "Data Lakes"

How do I handle a "Cannot make databricks job request for a DC with disabled remote updates" error?

If you have disabled remote updates on your Data Collector we cannot automatically provision resources in your Databricks workspace using the CLI. Please reach out to your account representative for details on how to create these resources manually.

How do I handle a "A Databricks connection already exists" error?

This means you have already connected to Databricks. You cannot have more than one Databricks metastore or Databricks delta integration.

How do I handle a "Scope monte-carlo-collector-gateway-scope already exists" error?

This means a scope with this name already exists in your workspace. You can specify creating a scope with a different name using the --databricks-secret-scope flag.

Alternatively, after carefully reviewing usage, you can delete the scope via the Databricks CLI/API. Please ensure you are not using this scope elsewhere as any secrets attached to the scope are not recoverable. See details here.

How do I handle a "Path (/monte_carlo/collector/integrations/collection.py) already exists" error?

This means a notebook with this name already exists in your workspace. If you can confirm this is a notebook provisioned by Monte Carlo and there are no existing jobs you should be able to delete the notebook via the Databricks CLI/API. See details here. Otherwise please reach out to your account representative.

How do I retrieve job logs?

- Open your Databricks workspace.

- Select Workflows from the sidebar menu.

- Select Jobs from the top and search for a job containing the name

monte-carlo-metadata-collection. - Select the job.

- Select any run to review logs for that particular execution. The jobs should all show

Succeededfor the status, but for partial failures (e.g. S3 permission issues) the log output will contain the errors and overall error counts.

I received a "Privilege SELECT is not applicable" error granting permissions in Unity Catalog. What should I do?

If the command results in an error like the one below, your UC metastore was created during the UC public preview period.

Privilege SELECT is not applicable to this entity [...:CATALOG/CATALOG_STANDARD]. If this seems unexpected, please check the privilege version of the metastore in use [0.1].Follow Databricks instructions to upgrade the privilege model to 1.0 or configure permissions according to the old model.

Why do I see different lineages between Unity Catalog and Monte Carlo?

Monte Carlo builds lineage using the system tables provided from Unity Catalog. We expire nodes that have not seen an update for 7 days to de-noise the lineage, whereas Databricks does not expire the nodes for 1 year. This might cause an infrequently updated node to not show up in MC lineage but remain in Unity lineage. Please feel free to reach out to us if this is an issue for your use case.

Why do I see duplicate Databricks jobs?

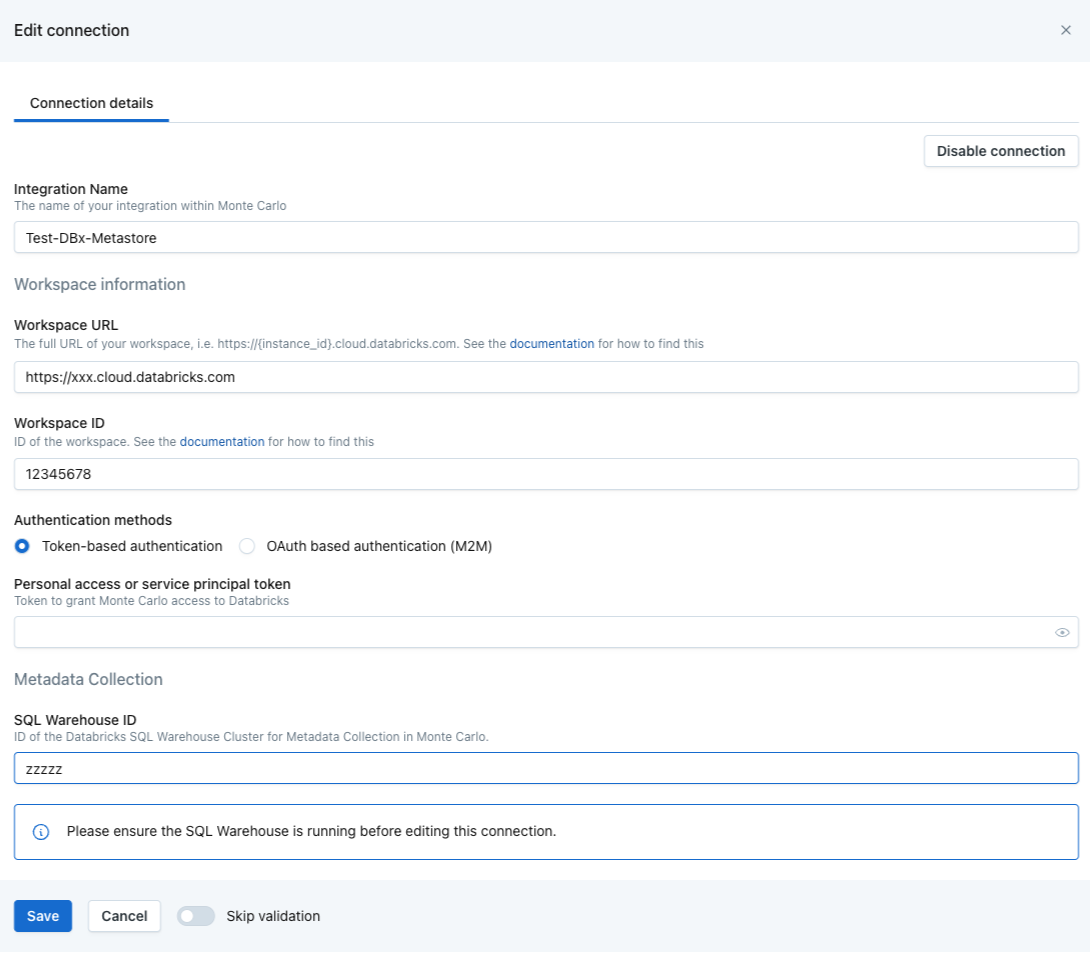

This usually happens when you have multiple Databricks integrations connected to different workspaces, but the Workspace ID is not specified in one or more of the connections.

To fix this:

- Go to Settings → Integrations.

- Click Edit on your Databricks integration.

- Enter the correct Workspace ID.

- Click Save.

This will ensure each integration is linked to the right workspace and prevent job duplication.

How long do Databricks jobs and workflows stay visible on the Assets page if they don’t have new runs?

Jobs and workflows will remain listed on the Assets page as long as they have at least one run in the past seven days. If a job has had no runs for more than seven days, it will still be stored in our database—but it won’t appear on the Assets page until a new run occurs. Once you trigger a new run, the job and all of its past runs will automatically reappear.

Why am I seeing freshness updates if I only ran an OPTIMIZE or a VACUUM query, which doesn’t change the data in my table??

Monte Carlo retrieves freshness information using DESCRIBE DETAIL queries. Databricks updates the last altered time of a table when OPTIMIZE or VACCUM queries run. As a result, Monte Carlo interprets these changes as freshness events, even though the table’s content hasn’t actually changed.

Updated 7 months ago