Azure: Agent Deployment

How to create and register

Prerequisites

- You are an admin in Azure and have installed Terraform (>= 1.12.2) with Azure Auth (for step 1).

- You are an Account Owner (for step 2).

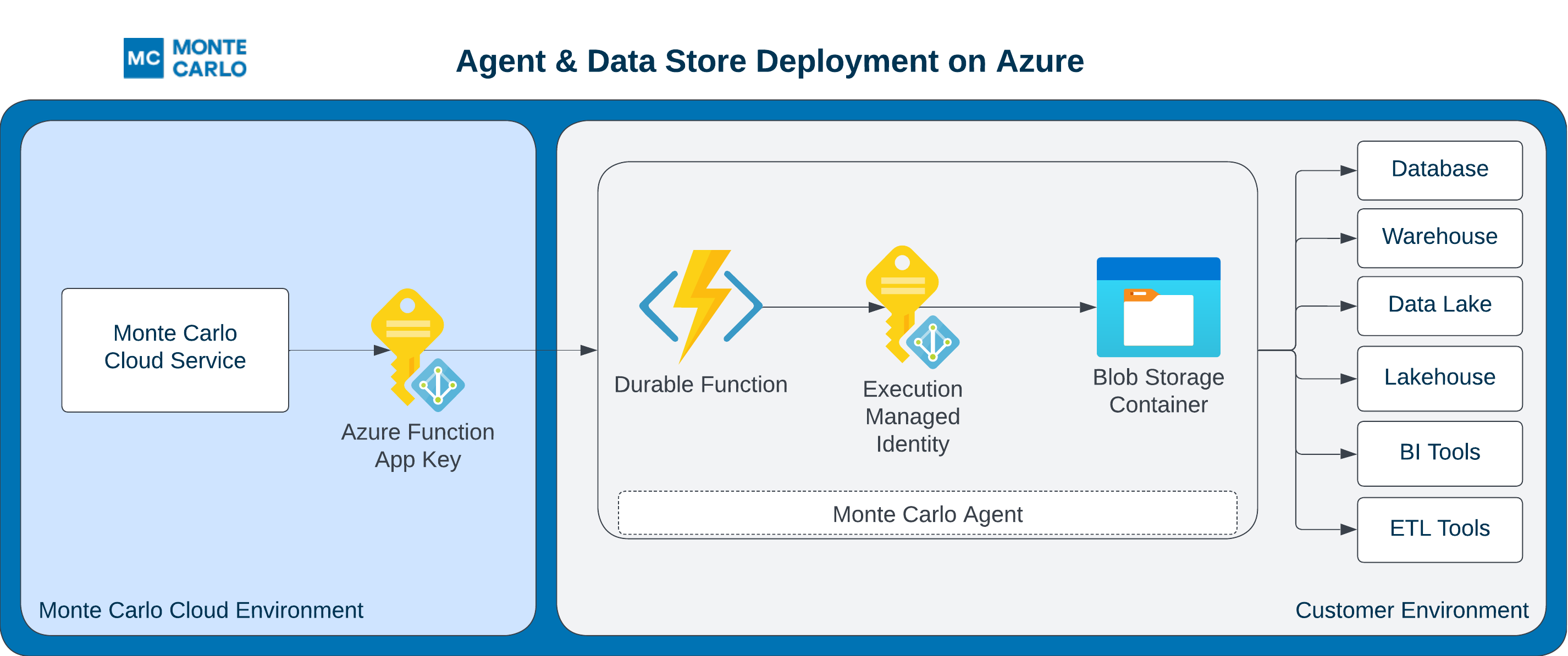

This guide outlines how to set up an Agent (with object storage) in your Azure cloud.

The FAQs answer common questions like how to review resources and what integrations are supported.

Steps

1. Deploy the Agent

You can use the mcd-agent Terraform module to deploy the Agent and manage resources as code (IaC).

For instance, using the following example Terraform config:

    module "apollo" {
      source  = "monte-carlo-data/mcd-agent/azurerm"
      version = "1.0.3"
    }

    output "resource_group" {
      value       = module.apollo.mcd_agent_resource_group_name
      description = "Agent service resource group."
    }

    output "function_url" {
      value       = module.apollo.mcd_agent_function_url
      description = "The URL for the agent."
    }

    output "function_name" {
      value       = module.apollo.mcd_agent_function_name
      description = "Agent function name."
    }

You can build and deploy via:

    terraform init && terraform apply

Please note that this module is configured to delete all resources when the resource group is deleted (e.g., when executing terraform destroy). Ensure you review your resources and take appropriate measures before proceeding. For more information, see here.
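Before running terraform destroy, it can help to see what currently lives in the resource group and what Terraform plans to remove. The following is a minimal shell sketch, not part of the module: the function name review_before_destroy is hypothetical, and it assumes you run it from the initialized Terraform directory with the Azure CLI logged in. It relies only on the resource_group output defined above and on standard `az resource list` and `terraform plan -destroy` commands.

```shell
# Hypothetical helper: preview what would be affected by `terraform destroy`.
# Assumes the current directory holds the initialized Terraform configuration
# and that the Azure CLI is authenticated.
review_before_destroy() {
  local rg
  # Resource group name comes from the "resource_group" output defined above.
  rg="$(terraform output -raw resource_group)" || return 1

  echo "Resources in resource group ${rg}:"
  az resource list --resource-group "$rg" --output table || return 1

  echo "Terraform destroy plan (preview only):"
  # `plan -destroy` only prints the plan; it does not delete anything.
  terraform plan -destroy
}
```

Calling `review_before_destroy` prints both listings without changing any resources, so it can be run as often as needed before deciding to proceed.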

When deploying an agent, if you wish to connect to a VNet, please see the details here. Specifying a VNet is not strictly required to run the agent, but it enables certain connectivity scenarios, such as when you have an IP allowlist for your resource, want to peer, use PrivateLink for egress, or deploy within your existing network.

Additional module inputs, options, and defaults can be found here. Other details can be found here.

2. Register the Agent

After deploying the agent, you can register it via either the Monte Carlo UI or the CLI.

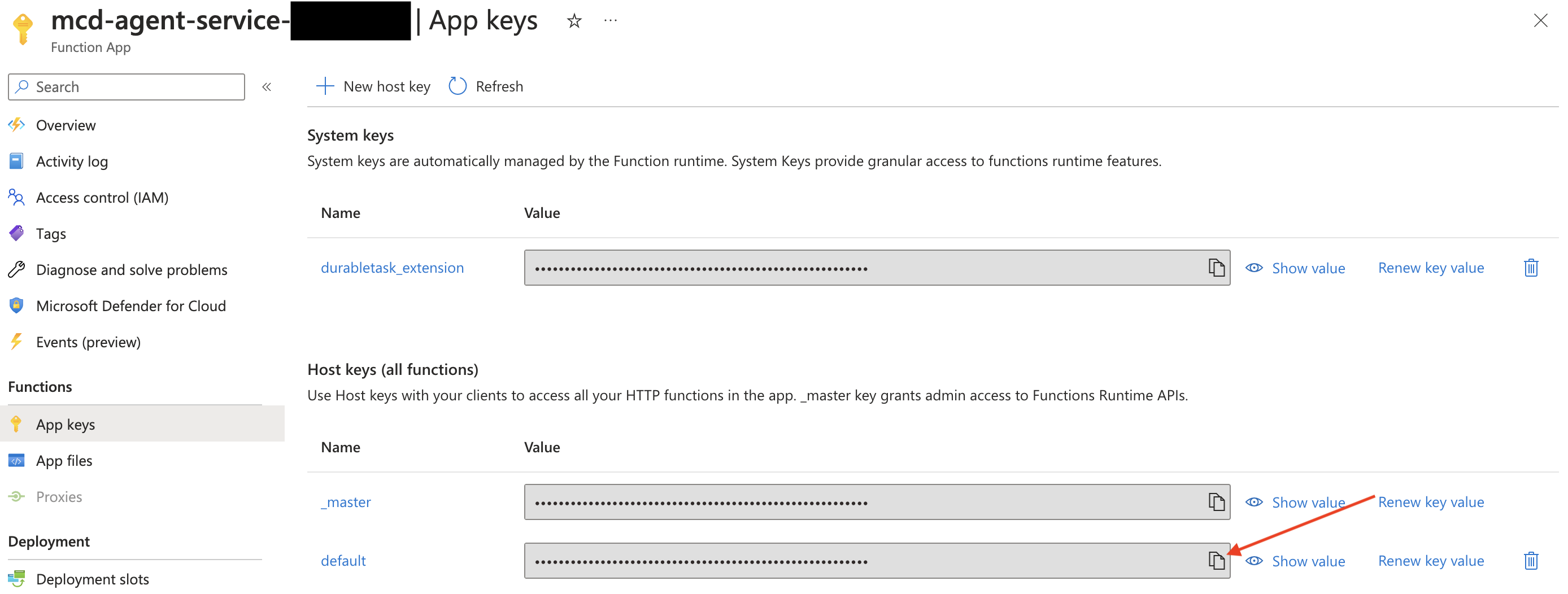

See here for examples of how to retrieve the Terraform output (i.e., the registration input). Note that the Application Key is the "Default Host Key" in Azure Function Apps.

After this step is complete, all supported integrations using this deployment will automatically use this agent (and the object store for troubleshooting and temporary data). You can add these integrations as you normally would using Monte Carlo's UI wizard or CLI.

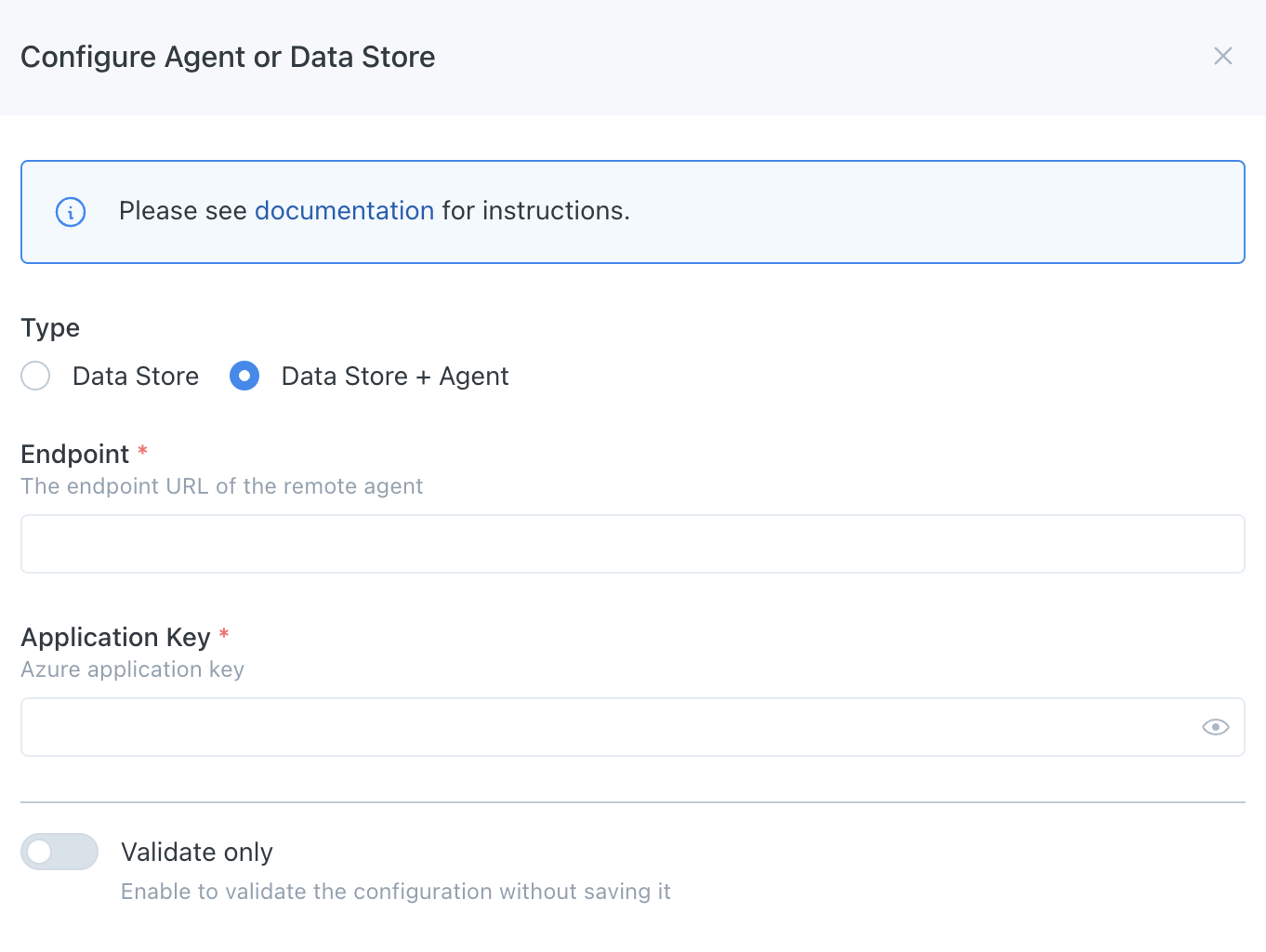

UI

If you are onboarding a new account, you can also register by following the steps on the onboarding screen.

- Navigate to settings/integrations/agents and select the Create button.

- Follow the onscreen wizard for the "Azure" Platform and "Data Store + Agent" Type.

Azure Agent Registration Wizard

CLI

Use montecarlo agents register-azure-agent to register.

See reference documentation here. And see here for how to install and configure the CLI.

    montecarlo agents register-azure-agent --url https://mcd-agent-service-example.azurewebsites.net --app-key -1

FAQs

What integrations does the Agent support?

The agent supports all integrations except for the following:

- Data Lake Query Logs from S3 Buckets are not supported: Learn more.

- Tableau requires using the connected app authentication flow: Learn more.

Note that onboarding (connecting) any supported integration using this deployment will use the agent if one is provisioned. Otherwise, any other integrations will use the cloud service to connect directly.

Some integrations, such as dbt Core, Atlan, and Airflow, either leverage our developer toolkit or are managed by a third party and do not require an agent. These integrations natively push data to Monte Carlo, so an agent is not needed.

Can I use more than one Agent?

Yes, please reach out to [email protected] or contact your account representative if you would like to use more than one.

Can I review agent resources and code?

Absolutely! You can find details here:

How do I retrieve registration input from Terraform?

The endpoint (url) can be retrieved via: terraform output function_url.

The application key (default) can be fetched via the az functionapp keys list command using the function name and resource group from terraform output. For instance:

    az functionapp keys list -g $(terraform output -raw resource_group) -n $(terraform output -raw function_name) | jq -r '.functionKeys.default'

If you prefer, this value can also be retrieved in the Azure portal from the Function Apps service page. For instance:

Azure portal example
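The two lookups above can be chained straight into the registration command. The following is a minimal shell sketch under stated assumptions: the function names (agent_url, agent_app_key, register_agent) are hypothetical, the commands are run from the Terraform working directory, and the Azure CLI and Monte Carlo CLI are installed and authenticated. It uses the Azure CLI's built-in `--query` (JMESPath) option in place of jq, which is an alternative to the pipeline shown above rather than the documented method.

```shell
# Hypothetical helpers that chain the documented commands.

# Print the agent endpoint (the --url registration input).
agent_url() {
  terraform output -raw function_url
}

# Print the default host key (the --app-key registration input).
# --query/-o tsv is the Azure CLI's own JMESPath filter, used here instead of jq.
agent_app_key() {
  az functionapp keys list \
    -g "$(terraform output -raw resource_group)" \
    -n "$(terraform output -raw function_name)" \
    --query 'functionKeys.default' -o tsv
}

# Register the agent using both values.
register_agent() {
  montecarlo agents register-azure-agent \
    --url "$(agent_url)" \
    --app-key "$(agent_app_key)"
}
```

Running `register_agent` after `terraform apply` avoids copying the key by hand. Note that the key is still passed as a command-line argument, so treat your shell history and process listings accordingly.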

How do I monitor the Agent?

Please refer to the documentation here.

How do I upgrade the Agent?

Please refer to the documentation here.

Can I further constrain inbound access (ingress) to the Agent?

Absolutely! By default this is done via the function app key, but if you prefer, you can further restrict requests via an IP allowlist. For instance, you can:

1. Refer to the documentation for the list of IP addresses that need to be allowlisted for your platform version.

2. Update the configuration for the agent's function app. For instance, on the Azure portal*:

   - Navigate to the Networking setting and select the "Public network access" configuration.

   - Update the network access to "Enabled from select virtual networks and IP addresses" and add a rule for the IP address from step #1. Save your configuration.

*Note that changes made on the Azure portal might be reverted via Terraform. You might want to consider using the ip_restriction block to manage as code.
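The same kind of rule can also be added from the command line. The following is a minimal shell sketch, assuming the Azure CLI is authenticated and the command is run from the Terraform working directory; the function name allow_agent_ingress and its argument order are hypothetical, while `az functionapp config access-restriction add` is the standard Azure CLI command for function app access rules. As with portal changes, a rule added this way might be reverted by a later Terraform run.

```shell
# Hypothetical helper: allow one IP range to reach the agent's function app.
# Arguments: <rule name> <CIDR range> [priority, defaults to 100]
# The resource group and function name are read from the Terraform outputs.
allow_agent_ingress() {
  az functionapp config access-restriction add \
    --resource-group "$(terraform output -raw resource_group)" \
    --name "$(terraform output -raw function_name)" \
    --rule-name "$1" \
    --action Allow \
    --ip-address "$2" \
    --priority "${3:-100}"
}
```

For example, `allow_agent_ingress monte-carlo 203.0.113.10/32` would add a single allow rule; the address shown is a placeholder from a documentation-only range and must be replaced with the addresses listed for your platform version.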

For more information on connectivity, please refer to our Network Connectivity documentation.

Can I use private endpoints to configure inbound access (ingress) to the agent?

Yes, please refer to the documentation for more details.

Can I further constrain outbound access (egress) from the Agent?

Absolutely! As with any Azure Function, you can control egress in multiple ways. You can find more details here.

Some scenarios where you might want to do this can include:

- You want to allowlist IP connectivity between the agent and your resource.

- You want to deploy the agent in a new VNet with peering and/or set up a Private Link between services.

- You want to deploy the agent in your existing VNet.

Depending on your integration, this might be necessary to establish connectivity.

To connect the agent to a VNet, specify a subnet using the subnet_id variable in the module (version 0.1.3 or newer required). If needed, you can use this Azure CLI command to fetch the subnet ID.

Note that the subnet must already be delegated to Microsoft.Web/serverFarms, or the deployment will fail.
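Because a missing delegation causes the deployment to fail, it may be worth checking the subnet before applying. The following is a minimal shell sketch, assuming an authenticated Azure CLI; the function names subnet_id and subnet_is_delegated are hypothetical, and this is one possible way to fetch the ID with `az network vnet subnet show`, not necessarily the command referenced above.

```shell
# Hypothetical helper: print the ID of a subnet (the value for subnet_id).
# Arguments: <resource group> <VNet name> <subnet name>
subnet_id() {
  az network vnet subnet show \
    --resource-group "$1" --vnet-name "$2" --name "$3" \
    --query id --output tsv
}

# Hypothetical helper: succeed only if the subnet is delegated to
# Microsoft.Web/serverFarms. Argument: <subnet ID>
subnet_is_delegated() {
  az network vnet subnet show --ids "$1" \
    --query 'delegations[].serviceName' --output tsv \
    | grep -qi '^Microsoft\.Web/serverFarms$'
}
```

A possible usage is `id="$(subnet_id my-rg my-vnet agent-subnet)" && subnet_is_delegated "$id" && echo "$id"`, where the resource group, VNet, and subnet names are placeholders. The ID is printed only when the delegation is already in place.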

Can I use an existing resource group?

Yes, you can specify the name of an existing resource group by using the existing_resource_group_name variable in the module (version 1.0.3 or newer required).

Please note that we strongly recommend not sharing resource groups with other jobs, as Monte Carlo may overwrite existing data.
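Since the module is pointed at a resource group by name, a quick pre-flight check that the name exists can catch typos before deployment. The following is a minimal shell sketch, assuming an authenticated Azure CLI; the function name resource_group_exists is hypothetical, and `az group exists` is the standard Azure CLI command, which prints true or false.

```shell
# Hypothetical pre-flight check: succeed only if the named resource group exists
# in the currently selected subscription. Argument: <resource group name>
resource_group_exists() {
  [ "$(az group exists --name "$1")" = "true" ]
}
```

For example, `resource_group_exists my-agent-rg || echo "resource group not found"`, where my-agent-rg is a placeholder for the value you plan to pass as existing_resource_group_name.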

Can I use existing storage accounts and private endpoints to access them?

Please note that certain features requiring pre-signed URLs are not supported with private storage accounts. For the Azure agent, this mainly impacts the following scenarios:

- Large results:

  - You can either request a Private Link to the storage account from the Monte Carlo Cloud, or contact support to increase the result size limit so that pre-signed URLs are not needed for larger results.

  - If you do not take one of these actions, the agent may not operate as expected, and jobs may be affected, even if you follow the example provided below.

  - If you choose to enable a Private Link for the storage account, you may also want to establish a Private Link for general communication between Monte Carlo and the agent. Please note that this would require two separate Private Link requests:

- Downloading breach and query results as a CSV:

  - This is not supported when using private storage accounts.

Yes, you can create the required storage accounts and configure the Terraform module to use them. When creating the storage accounts, you can disable public access and create private endpoints to allow access from the VNet used by the agent.

Please note that version 1.0.3 or newer of the agent module is required and that we strongly recommend not sharing storage accounts with other jobs, as Monte Carlo may overwrite existing data.

See here for an example of how this can be done using Terraform. You can find the general requirements for using this feature below, in case you wish to create the storage accounts by another method.

Please note: In the Terraform example above, public access to the storage accounts is allowed (but only from the IP address where Terraform is running). This is a workaround for a limitation in the Azure provider for Terraform, which requires access to the storage accounts in order to create shares or containers.

If you create the storage accounts manually (or using another IaC tool without this limitation), you can completely disable public access.

If you use Terraform, you can manually disable public access after the module is deployed. However, please remember that you will need to restore access from the IP address running Terraform each time you want to deploy the module again.

Please note the following requirements when using this feature:

- VNet with at least two subnets:

  - One subnet for the agent, delegated to Microsoft.Web/serverFarms, as described in the example above.

  - Another subnet for the private endpoints.

- Two storage accounts:

  - First, create a storage account for Durable Functions:

    - You must create a share in this account. The name of this share is one of the variables you need to pass to the agent module.

    - You need to create private endpoints for the following sub-resources in the subnet to be used for private endpoints: blob, table, file, and queue.

    - For each private endpoint, you must enable "Private DNS Zone" integration. For example, for the blob sub-resource, the private endpoint must be available as <storage_account_name>.privatelink.blob.core.windows.net in the VNet where the agent is deployed.

  - Second, create a storage account for Agent data (i.e., the data store):

    - You must create a container in this account. The name of this container is one of the variables you need to pass to the agent module.

    - You need to create a single private endpoint for the blob sub-resource in the subnet designated for private endpoints.

    - "Private DNS Zone" integration must be enabled. The private endpoint should be accessible as <storage_account_name>.privatelink.blob.core.windows.net in the VNet where the agent is deployed.

- Use the following configuration for the agent, specifying these variables:

      existing_storage_accounts = {
        agent_durable_function_storage_account_name       = <name of the durable functions storage account>
        agent_durable_function_storage_account_access_key = <access key to access the durable functions storage account>
        agent_durable_function_storage_account_share_name = <name of the share created in the durable functions storage account>
        agent_data_storage_account_name                   = <name of the storage account used for agent data>
        agent_data_storage_container_name                 = <name of the container created in the storage account used for agent data>
        private_access                                    = true
      }
      subnet_id = <ID of the subnet for the agent>

- Either request a Private Link to the storage account from Monte Carlo Cloud or contact support to increase the result size limit, so that pre-signed URLs are not required for larger results. See details at the top of this FAQ.

You can find additional documentation about restricting access to storage accounts from Azure Functions here.

Can I use managed identities instead of access keys for the storage account used for Durable Functions?

Managed identities for the storage account used by Azure Durable Functions are not officially supported or certified by Monte Carlo. While Azure allows this configuration, it has not been tested by Monte Carlo and is not supported by our Terraform module. Enabling this configuration is considered a customization and may limit Monte Carlo’s ability to provide support.

Managed identities are supported for the storage account used for agent data (data sampling).

How do I check the reachability between Monte Carlo and the Agent?

Please refer to the documentation here.

How do I debug connectivity between the Agent and my integration?

Please refer to the documentation here.
