Databricks (metastore)

Prerequisites: To complete this guide, you will need admin rights in your Databricks workspace.

To connect Monte Carlo to a Databricks central Hive metastore, follow these steps:

- Create a cluster in your Databricks workspace.

- Create an API key in your Databricks workspace.

- Provide service account credentials to Monte Carlo.

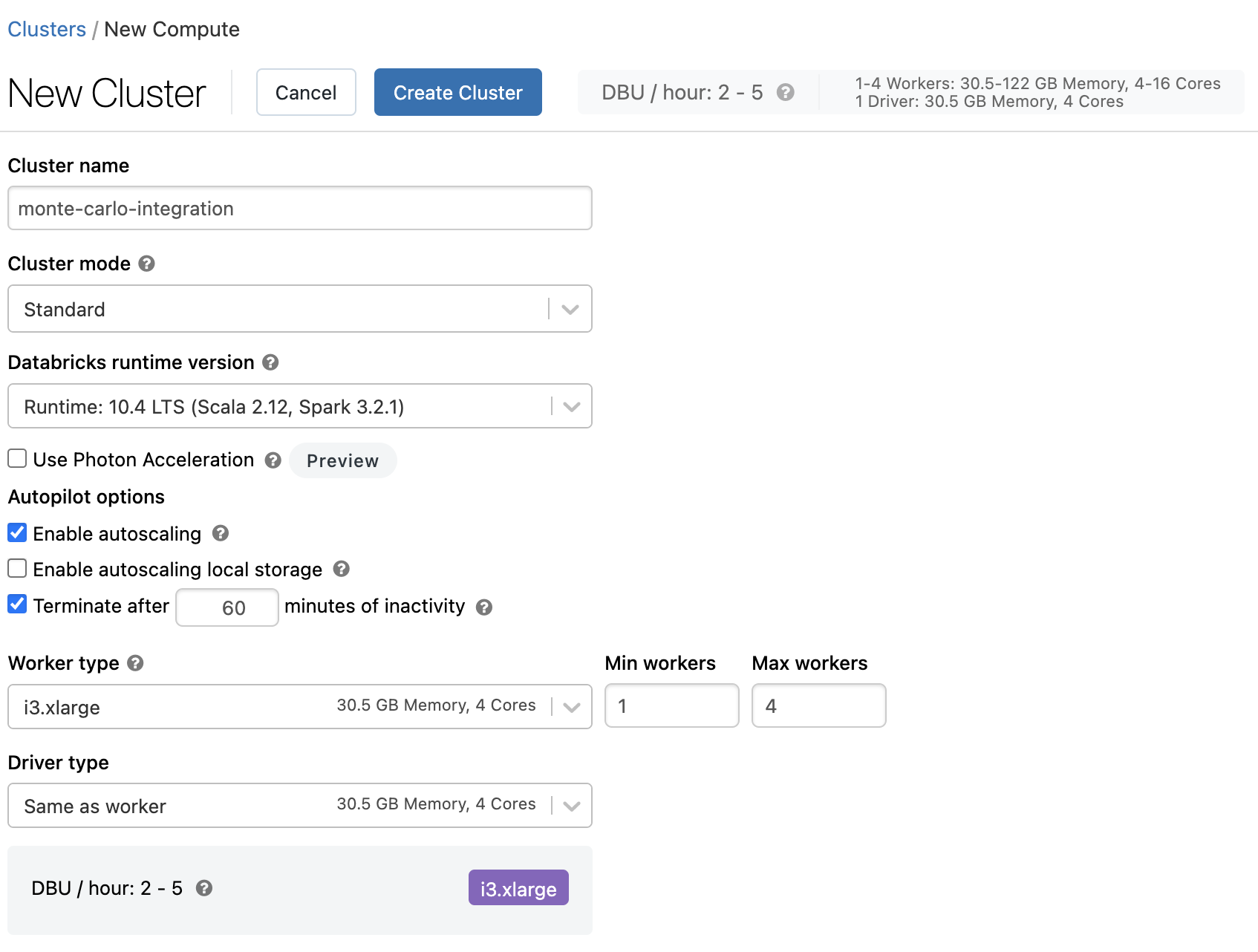

Create a Databricks cluster

Monte Carlo requires a Databricks runtime version with Spark >= 3.0.

- Follow these steps to create an all-purpose cluster in your workspace. For environments with 10,000 tables or fewer, Monte Carlo recommends using an i3.2xlarge node type. Otherwise, please reach out to your account representative for help right-sizing.

- Follow this guide to retrieve the cluster ID and start the cluster.

- Confirm that this cluster has access to the catalogs, schemas, and tables that need to be monitored. To check this, run the following commands in a notebook attached to your new cluster. If all of the commands work and show the objects you expect, the cluster is configured correctly. If they do not show the expected objects, the cluster settings may be at fault; ensure that the cluster is connecting to the correct metastore.

SHOW CATALOGS

SHOW SCHEMAS IN <CATALOG>

SHOW TABLES IN <CATALOG.SCHEMA>

DESCRIBE EXTENDED <CATALOG.SCHEMA.TABLE>
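The four checks above can be generated for a specific table so they are easy to run in order. The following is a minimal sketch, not part of Monte Carlo's tooling: the function name and the placeholder names `hive_metastore`, `default`, and `my_table` are assumptions for illustration. It only builds the statements; in a Databricks notebook attached to the new cluster you could pass each one to `spark.sql(...)`.

```python
def metastore_check_statements(catalog, schema, table):
    """Return the four visibility checks, from broadest to narrowest."""

    def quote(identifier):
        # Spark SQL quotes identifiers with backticks; a literal backtick is doubled.
        return "`" + identifier.replace("`", "``") + "`"

    c, s, t = quote(catalog), quote(schema), quote(table)
    return [
        "SHOW CATALOGS",
        f"SHOW SCHEMAS IN {c}",
        f"SHOW TABLES IN {c}.{s}",
        f"DESCRIBE EXTENDED {c}.{s}.{t}",
    ]


if __name__ == "__main__":
    # Placeholder names; substitute a catalog, schema, and table you expect to monitor.
    for statement in metastore_check_statements("hive_metastore", "default", "my_table"):
        print(statement)
        # In a notebook, where `spark` is predefined:
        # spark.sql(statement).show(truncate=False)
```

Running the statements from broadest to narrowest makes it easier to see at which level (catalog, schema, or table) access stops.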

Cluster creation wizard example

Provide service account credentials

Ensure the cluster is running! Before moving forward, please ensure your Databricks cluster is running; otherwise the command will time out.

Provide connection details for the Databricks central Hive metastore using Monte Carlo's CLI:

- Follow this guide to install and configure the CLI. Requires >= 0.25.1.

- Use the command montecarlo integrations add-databricks-metastore to set up Databricks connectivity (please ensure the Databricks cluster is running before running this command). For reference, see help for this command below:

This command creates resources in your Databricks workspace. By default, this command automates the creation of a secret, scope, directory, notebook and job to enable collection in your workspace. If you wish to create these resources manually instead, please reach out to your account representative. Otherwise, only the databricks-workspace-url, databricks-workspace-id, databricks-cluster-id and databricks-token options are required. See this guide for how to locate your workspace ID and URL. The cluster ID and token should have been generated in the previous steps.
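As an illustration of the minimal invocation, the sketch below assembles the command with only the four required options. The helper name and all example values (URL, workspace ID, cluster ID, connection name) are placeholders, not real identifiers. Passing `-1` as the token relies on the behavior described in the command's help text, where the CLI prompts for the token with hidden input.

```python
import shlex


def build_add_metastore_command(workspace_url, workspace_id, cluster_id,
                                token="-1", name=None, validate_only=False):
    """Assemble the CLI invocation as an argument list (nothing is executed)."""
    for label, value in [("workspace URL", workspace_url),
                         ("workspace ID", workspace_id),
                         ("cluster ID", cluster_id)]:
        if not value:
            raise ValueError(f"missing required value: {label}")
    command = [
        "montecarlo", "integrations", "add-databricks-metastore",
        "--databricks-workspace-url", workspace_url,
        "--databricks-workspace-id", workspace_id,
        "--databricks-cluster-id", cluster_id,
        # "-1" asks the CLI to prompt for the token, keeping it out of shell history.
        "--databricks-token", token,
    ]
    if name:
        command += ["--name", name]
    if validate_only:
        # Runs the connection tests without adding the integration.
        command.append("--validate-only")
    return command


if __name__ == "__main__":
    cmd = build_add_metastore_command(
        "https://example.cloud.databricks.com",  # placeholder workspace URL
        "1234567890123456",                      # placeholder workspace ID
        "0123-456789-abcde123",                  # placeholder cluster ID
        name="databricks-metastore",             # placeholder connection name
    )
    print(shlex.join(cmd))
    # To actually run it: subprocess.run(cmd, check=True)
```

Building the argument list first makes it possible to review the exact command, for example with `validate_only=True`, before anything is created in the workspace.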

Usage: montecarlo integrations add-databricks-metastore [OPTIONS]

Setup a Databricks metastore integration. For metadata and health queries.

Options:

--name TEXT  Friendly name for the created warehouse. Name must be unique.

--databricks-workspace-url TEXT  Databricks workspace URL. [required]

--databricks-workspace-id TEXT  Databricks workspace ID. [required]

--databricks-cluster-id TEXT  Databricks cluster ID. [required]

--databricks-token TEXT  Databricks access token. If you prefer a prompt (with hidden input) enter -1. [required]

--skip-secret-creation  Skip secret creation.

--databricks-secret-key TEXT  Databricks secret key. [default: monte-carlo-collector-gateway-secret]

--databricks-secret-scope TEXT  Databricks secret scope. [default: monte-carlo-collector-gateway-scope]

--skip-notebook-creation  Skip notebook creation. This option requires setting 'databricks-job-id', 'databricks-job-name', and 'databricks_notebook_path'.

--databricks-job-id TEXT  Databricks job id, required if notebook creation is skipped. This option requires setting 'skip-notebook-creation'.

--databricks-job-name TEXT  Databricks job name, required if notebook creation is skipped. This option requires setting 'skip-notebook-creation'.

--databricks-notebook-path TEXT  Databricks notebook path, required if notebook creation is skipped. This option requires setting 'skip-notebook-creation'.

--databricks-notebook-source TEXT  Databricks notebook source, required if notebook creation is skipped. (e.g. "resources/databricks/notebook/v1/collection.py") This option requires setting 'skip-notebook-creation'.

--collector-id UUID  ID for the data collector. To disambiguate accounts with multiple collectors.

--skip-validation  Skip all connection tests. This option cannot be used with 'validate-only'.

--validate-only  Run connection tests without adding. This option cannot be used with 'skip-validation'.

--auto-yes  Skip any interactive approval.

--option-file FILE  Read configuration from FILE.

--help  Show this message and exit.

FAQs

What Databricks platforms are supported?

All three Databricks platforms (AWS, GCP and Azure) are supported!

What if I am already using the Unity Catalog (UC)? Is that supported too?

It is! See details here.

What about Delta Lake?

This integration does support Delta tables too! Delta size and freshness metrics are monitored out of the box. You can also opt in to any field health, dimension, custom SQL and SLI monitors as well. See here for additional details.

What about my non Delta tables?

Like with Delta tables you can opt into field health, dimension, custom SQL and SLI monitors. To enable write throughput and freshness please enable S3 metadata events. See here for details on how to set up this integration.

What if I'm using an external Hive metastore or Glue catalog instead of the Databricks central Hive metastore with Delta? Is this supported too?

This is supported! See details here.

How do I connect to multiple Databricks workspaces?

Connect to multiple workspaces by creating a separate connection per workspace. Give each connection a unique name; a collector notebook will be created in each workspace.
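One way to keep per-workspace connections organized is to describe each workspace once and derive one command per entry. This is a sketch under assumptions: the workspace names, URLs, and IDs below are invented placeholders, and the helper is not part of the Monte Carlo CLI. It checks that connection names are unique (as `--name` requires) before producing any command.

```python
from collections import Counter

# Placeholder workspace descriptions; replace with your own values.
WORKSPACES = [
    {"name": "databricks-prod", "url": "https://prod.example.cloud.databricks.com",
     "workspace_id": "1111111111111111", "cluster_id": "0101-000000-prod0001"},
    {"name": "databricks-dev", "url": "https://dev.example.cloud.databricks.com",
     "workspace_id": "2222222222222222", "cluster_id": "0202-000000-dev00001"},
]


def per_workspace_commands(workspaces):
    """Return one add-databricks-metastore argument list per workspace."""
    counts = Counter(ws["name"] for ws in workspaces)
    duplicates = sorted(name for name, n in counts.items() if n > 1)
    if duplicates:
        raise ValueError(f"connection names must be unique, duplicated: {duplicates}")
    return [
        ["montecarlo", "integrations", "add-databricks-metastore",
         "--name", ws["name"],
         "--databricks-workspace-url", ws["url"],
         "--databricks-workspace-id", ws["workspace_id"],
         "--databricks-cluster-id", ws["cluster_id"],
         "--databricks-token", "-1"]  # -1: prompt for each workspace's token
        for ws in workspaces
    ]


if __name__ == "__main__":
    for command in per_workspace_commands(WORKSPACES):
        print(" ".join(command))
```

Each workspace needs its own token, so the sketch uses the prompt option rather than storing tokens alongside the workspace list.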

Do I need to set up a Spark query engine connection too?

Nope. The cluster you created above can also be used for granularly tracking data health on particular tables (i.e. creating opt-in monitors).

What is the minimum Data Collector version required?

The latest Databricks integration requires at least v14887 of the Data Collector. You can use the Monte Carlo UI or CLI to verify the current version of your Data Collector and upgrade if necessary.

$ montecarlo collectors list

How do I handle a "Cannot make databricks job request for a DC with disabled remote updates" error?

If you have disabled remote updates on your Data Collector we cannot automatically provision resources in your Databricks workspace using the CLI. Please reach out to your account representative for details on how to create these resources manually.

How do I handle a "A Databricks connection already exists" error?

This means you have already connected to Databricks. You cannot have more than one Databricks metastore or Databricks Delta integration.

How do I handle a "Scope monte-carlo-collector-gateway-scope already exists" error?

This means a scope with this name already exists in your workspace. You can specify creating a scope with a different name using the --databricks-secret-scope flag.

Alternatively, after carefully reviewing usage, you can delete the scope via the Databricks CLI/API. Please ensure you are not using this scope elsewhere as any secrets attached to the scope are not recoverable. See details here.
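To review what a scope holds before deleting it, the Databricks Secrets REST API offers a secrets listing endpoint and a scope deletion endpoint. The sketch below only constructs the two requests and sends nothing by itself; the helper names, workspace URL, and token are placeholders, and you should confirm the endpoints against the Databricks API reference for your platform before relying on them.

```python
import json
from urllib.parse import urlencode
from urllib.request import Request


def list_secrets_request(workspace_url, token, scope):
    """Build a GET request listing the secret keys stored in a scope."""
    query = urlencode({"scope": scope})
    return Request(
        f"{workspace_url.rstrip('/')}/api/2.0/secrets/list?{query}",
        headers={"Authorization": f"Bearer {token}"},
        method="GET",
    )


def delete_scope_request(workspace_url, token, scope):
    """Build a POST request deleting a scope. Its secrets are not recoverable."""
    body = json.dumps({"scope": scope}).encode("utf-8")
    return Request(
        f"{workspace_url.rstrip('/')}/api/2.0/secrets/scopes/delete",
        data=body,
        headers={"Authorization": f"Bearer {token}",
                 "Content-Type": "application/json"},
        method="POST",
    )


if __name__ == "__main__":
    url = "https://example.cloud.databricks.com"  # placeholder workspace URL
    scope = "monte-carlo-collector-gateway-scope"
    review = list_secrets_request(url, "<token>", scope)
    print(review.get_method(), review.full_url)
    # Send with urllib.request.urlopen(review) and inspect the keys first;
    # only then consider urlopen(delete_scope_request(url, "<token>", scope)).
```

Keeping the listing and the deletion as separate steps mirrors the advice above: review usage first, delete second.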

How do I handle a "Path (/monte_carlo/collector/integrations/collection.py) already exists" error?

This means a notebook with this name already exists in your workspace. If you can confirm this is a notebook provisioned by Monte Carlo and there are no existing jobs you should be able to delete the notebook via the Databricks CLI/API. See details here. Otherwise please reach out to your account representative.

How do I retrieve job logs?

- Open your Databricks workspace.

- Select Workflows from the sidebar menu.

- Select Jobs from the top and search for a job containing the name monte-carlo-metadata-collection.

- Select the job.

- Select any run to review logs for that particular execution. The jobs should all show Succeeded for the status, but for partial failures (e.g. S3 permission issues) the log output will contain the errors and overall error counts.
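The same lookup can be approximated outside the UI with the Databricks Jobs REST API. The sketch below assumes the response shape of the Jobs API 2.1 list endpoint (a `jobs` array whose entries carry `job_id` and `settings.name`); the helper names and the sample data are illustrative only. It filters jobs by name client-side and builds the URL for listing a job's recent runs. Because a run can show Succeeded despite partial failures, the run's log output still needs to be read.

```python
from urllib.parse import urlencode

JOB_NAME_FRAGMENT = "monte-carlo-metadata-collection"


def matching_job_ids(jobs_list_response, fragment=JOB_NAME_FRAGMENT):
    """Return IDs of jobs whose name contains the fragment."""
    return [
        job["job_id"]
        for job in jobs_list_response.get("jobs", [])
        if fragment in job.get("settings", {}).get("name", "")
    ]


def runs_list_url(workspace_url, job_id, limit=5):
    """Build the URL for listing the most recent runs of one job."""
    query = urlencode({"job_id": job_id, "limit": limit})
    return f"{workspace_url.rstrip('/')}/api/2.1/jobs/runs/list?{query}"


if __name__ == "__main__":
    # Made-up sample shaped like a jobs list response.
    sample = {"jobs": [
        {"job_id": 7, "settings": {"name": "nightly-etl"}},
        {"job_id": 42, "settings": {"name": "monte-carlo-metadata-collection-example"}},
    ]}
    for job_id in matching_job_ids(sample):
        print(runs_list_url("https://example.cloud.databricks.com", job_id))
```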
