S3 Events - Metadata

S3 events are used to track data freshness and volume at scale for tables stored on S3.

During setup, the Monte Carlo CLI is used to interact with the Monte Carlo API as well as with AWS. Please follow this guide to install and configure the CLI on your local machine.

Enable events

- Run montecarlo integrations configure-metadata-events --connection-type [databricks-metastore|glue|hive-mysql].

$ montecarlo integrations configure-metadata-events --help

Usage: montecarlo integrations configure-metadata-events [OPTIONS]

Configure S3 metadata events. For tracking data freshness and volume at

scale. Requires s3 notifications to be configured first.

Options:

--connection-type [databricks-metastore|glue|hive-mysql]

Type of the integration. This option cannot

be used with 'connection-id'. [required]

--name TEXT Friendly name for the created warehouse.

Name must be unique.

--collector-id UUID ID for the data collector. To disambiguate

accounts with multiple collectors.

--option-file FILE Read configuration from FILE.

--help Show this message and exit.

The easiest way to set up S3 events in AWS is to use the Monte Carlo CLI (Option 1), which automates the infrastructure creation and policy configuration. If that is not possible, proceed to Option 2.

Option 1: Use the Monte Carlo CLI [Recommended]

If you host your own Data Collector:

- Run montecarlo integrations add-events with the necessary parameters.

If Monte Carlo hosts your Data Collector:

- Coordinate with Monte Carlo Support. Once they give you the go-ahead, follow the steps below.

- Run montecarlo integrations create-event-topic with the necessary parameters.

- Let Monte Carlo Support know that you have run this command, and they will perform the necessary steps on their side.

- Run montecarlo integrations create-bucket-side-event-infrastructure with the necessary parameters.

$ montecarlo integrations add-events --help

Usage: montecarlo integrations add-events [OPTIONS]

Setup complete S3 event notifications for a lake.

Options:

--bucket TEXT Name of bucket to enable events for.

--prefix TEXT Limit the notifications to objects starting

with a prefix (e.g. 'data/').

--suffix TEXT Limit notifications to objects ending with a

suffix (e.g. '.csv').

--topic-arn TEXT Use an existing SNS topic (same region as

the bucket). Creates a topic if one is not

specified or if an MCD topic does not

already exist in the region.

--buckets-filename TEXT Filename that contains bucket config to

enable events for This option cannot be used

with 'bucket-name'.

--event-type [airflow-logs|metadata|query-logs]

Type of event to setup. [default: metadata;

required]

--collector-aws-profile TEXT Override the AWS profile use by the CLI for

operations on SQS/Collector. This can be

helpful if the resources are in different

accounts.

--resource-aws-profile TEXT Override the AWS profile use by the CLI for

operations on S3/SNS. This can be helpful if

the resources are in different accounts.

--auto-yes Skip any interactive approval.

--collector-id UUID ID for the data collector. To disambiguate

accounts with multiple collectors.

--option-file FILE Read configuration from FILE.

--help Show this message and exit.

Usage: cli.py integrations create-event-topic [OPTIONS]

Setup Event Topic for S3 event notifications in a lake.

Options:

--bucket TEXT Name of bucket to enable events for.

--prefix TEXT Limit the notifications to objects starting

with a prefix (e.g. 'data/').

--suffix TEXT Limit notifications to objects ending with a

suffix (e.g. '.csv').

--topic-arn TEXT Use an existing SNS topic (same region as

the bucket). Creates a topic if one is not

specified or if an MCD topic does not

already exist in the region.

--buckets-filename TEXT Filename that contains bucket config to

enable events for This option cannot be used

with 'bucket-name'.

--event-type [airflow-logs|metadata|query-logs]

Type of event to setup. [default: metadata;

required]

--resource-aws-profile TEXT Override the AWS profile use by the CLI for

operations on S3/SNS. This can be helpful if

the resources are in different accounts.

--auto-yes Skip any interactive approval.

--collector-id UUID ID for the data collector. To disambiguate

accounts with multiple collectors.

--option-file FILE Read configuration from FILE.

--help Show this message and exit.

Usage: cli.py integrations create-bucket-side-event-infrastructure

[OPTIONS]

Setup Bucket Side S3 event infrastructure for a lake.

Options:

--bucket TEXT Name of bucket to enable events for.

--prefix TEXT Limit the notifications to objects starting

with a prefix (e.g. 'data/').

--suffix TEXT Limit notifications to objects ending with a

suffix (e.g. '.csv').

--topic-arn TEXT Use an existing SNS topic (same region as

the bucket). Creates a topic if one is not

specified or if an MCD topic does not

already exist in the region.

--buckets-filename TEXT Filename that contains bucket config to

enable events for This option cannot be used

with 'bucket-name'.

--event-type [airflow-logs|metadata|query-logs]

Type of event to setup. [default: metadata;

required]

--resource-aws-profile TEXT Override the AWS profile use by the CLI for

operations on S3/SNS. This can be helpful if

the resources are in different accounts.

--auto-yes Skip any interactive approval.

--collector-id UUID ID for the data collector. To disambiguate

accounts with multiple collectors.

--option-file FILE Read configuration from FILE.

--help Show this message and exit.

Using the --buckets-filename flag

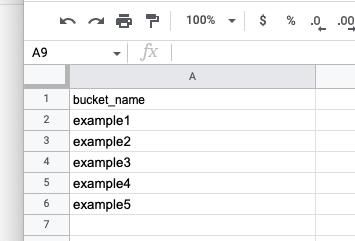

If you are using the --buckets-filename flag, the file must be in .csv format; pass the path to the file as the value. For example:

montecarlo integrations add-events --buckets-filename .\testing.csv --event-type metadata

The CSV file is a multi-line file that begins with the header bucket_name, followed by one bucket name per line.
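As a rough illustration of that file layout, the sketch below writes such a CSV with Python's standard library. It assumes the file contains only the single bucket_name column described above; the helper name write_buckets_csv and the bucket names are hypothetical and not part of the Monte Carlo CLI.

```python
import csv
import os
import tempfile


def write_buckets_csv(path, bucket_names):
    """Write the bucket_name header followed by one bucket name per line."""
    with open(path, "w", newline="") as handle:
        writer = csv.writer(handle, lineterminator="\n")
        writer.writerow(["bucket_name"])
        for name in bucket_names:
            writer.writerow([name])


# Hypothetical bucket names, written to a temporary directory for illustration.
example_path = os.path.join(tempfile.mkdtemp(), "testing.csv")
write_buckets_csv(example_path, ["example-bucket-a", "example-bucket-b"])

with open(example_path) as handle:
    print(handle.read())
```

The resulting path is what you would pass to --buckets-filename.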

We will not override existing events!

Please be aware that this command will not override any existing bucket events. If you enable events on a bucket that already has event notifications, the command will fail, because adding our events would require modifying your existing event configuration, and we do not want to do that. This relates to SNS fanout, which allows you to publish from one endpoint to multiple destinations and thus process events in parallel and asynchronously. For more information, see: https://docs.aws.amazon.com/sns/latest/dg/sns-common-scenarios.html

You can bypass this on a single bucket by following Option 2 outlined below.

To bypass this for a list of buckets, you can use the following bash command to loop over a .txt file listing your buckets and call the command with the single-bucket flag --bucket for each one. This enables events on any buckets that do not yet have events; you can then modify the buckets that do by hand using Option 2 below:

for bucket in $(cat buckets.txt); do <command>; done

Where <command> is:

montecarlo integrations add-events --bucket "$bucket" --event-type metadata

This lets you enable events on a list of buckets one by one, skipping those that already have standing event notifications.
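If you prefer to keep track of which buckets were skipped, the same loop can be sketched in Python. This is a minimal sketch, not part of the Monte Carlo CLI: the function names are hypothetical, and it assumes the CLI exits with a non-zero status when the command fails for a bucket.

```python
import subprocess


def parse_bucket_names(text):
    """Return the non-empty, stripped lines of a buckets.txt-style listing."""
    return [line.strip() for line in text.splitlines() if line.strip()]


def build_add_events_command(bucket):
    """Build the single-bucket add-events invocation shown above."""
    return [
        "montecarlo", "integrations", "add-events",
        "--bucket", bucket,
        "--event-type", "metadata",
    ]


def enable_events(bucket_names):
    """Run the command once per bucket and return the buckets where it failed.

    Assumption: a non-zero exit status means the bucket was not configured,
    for example because it already has event notifications.
    """
    failed = []
    for bucket in bucket_names:
        result = subprocess.run(build_add_events_command(bucket))
        if result.returncode != 0:
            failed.append(bucket)
    return failed
```

For example, enable_events(parse_bucket_names(open("buckets.txt").read())) would return the buckets to handle by hand with Option 2.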

Option 2: Use the AWS UI

The following steps are only required if setting up S3 events using the CLI is not possible.

Some steps change the data collector infrastructure, while others create infrastructure in the AWS account hosting the data. Each step is marked with "Data account" or "Collector account". If the data collector is deployed to the same account as the data, all steps are to be executed in the same account. If the data collector is managed by Monte Carlo, please reach out to your representative to execute the "Collector account" steps.

- Retrieve the bucket ARN (Data account)

- Retrieve the SQS queue ARN (Collector account)

- Retrieve the Data Collector AWS account ID (Collector account)

- Create a SNS Topic (Data account)

- Update the SQS access policy (Collector account)

- Create a SNS subscription (Data account)

- Create event notification (Data account)

To complete this guide, you will need admin credentials for AWS.

1. Retrieve the bucket ARN (Data account)

- Open the S3 Console and search for the bucket that you would like to enable events for.

- Select the bucket.

- Select “Copy Bucket ARN” and save the bucket ARN for later. This value is referred to below as S3_ARN.

2. Retrieve the SQS queue ARN (Collector account)

If the data collector is managed by Monte Carlo, please reach out to your representative for this value instead.

- Open the CloudFormation console and search for the Monte Carlo data collector.

- Select the stack.

- Select the “Outputs” tab.

- Save the Metadata Queue ARN for later (Key: MetadataEventQueue). This value is referred to below as EVENT_QUEUE_ARN.

3. Retrieve the Data Collector AWS account ID (Collector account)

If the data collector is managed by Monte Carlo, please reach out to your representative for this value instead.

- From the console, select your username in the upper right corner.

- Select “My Account”.

- Save the Account Id (without dashes) for later. This value is referred to below as COLLECTOR_ACCOUNT_ID.

4. Create a SNS Topic (Data account)

- Open the SNS console and select “Topics”. IMPORTANT: Make sure you are in the same region as the bucket you want to add an event for.

- Select “Create Topic”.

- Choose "Standard" type.

- Enter a meaningful name.

- Select “Create Topic” and save the Topic ARN for later. This value is referred to below as SNS_ARN.

- The topic you just created has an access policy. Update the policy by appending the following two policy statements, replacing values in brackets with the SNS_ARN, S3_ARN and COLLECTOR_ACCOUNT_ID saved above.

- Save.

{
  "Effect": "Allow",
  "Principal": {
    "AWS": "*"
  },
  "Action": "SNS:Publish",
  "Resource": "<SNS_ARN>",
  "Condition": {
    "StringEquals": {
      "aws:SourceArn": "<S3_ARN>"
    }
  }
}

{
  "Sid": "__dc_sub",
  "Effect": "Allow",
  "Principal": {
    "AWS": "<COLLECTOR_ACCOUNT_ID>"
  },
  "Action": "sns:Subscribe",
  "Resource": "<SNS_ARN>"
}

5. Update the SQS access policy (Collector account)

If the data collector is managed by Monte Carlo, you can skip these steps and simply send the SNS Topic ARN to your representative.

- Open the SQS console.

- Search for the queue. The name follows this structure: {CF_STACK}-MetadataEventQueue-{RANDOM_STR}

- Select the “Access Policy” tab and select “Edit”.

If the access policy is empty or looks something like this:

{
  "Version": "2012-10-17",
  "Id": "arn:aws:sqs:<region>:<account>:<name>/SQSDefaultPolicy"
}

then paste the following, replacing the values in brackets with the SNS_ARN, COLLECTOR_ACCOUNT_ID and EVENT_QUEUE_ARN values saved above.

{
  "Version": "2008-10-17",
  "Statement": [
    {
      "Sid": "__owner",
      "Effect": "Allow",
      "Principal": {
        "AWS": "arn:aws:iam::<COLLECTOR_ACCOUNT_ID>:root"
      },
      "Action": "SQS:*",
      "Resource": "<EVENT_QUEUE_ARN>"
    },
    {
      "Sid": "__sender",
      "Effect": "Allow",
      "Principal": {
        "AWS": "*"
      },
      "Action": "SQS:SendMessage",
      "Resource": "<EVENT_QUEUE_ARN>",
      "Condition": {
        "ArnLike": {
          "aws:SourceArn": [
            "<SNS_ARN>"
          ]
        }
      }
    }
  ]
}

If the access policy already has a statement with the Sid “__sender” (i.e. it looks like the policy above), append your SNS_ARN to the aws:SourceArn list instead.

"aws:SourceArn": [
  "arn:aws:sns:::existing_sns_topic",
  "<SNS_ARN>"
]

6. Create a SNS subscription (Data account)

- Open the SNS console and select “Subscriptions”.

- Select “Create Subscription”.

- Select the topic ARN you saved above in the "Create a SNS Topic" subsection.

- Select Amazon SQS as the protocol.

- IMPORTANT: Be sure to select “Enable raw message delivery”.

- Select (or paste) the SQS queue ARN saved above (EVENT_QUEUE_ARN).

- Select “Create Subscription”.

- Validate the status is “Confirmed”.
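For readers who script their AWS setup, the console steps above correspond roughly to a single SNS subscribe request. The sketch below only builds the request parameters; it assumes you would pass them to the boto3 SNS client's subscribe method with credentials for the Data account. The helper name is hypothetical, and this is not the method this guide prescribes.

```python
def build_subscribe_request(sns_arn, event_queue_arn):
    """Build keyword arguments for an SNS subscribe call targeting an SQS queue.

    RawMessageDelivery mirrors the "Enable raw message delivery" checkbox.
    """
    return {
        "TopicArn": sns_arn,
        "Protocol": "sqs",
        "Endpoint": event_queue_arn,
        "Attributes": {"RawMessageDelivery": "true"},
        "ReturnSubscriptionArn": True,
    }


# Placeholder ARNs, for illustration only.
request = build_subscribe_request(
    "arn:aws:sns:us-east-1:111122223333:example-topic",
    "arn:aws:sqs:us-east-1:444455556666:example-queue",
)
print(request)
```

With boto3 installed, the call would look like boto3.client("sns").subscribe(**request). You would still need to validate in the console that the subscription status is “Confirmed”.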

7. Create event notification (Data account)

- Open the S3 Console and search for the bucket that you would like to enable events for.

- Select the bucket.

- Select the "Properties" tab.

- Select “Create event notification” under Event notifications.

- Fill in a meaningful name.

- Optionally specify a prefix and/or suffix.

- Select “All object create events” and “All object delete events” under Event types.

- Enter the SNS topic ARN (SNS_ARN) as the Destination topic ARN. Alternatively, choose the topic from the dropdown.
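The notification created in the steps above can also be expressed as an S3 notification configuration document. The following is a minimal sketch that builds such a document, assuming you would apply it with the boto3 S3 client's put_bucket_notification_configuration method; the helper name is hypothetical. Note that this API call replaces the bucket's entire notification configuration, so it is only appropriate for buckets without existing notifications.

```python
def build_notification_configuration(sns_arn, prefix=None, suffix=None):
    """Build a notification configuration that sends object create and
    delete events to an SNS topic, optionally filtered by key prefix/suffix."""
    topic_config = {
        "TopicArn": sns_arn,
        # Equivalent to "All object create events" and "All object delete events".
        "Events": ["s3:ObjectCreated:*", "s3:ObjectRemoved:*"],
    }
    rules = []
    if prefix:
        rules.append({"Name": "prefix", "Value": prefix})
    if suffix:
        rules.append({"Name": "suffix", "Value": suffix})
    if rules:
        topic_config["Filter"] = {"Key": {"FilterRules": rules}}
    return {"TopicConfigurations": [topic_config]}


# Placeholder ARN and prefix, for illustration only.
config = build_notification_configuration(
    "arn:aws:sns:us-east-1:111122223333:example-topic", prefix="data/"
)
print(config)
```

With boto3 installed, this would be applied as boto3.client("s3").put_bucket_notification_configuration(Bucket="<bucket_name>", NotificationConfiguration=config).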

Tips and tricks

Prefixes with special characters

If you want to limit notifications to objects whose prefix contains special characters, AWS requires that you URL-encode the prefix when creating the trigger.

For instance, if you want to create a filter for the foo=bar/ prefix, the configuration should be foo%3Dbar/.
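One way to produce the encoded value is Python's standard URL quoting, sketched below. The helper name is hypothetical, and it assumes that keeping "/" unescaped matches what AWS expects for your prefix, as in the example above.

```python
from urllib.parse import quote


def encode_filter_value(value):
    """URL-encode special characters in a prefix, leaving "/" as-is."""
    return quote(value, safe="/")


print(encode_filter_value("foo=bar/"))  # foo%3Dbar/
```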
