Airflow Logs from S3

Our S3 logs integration currently only works for Airflow < 2.3. We're working on support for Airflow 2.3+.

Monte Carlo can ingest Airflow task logs from S3 when you are using the S3 task handler from the Amazon Airflow Provider. To allow Monte Carlo to ingest the task log files from S3:

- Verify Data Collector version

- Create IAM role

- Enable Airflow logs integration

- Add S3 events

- Tag tables with the DAG ID in Monte Carlo (optional)

To use the integration, your Airflow logs must use the default Airflow log format and the default Airflow log filename template.
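As a rough illustration of what "default log filename template" means here, the sketch below checks whether an S3 object key follows the layout Airflow used by default before 2.3, which is `{{ ti.dag_id }}/{{ ti.task_id }}/{{ ts }}/{{ try_number }}.log`. The function name `parse_default_log_key` and the sample keys are hypothetical, and this is not a description of how Monte Carlo itself parses keys. It is only a quick way to sanity-check your own bucket layout under that assumption.

```python
import re

# Assumed default log_filename_template for Airflow releases before 2.3:
#   {{ ti.dag_id }}/{{ ti.task_id }}/{{ ts }}/{{ try_number }}.log
# The optional leading prefix stands for whatever base path your
# remote log folder setting places in front of the templated part.
DEFAULT_KEY = re.compile(
    r"^(?:(?P<prefix>.+)/)?"
    r"(?P<dag_id>[^/]+)/(?P<task_id>[^/]+)/"
    r"(?P<ts>\d{4}-\d{2}-\d{2}T[^/]+)/(?P<try_number>\d+)\.log$"
)


def parse_default_log_key(key):
    """Return the key's components if it matches the assumed default layout, else None."""
    match = DEFAULT_KEY.match(key)
    if match is None:
        return None
    parts = match.groupdict()
    parts["try_number"] = int(parts["try_number"])
    return parts


# Hypothetical sample keys: the first follows the pre-2.3 layout, the second does not.
print(parse_default_log_key("airflow/logs/my_dag/load_orders/2022-03-01T00:00:00+00:00/1.log"))
print(parse_default_log_key("dag_id=my_dag/run_id=manual__2022-03-01/task_id=load_orders/attempt=1.log"))
```

If keys from your bucket come back as `None`, the filename template has probably been customized or the deployment is on a newer Airflow layout.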

Verify Data Collector Information

Version

The Airflow logs integration requires at least v2523 of the Data Collector, and either the hephaestus or janus infrastructure template. You can use the Monte Carlo CLI to verify the current version and template for your Data Collector.

$ montecarlo collectors list

v0.20.3+ of the CLI is required to see template information for your Data Collector.

If you see cloudformation:hephaestus:<version> for the Data Collector template, you will have the infrastructure required for this integration. If you see anything else, please contact Support for help getting migrated to the hephaestus template.

Infrastructure

You must have the correct event infrastructure created in your collector to enable Airflow logs. Confirm that it exists by following the steps below:

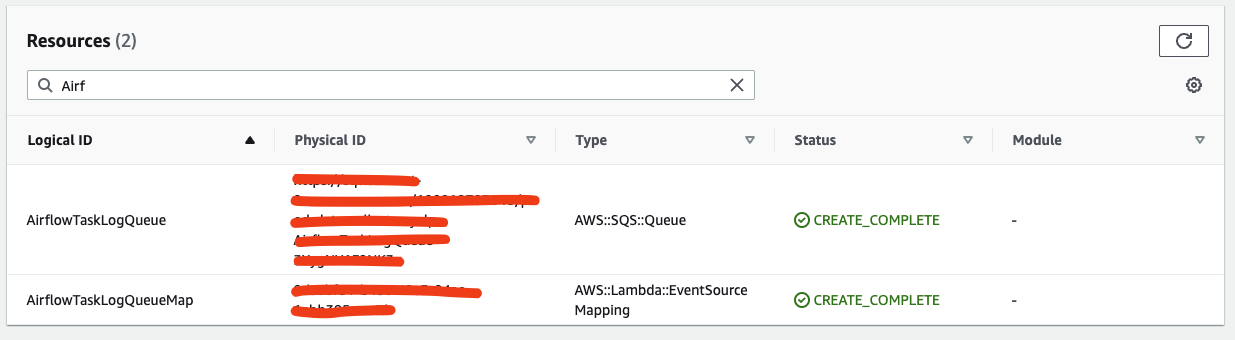

- Find your collector stack in the AWS account it is hosted in.

- Go to the "Resources" tab.

- Search for "Airflow". If you have the correct infrastructure, you should see two results: one for an SQS queue named "AirflowTaskLogQueue" and another for a mapping named "AirflowTaskLogQueueMap":

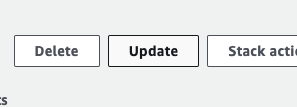

If you do not have these resources, you will need to make them before moving forward:

- Update your stack:

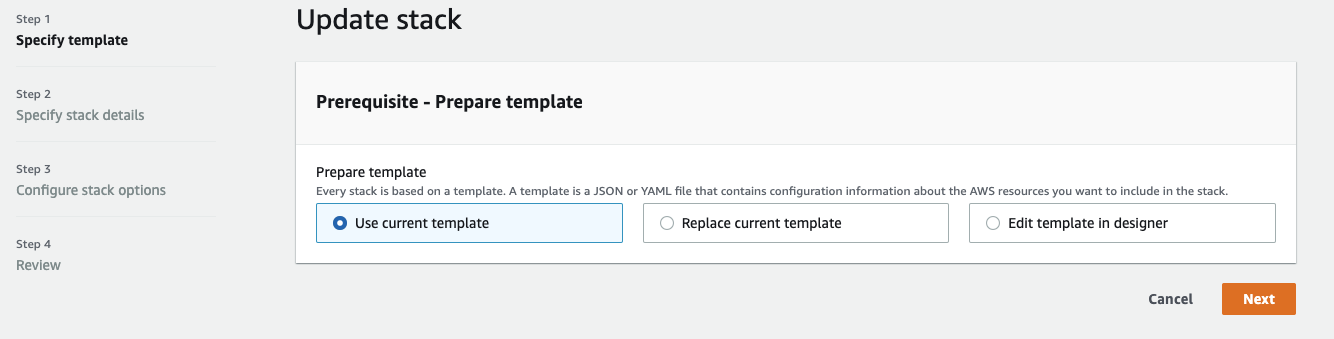

- Use the current template:

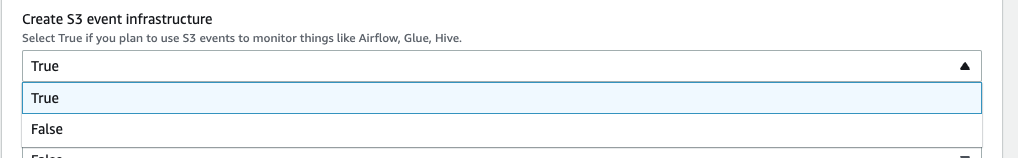

- Set "Create s3 event infrastructure" to "True":

The update should take only a couple of minutes, because it only creates the SQS queue used by the Airflow logs integration.

After the update completes successfully, proceed to creating the IAM role.

Create IAM role

Create the following IAM policy, which allows read access to your Airflow task logs. Replace <S3_ARN> with the ARN of the S3 bucket (plus an optional path prefix) that holds your Airflow task logs:

{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": "s3:GetObject",
      "Resource": "<S3_ARN>/*"
    }
  ]
}

Follow these steps to create an IAM role with this policy that Monte Carlo will be able to assume.
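To make the <S3_ARN> substitution concrete, here is a minimal sketch that fills in the policy above from a bucket name and an optional path prefix. The helper name `build_log_read_policy`, the bucket `example-airflow-logs`, and the prefix `airflow/logs` are illustrative assumptions; substitute your own values.

```python
import json


def build_log_read_policy(bucket, prefix=""):
    """Build the read-only policy document for the given bucket and optional prefix."""
    s3_arn = f"arn:aws:s3:::{bucket}"
    prefix = prefix.strip("/")  # tolerate leading or trailing slashes
    if prefix:
        s3_arn = f"{s3_arn}/{prefix}"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": "s3:GetObject",
                # Matches every object under the bucket (or under the prefix).
                "Resource": f"{s3_arn}/*",
            }
        ],
    }


# Hypothetical bucket and prefix, for illustration only.
policy = build_log_read_policy("example-airflow-logs", "airflow/logs")
print(json.dumps(policy, indent=2))
```

The printed JSON can be pasted into the IAM console or saved to a file for whichever tooling you use to create the policy.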

Enable Airflow logs integration

Use the Monte Carlo CLI to enable the Airflow S3 logs integration.

$ montecarlo integrations configure-airflow-log-events --help
Usage: montecarlo integrations configure-airflow-log-events [OPTIONS]

  Configure S3 events for Airflow task logs.

Options:
  --name TEXT          Friendly name for the created warehouse. Name must be
                       unique.
  --collector-id UUID  ID for the data collector. To disambiguate accounts
                       with multiple collectors.
  --role TEXT          Assumable role ARN to use for accessing AWS resources.
                       [required]
  --external-id TEXT   An external id, per assumable role conditions.
  --option-file FILE   Read configuration from FILE.
  --help               Show this message and exit.

If you created an assumable role in the previous step, use the --role option to specify the ARN of that role.
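As an illustration of how the options above fit together, the sketch below assembles a full invocation as an argument list and prints it as a shell command. It uses only flags shown in the help output. The helper name `build_configure_command`, the role ARN, and the integration name are placeholder assumptions, and the sketch prints the command rather than running it.

```python
import shlex


def build_configure_command(role_arn, name=None, collector_id=None, external_id=None):
    """Assemble the CLI invocation; only --role is required per the help text."""
    cmd = [
        "montecarlo", "integrations", "configure-airflow-log-events",
        "--role", role_arn,
    ]
    optional = [
        ("--name", name),
        ("--collector-id", collector_id),
        ("--external-id", external_id),
    ]
    for flag, value in optional:
        if value is not None:
            cmd += [flag, value]
    return cmd


# Placeholder role ARN and name, for illustration only.
cmd = build_configure_command(
    "arn:aws:iam::123456789012:role/example-mc-airflow-logs",
    name="airflow-s3-logs",
)
print(shlex.join(cmd))
```

Keeping the command as a list makes it straightforward to pass to `subprocess.run(cmd, check=True)` once the values are real, without worrying about shell quoting.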

Add S3 events

Follow the instructions here to add airflow-logs event notifications to the S3 bucket where your Airflow task logs are written.

Tag tables with DAG ID

This step is not required.

If you tag your tables in Monte Carlo with the IDs of their associated DAGs, you can filter Airflow task failures down to those from DAGs matching the tables in an incident. To do so, tag each table with airflow_dag_id as the key and the associated DAG ID as the value.

That's it! As your Airflow tasks write log files to the configured S3 bucket, they should become available in Monte Carlo in near real time.
