Agent Monitors Overview

Agent Observability capabilities, instrumentation setup, and monitor types for tracking agent behavior.

Overview

Agent Observability gives you full visibility into your AI agents, from the data that powers them to the prompts they receive and the outputs they generate. Instead of treating agents as black box systems, Monte Carlo provides a unified, end-to-end view across your data pipelines, models, tools, and agent workflows, so you can see exactly what happened, why it happened, and how to fix it.

Every agent run becomes a trace containing prompts, context, completions, token usage, latency, model metadata, errors, and workflow attributes. This level of detail enables teams to systematically evaluate output quality, detect silent failures, identify regressions, monitor cost and performance, and trace issues back to the upstream data or logic that shaped agent behavior.

With a warehouse-native architecture, all telemetry — including prompts and outputs — remains in your environment. Monte Carlo reads directly from your warehouse to provide the governance, security, and auditability enterprises expect, while giving you full visibility across diverse models, architectures, and workflows.

What Agent Observability unlocks

Agent Observability brings together data and AI in one connected view so you can deliver reliable, production-grade AI and detect issues fast.

- Trusted, production-grade AI with measurable quality

- Detection of subtle regressions, incomplete context, and behavioral drift before users are affected

- Quality evaluation at scale using customizable LLM-as-judge templates or deterministic checks

- Unified root-cause analysis across both data and AI layers

- Faster debugging with trace-level visibility

- Support for any model and any agent framework

- Monitor agents alongside the pipelines and data that feed them

Supported Warehouses

- Snowflake

- Databricks

- BigQuery

- Athena

Agent Telemetry Ingestion pattern

- Agents emit OpenTelemetry (OTLP) traces via the Monte Carlo SDK.

- An OTLP collector receives and processes the traces.

- The collector writes telemetry to object storage and/or directly into your warehouse.

- Monte Carlo reads the warehouse telemetry table for monitoring, evaluation, and alerting.

Using the same warehouse that stores your operational data makes it easy to correlate agent behavior with lineage, data health, and pipeline integrity.

Instrumenting your agent

To begin collecting agent telemetry, configure the following components:

- Install, set up tracing, and enhance tracing data with identifying attributes with the Monte Carlo OpenTelemetry SDK.

- Deploy an OpenTelemetry (OTLP) Collector, which receives and processes the spans emitted by your agent.

- Or, use Monte Carlo's hosted OpenTelemetry (OTLP) Collector to receive, process, and write the spans emitted by your agent into your data store to simplify infrastructure requirements. Note: Only AWS data stores are supported at this time.

- Configure your warehouse to ingest AI agent traces:

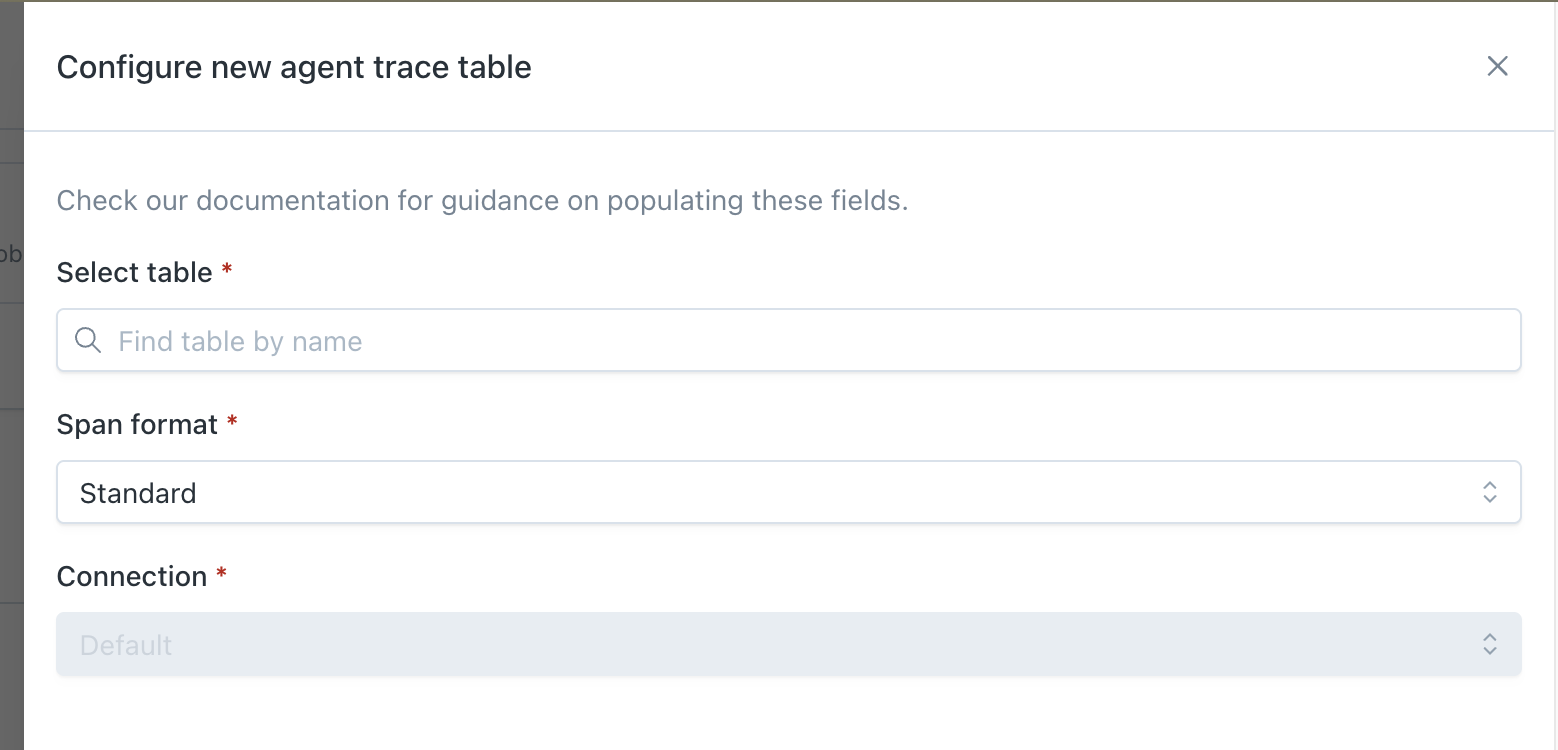

- Configure your trace table in Monte Carlo by visiting the Agent Observability settings page and selecting which table contains your AI Agent traces along with the format of the traces and which connection should be used to query this table.

Configuring Agent Monitors

Monte Carlo offers specialized types of agent monitors to give you granular control over agent monitoring:

-

Agent Evaluation Monitors: Monitor agent output quality by alerting when agent responses contain hallucinations, fail accuracy checks, or don't meet quality standards.

Creating an Evaluation Monitor -

Agent Metric Monitors: Alert on unexpected changes in agent performance metrics like latency spikes, token usage exceeding budgets, or error rate thresholds.

Creating an Agent Metric Monitor -

Agent Trajectory Monitors: Verify execution order and workflow patterns by alerting when tools are called in unexpected sequences or frequencies.

Creating an Agent Trajectory Monitor

Use cases and examples

For comprehensive agent monitoring, use all Agent monitor types together: Evaluation Monitors ensure output quality, Metric Monitors ensure operational reliability, and Trajectory Monitors ensure proper execution flow.

| Agent Evaluation Monitors | Agent Metric Monitors | Agent Trajectory Monitors | |

|---|---|---|---|

| Purpose | Measure output quality and correctness | Track operational health and performance | Validate tool call sequences |

| Primary use cases | Hallucination detection, accuracy checks, quality standards | Latency monitoring, cost control, error tracking | Detect dependency issues, recursive calls, unexpected execution sequences |

| What you're monitoring | Agent response quality, accuracy, completeness | Duration, token count, error rate, request volume | Tool call order, execution frequency, workflow steps |

| Best for | Validating "what" the agent produces | Monitoring "how" the agent performs | Ensuring agents follow expected execution paths |

| Alert examples | "Answer relevance is < 4", "Clarity score is anomalous" | "Max of total tokens is > 1,200", "Mean of duration is > 5s" | "delete_data occurs before check_permissions", "web_search occurs > 5 times" |

Updated 5 days ago